AI at the Edge with K3s and NVIDIA Jetson Nano: Object Detection and Real-Time Video Analytics

With the advent of new, powerful GPU-capable devices, the range of use cases we can run at the edge is expanding. Edge hardware is becoming more capable and more efficient as technology advances. NVIDIA, with its industry-leading GPUs, and Arm, the leading technology provider of processor IP, are making significant innovations and investments in the edge ecosystem. For instance, the NVIDIA Jetson Nano is one of the most cost-effective devices that can run GPU-enabled workloads and handle AI/ML data processing jobs. Additionally, cloud native technologies like Kubernetes have enabled developers to build lightweight, containerized applications for the edge. To enable a seamless cloud native software experience across a compute-diverse edge ecosystem, Arm has launched Project Cassini, an open, collaborative, standards-based initiative. It leverages the power of these heterogeneous Arm-based platforms to create a secure foundation for edge applications.

K3s, developed by Rancher Labs and now a CNCF Sandbox project, has become a key orchestration platform for these compact-footprint edge devices. As a Kubernetes distribution built for the edge, it is lightweight enough not to strain device RAM and CPU. Through the Kubernetes device plugin framework, workloads running on these devices can access their GPUs efficiently.

In typical scenarios, edge devices collect the data, while analytics and decoding are performed by their cloud counterparts. As edge devices become more powerful, we can now perform the AI/ML processing at the edge location itself.

As Mark Abrams from SUSE highlighted in his previous blog post on using GPUs with Rancher Kubernetes clusters deployed in the cloud, running GPU workloads on Kubernetes is seamless and efficient.

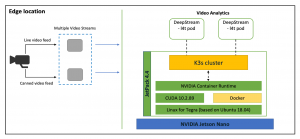

In this blog post, we’ll see how the combination of NVIDIA’s Jetson Nano and K3s brings GPUs to the edge and makes a compelling platform. The figure below depicts the high-level architecture of the use case:

Object Detection and Video Analytics at the Edge

We see an edge location with a video camera connected to a Jetson Nano device. The Jetson Nano runs NVIDIA JetPack, which bundles the Linux for Tegra (L4T) operating system with the libraries that enable the GPU.

In this setup, two video streams are passed as input to the NVIDIA DeepStream containers:

- A live video feed from a camera angled towards a parking lot

- A pre-recorded video with different types of objects: cars, bicycles, humans, etc.

We also have a K3s cluster deployed on the Jetson Nano that hosts the NVIDIA DeepStream pods. When the video streams are passed to the DeepStream pods, the analytics are performed on the device itself.

The output is then sent to a display attached to the Jetson Nano, where we can see the object classifications (cars, humans, etc.).

For more details on the architecture and the demo of the use case, check out this video: Object Detection and Video Analytics at the Edge

Configurations

Prerequisites: The following components should be installed and configured:

- Jetson Nano board

- Jetson OS (Linux for Tegra, installed with JetPack)

- Display attached to the Jetson Nano via HDMI

- A webcam attached to the Jetson Nano via USB

Change the Docker runtime to the NVIDIA runtime and install K3s

Jetson OS comes with Docker installed out of the box. Make sure you are running the latest Docker version, since it is GPU compatible. To check the default runtime, use the following command:

sudo docker info | grep Runtime

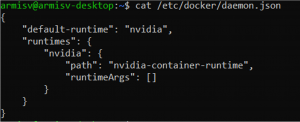

You can also see the current runtime configuration by checking the Docker daemon configuration file:

cat /etc/docker/daemon.json

Now, replace the contents of /etc/docker/daemon.json with the following (editing it requires sudo):

```json
{
  "default-runtime": "nvidia",
  "runtimes": {
    "nvidia": {
      "path": "nvidia-container-runtime",
      "runtimeArgs": []
    }
  }
}
```

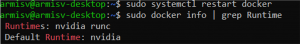
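If you prefer to script this change rather than edit the file by hand, the function below is a minimal sketch. The name write_nvidia_daemon_json is hypothetical; it assumes python3 is installed (used only to check the JSON syntax) and that you run it as root, for example from a sudo -i shell. Like the manual step, it replaces the whole file, so any other settings are not carried over; they remain in the .bak copy it creates.

```shell
# Back up the Docker daemon config and write one that makes "nvidia" the
# default runtime. The target path is a parameter so the function can be
# tried on a scratch file first; it defaults to the real config path.
write_nvidia_daemon_json() {
  local target="${1:-/etc/docker/daemon.json}"

  # Keep a copy of the existing file, if there is one.
  if [ -f "$target" ]; then
    cp "$target" "$target.bak" || return 1
  fi

  # Same content as the configuration shown above.
  cat > "$target" <<'EOF' || return 1
{
  "default-runtime": "nvidia",
  "runtimes": {
    "nvidia": {
      "path": "nvidia-container-runtime",
      "runtimeArgs": []
    }
  }
}
EOF

  # Fail if the result is not valid JSON; Docker will not start with a broken file.
  python3 -m json.tool "$target" > /dev/null
}
```

After calling write_nvidia_daemon_json as root, restart Docker as described next.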

After editing daemon.json, restart the Docker service. You should then see nvidia as the default runtime:

sudo systemctl restart docker

sudo docker info | grep Runtime
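To check the result from a script instead of reading the grep output, you can ask Docker for its default runtime directly with a Go template passed to docker info --format. A minimal sketch; the function names are illustrative, and it assumes it runs as root (or as a user in the docker group):

```shell
# Print the Docker daemon's default runtime (for example "nvidia" or "runc").
docker_default_runtime() {
  docker info --format '{{.DefaultRuntime}}'
}

# Succeed only if the default runtime is nvidia; report the actual value otherwise.
require_nvidia_runtime() {
  local runtime
  runtime="$(docker_default_runtime)" || return 1
  if [ "$runtime" != "nvidia" ]; then
    echo "default runtime is '$runtime', expected 'nvidia'" >&2
    return 1
  fi
  echo "default runtime: nvidia"
}
```

A non-zero exit status from require_nvidia_runtime means the daemon.json change has not taken effect yet.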

Before installing K3s, please run the following commands:

sudo apt update

sudo apt upgrade -y

sudo apt install curl

This will make sure we are using the latest versions of the software stack.

To install K3s with Docker as its container runtime, use the following command:

curl -sfL https://get.k3s.io/ | INSTALL_K3S_EXEC="--docker" sh -s -

To check the installed version, execute the following command:

sudo kubectl version
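Because K3s was installed with --docker, the node should report Docker as its container runtime. The sketch below reads that value from the node status with a JSONPath query. It assumes a single-node cluster (hence .items[0]) and a root shell, since the K3s kubeconfig is readable only by root by default; the function names are hypothetical.

```shell
# Print the container runtime reported by the first node, e.g. docker://<version>.
node_container_runtime() {
  kubectl get nodes -o jsonpath='{.items[0].status.nodeInfo.containerRuntimeVersion}'
}

# Succeed only if the node runs its containers through Docker.
require_docker_backed_node() {
  local runtime
  runtime="$(node_container_runtime)" || return 1
  case "$runtime" in
    docker://*)
      echo "node runtime: $runtime"
      ;;
    *)
      echo "unexpected node runtime: '$runtime' (expected docker://...)" >&2
      return 1
      ;;
  esac
}
```

If this reports a containerd:// runtime instead, the pods would not pick up the nvidia default runtime configured in daemon.json.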

Now, let’s create a pod with a DeepStream SDK sample container and run the sample app.

Create a pod manifest file named pod.yaml with the text editor of your choice and add the following content:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: demo-pod
  labels:
    name: demo-pod
spec:
  hostNetwork: true
  containers:
    - name: demo-stream
      image: nvcr.io/nvidia/deepstream-l4t:5.0-20.07-samples
      securityContext:
        # Privileged mode gives the container access to the host's devices
        privileged: true
        allowPrivilegeEscalation: true
      # Keep the container alive so we can exec into it and run the sample app
      command:
        - sleep
        - "150000"
      workingDir: /opt/nvidia/deepstream/deepstream-5.0
      volumeMounts:
        # Host X11 socket directory, used for output on the attached display
        - mountPath: /tmp/.X11-unix/
          name: x11
        # USB webcam
        - mountPath: /dev/video0
          name: cam
  volumes:
    - name: x11
      hostPath:
        path: /tmp/.X11-unix/
    - name: cam
      hostPath:
        path: /dev/video0
```
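The manifest mounts /dev/video0 and /tmp/.X11-unix from the host. Because its hostPath volumes do not set a type, Kubernetes performs no checks on those paths before mounting them, so a missing webcam only shows up later as a failing sample app. A quick check beforehand can save some debugging. This is a minimal sketch; the function name is hypothetical and its default arguments are assumptions chosen to match the manifest.

```shell
# Check that the host paths mounted by demo-pod exist before creating the pod.
check_pod_host_paths() {
  local camera="${1:-/dev/video0}"
  local x11_dir="${2:-/tmp/.X11-unix}"
  local status=0

  # The webcam should appear as a character device (a V4L2 video node).
  if [ ! -c "$camera" ]; then
    echo "camera device not found: $camera" >&2
    status=1
  fi

  # The X11 socket directory is needed to show output on the attached display.
  if [ ! -d "$x11_dir" ]; then
    echo "X11 socket directory not found: $x11_dir" >&2
    status=1
  fi

  return "$status"
}
```

For example: check_pod_host_paths && sudo kubectl apply -f pod.yaml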

Create the pod from the pod.yaml manifest:

sudo kubectl apply -f pod.yaml

The pod uses the deepstream-l4t:5.0-20.07-samples container image, which must be pulled before the container can start.

Use the sudo kubectl get pods command to check the pod status. Please wait until it’s running.
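Instead of polling sudo kubectl get pods by hand, kubectl wait can block until the pod reports the Ready condition. A minimal sketch; the function name and the 900-second default timeout are assumptions (choose a value that covers the image pull on your connection), and like the other kubectl commands here it should run as root:

```shell
# Block until demo-pod reports the Ready condition, then show its status.
# The first start includes pulling the DeepStream image, so the default timeout is generous.
wait_for_demo_pod() {
  local timeout="${1:-900s}"
  kubectl wait --for=condition=Ready pod/demo-pod --timeout="$timeout" || return 1
  kubectl get pod demo-pod -o wide
}
```

kubectl wait exits with a non-zero status if the timeout expires first, so the function can also gate the next steps in a script.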

When the pod is deployed and running, open a shell inside it and unset the DISPLAY variable by using the following commands:

sudo kubectl exec -ti demo-pod -- /bin/bash

unset DISPLAY

The “unset DISPLAY” command should be run inside the pod.

To run the sample app inside the pod, use the following command:

deepstream-app -c /opt/nvidia/deepstream/deepstream-5.0/samples/configs/deepstream-app/source1_usb_dec_infer_resnet_int8.txt

It may take several minutes for the video stream to start.

Your video analysis app is now using the webcam as its input and showing live results on the display attached to the Jetson Nano board. To quit the app, press “q” in the terminal where it is running inside the pod.

To exit the pod, use the “exit” command.

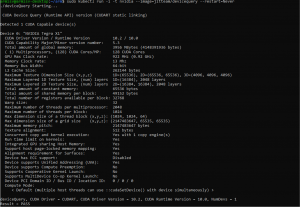
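The exec, unset and deepstream-app steps can also be combined into a single command by launching deepstream-app through env -u DISPLAY, which removes DISPLAY from that process's environment only. This is a sketch that assumes the pod name and config path used above and that the Ubuntu-based DeepStream image provides the standard env utility; the function name is hypothetical. The -it flags keep the terminal attached, so pressing “q” still quits the app.

```shell
# Run the DeepStream USB-camera sample inside demo-pod in one step.
# "env -u DISPLAY" starts deepstream-app without DISPLAY set, which is what
# the manual "unset DISPLAY" step does for the interactive shell.
run_deepstream_usb_sample() {
  local config=/opt/nvidia/deepstream/deepstream-5.0/samples/configs/deepstream-app/source1_usb_dec_infer_resnet_int8.txt
  kubectl exec -it demo-pod -- env -u DISPLAY deepstream-app -c "$config"
}
```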

To display detailed GPU hardware information for the Jetson Nano, run another pod with the following command:

sudo kubectl run -i -t nvidia --image=jitteam/devicequery --restart=Never
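With --restart=Never, the completed nvidia pod stays in the cluster after the command finishes. A minimal cleanup sketch, assuming the pod names used in this post: adding --rm to kubectl run deletes the deviceQuery pod automatically when the attached session ends, and --ignore-not-found makes the deletes safe to re-run. The function names are hypothetical; run them as root.

```shell
# Run the deviceQuery image once and delete its pod when the attached session ends.
run_device_query_once() {
  kubectl run nvidia --image=jitteam/devicequery --restart=Never --rm -i -t
}

# Remove the pods created in this walkthrough; pods that are already gone are not an error.
cleanup_demo_pods() {
  kubectl delete pod nvidia --ignore-not-found
  kubectl delete pod demo-pod --ignore-not-found
}
```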

Conclusion

As this post showed, running AI and analytics at the edge with the Arm-based NVIDIA Jetson Nano and K3s is seamless. These cost-effective devices can be deployed quickly and still perform video analytics and AI efficiently.

Feel free to reach out to us at sw-ecosystem@arm.com with your questions about running AI and analytics on Arm Neoverse-based devices.

How are you using AI on the edge? Join the conversation in the SUSE & Rancher Community.

Pranay Bakre is a Principal Solutions Engineer at Arm. He’s passionate about integrating cloud native technologies such as Kubernetes and Docker with Arm’s Neoverse platform. He enjoys working with partners to build solutions and demos on Arm-based cloud and edge products. He has authored multiple blogs on container orchestration and related use cases.
