How Public Cloud Adoption Enables Increased IT Automation

In today’s fast-paced digital landscape, businesses are increasingly turning to public cloud services to drive their digital transformation efforts. This shift is propelled by the public cloud’s ability to offer scalable, flexible, and cost-efficient IT resources on demand. As organizations strive to remain competitive, the agility provided by cloud services becomes not just an asset but a necessity. This transition is fundamentally changing how companies manage their IT infrastructure, making the journey towards public cloud adoption a key strategic move.

Amid this shift, IT automation has become a critical component of modern business operations. By automating routine and complex tasks, businesses can achieve greater efficiency, reduce human error, and free up valuable resources for strategic initiatives. In cloud environments, automation streamlines operations from deploying servers to scaling applications, so that IT infrastructure can adapt quickly to changing business needs.

The convergence of public cloud adoption and IT automation opens new possibilities for business innovation and agility. This article explores how embracing public cloud services not only facilitates but also amplifies IT automation capabilities. It examines the mechanisms by which public cloud platforms let organizations automate their IT operations more extensively, and how that automation translates into gains in operational efficiency, cost savings, and competitive advantage.

The Evolution of Cloud Computing

Cloud computing has undergone a remarkable evolution, transforming the way businesses deploy and manage IT resources. At its core, cloud computing is a way to share computing power and data storage over a network, reducing the need for extensive on-premises hardware. Many organizations approached it through private clouds, which let them harness cloud capabilities while keeping control over their IT environment. However, private clouds still require substantial upfront investment and ongoing maintenance, which limits their scalability and cost-effectiveness.

The advent of public cloud services marked a pivotal shift in this landscape. Giants such as Amazon Web Services, Microsoft Azure, and Google Cloud Platform began offering computing resources as a service, accessible over the internet. This model democratized access to high-powered computing resources by making them available on a pay-as-you-go basis. The move from owning and operating infrastructure to consuming it as a public cloud service heralded a new era of IT flexibility, scalability, and efficiency.

The impact of cloud computing on IT operations has been profound. Traditional IT tasks, such as provisioning servers, scaling applications, and managing data storage, can now be driven through APIs and automated rather than performed by hand. The public cloud has introduced a level of agility that was difficult to achieve with on-premises infrastructure, enabling businesses to respond to market demands and innovate faster. This shift can reduce operational costs and frees IT teams to focus on strategic initiatives that drive business growth. As cloud computing continues to evolve, its role as a catalyst for IT automation and business innovation becomes increasingly evident.

Understanding IT Automation

IT automation is the use of software to create repeatable instructions and processes that replace or reduce human interaction with IT systems. It is a cornerstone of modern IT operations, enabling businesses to streamline operations, reduce manual errors, and scale efficiently. Automation is crucial for managing complex, dynamic environments, especially in cloud computing, where resources can be adjusted as demand changes.

There are several types of IT automation, each addressing different aspects of IT operations. Infrastructure as Code (IaC) lets teams define and provision IT infrastructure through code rather than manual processes, improving speed and consistency. Continuous Integration/Continuous Deployment (CI/CD) automates the software release process, from building and testing each code change to deploying it, so that applications can be updated frequently and reliably. Automated monitoring tools continuously track system health, performance, and security, alerting teams to issues before they impact operations.
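
To make the monitoring idea concrete, here is a minimal Python sketch of one common alerting rule: fire only when a metric stays above a threshold for several consecutive samples, so that a single spike does not page anyone. The class name, threshold, and sample values are hypothetical; many monitoring services offer an equivalent "N consecutive breaches" setting in their own alarm configuration.

```python
from collections import deque


class ThresholdAlert:
    """Hypothetical alert rule: fire only when the last `breaches_needed`
    samples all exceed `threshold`."""

    def __init__(self, threshold: float, breaches_needed: int = 3) -> None:
        self.threshold = threshold
        self.breaches_needed = breaches_needed
        # Bounded window: appending beyond maxlen drops the oldest sample.
        self._recent = deque(maxlen=breaches_needed)

    def observe(self, value: float) -> bool:
        """Record one sample and report whether the alert should fire now."""
        self._recent.append(value > self.threshold)
        return len(self._recent) == self.breaches_needed and all(self._recent)


if __name__ == "__main__":
    rule = ThresholdAlert(threshold=80.0, breaches_needed=3)
    for minute, cpu in enumerate([55, 85, 70, 82, 88, 91, 60]):
        if rule.observe(cpu):
            print(f"minute {minute}: CPU above 80% for 3 samples in a row")
```

With these sample values the rule fires once, at minute 5, after 82, 88, and 91 percent arrive back to back; the isolated spike to 85 percent at minute 1 is ignored.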

The benefits of IT automation are multifaceted. It significantly reduces the time and cost associated with manual IT management, increases operational efficiency, and minimizes the risk of human error. For businesses, this means faster time-to-market for new features or products, improved service reliability, and the ability to allocate more resources towards innovation rather than maintenance. As such, IT automation is not just a technical improvement but a strategic asset that drives competitive advantage.

How Public Cloud Services Facilitate IT Automation

Public cloud services have emerged as a catalyst for IT automation, offering tools and features that significantly enhance the efficiency and agility of IT operations.

Scalability and Flexibility

One of the most compelling attributes of public cloud platforms is automated scaling. These platforms can adjust computing resources based on real-time demand, within limits the operator configures, so that applications keep the resources they need without manual intervention. This scalability helps optimize cost and supports uninterrupted service delivery.
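
A simple way to see how target-based automated scaling chooses a capacity is the proportional rule documented for the Kubernetes Horizontal Pod Autoscaler: multiply the current instance count by the ratio of observed to target utilization, round up, and clamp to configured bounds. The sketch below applies that rule to cloud instances purely as an illustration; managed autoscalers add cooldowns, warm-up periods, and tolerances, and each provider's exact algorithm may differ.

```python
import math


def desired_capacity(current: int, observed_util: float, target_util: float,
                     min_size: int, max_size: int) -> int:
    """Proportional target-tracking rule: scale the current instance count by
    observed/target utilization, round up, then clamp to [min_size, max_size]."""
    if current <= 0 or target_util <= 0:
        raise ValueError("current and target_util must be positive")
    proposed = math.ceil(current * observed_util / target_util)
    return max(min_size, min(max_size, proposed))


if __name__ == "__main__":
    # 4 instances at 90% CPU against a 60% target: scale out to 6.
    print(desired_capacity(4, 90, 60, min_size=2, max_size=12))
    # Load drops to 30%: scale in to 2, which is also the configured minimum.
    print(desired_capacity(4, 30, 60, min_size=2, max_size=12))
```

The `min_size` and `max_size` bounds are what keep automation from scaling to zero during a lull or running up an unbounded bill during a spike.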

The flexibility in resource allocation provided by public clouds further supports automation. IT teams can dynamically provision and decommission resources through automated scripts or templates, significantly reducing the time and complexity involved in managing IT infrastructure.
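
Template-driven provisioning and decommissioning usually rests on a compare-and-converge step: diff the declared resources against what is actually running, then create, update, or delete to close the gap. The Python sketch below shows only that planning step, assuming resources are represented as a flat dictionary of name to settings; the resource names and the `plan_changes` helper are hypothetical.

```python
def plan_changes(desired: dict, actual: dict) -> list:
    """Compare declared resources with running ones and return the actions
    needed to converge, as (action, resource name) tuples."""
    actions = []
    # Declared but not running: provision.
    for name in sorted(desired.keys() - actual.keys()):
        actions.append(("create", name))
    # Running but configured differently from the declaration: update.
    for name in sorted(desired.keys() & actual.keys()):
        if desired[name] != actual[name]:
            actions.append(("update", name))
    # Running but no longer declared: decommission.
    for name in sorted(actual.keys() - desired.keys()):
        actions.append(("delete", name))
    return actions


if __name__ == "__main__":
    desired = {"web-1": {"size": "small"}, "web-2": {"size": "small"},
               "db-1": {"size": "large"}}
    actual = {"web-1": {"size": "small"}, "db-1": {"size": "medium"},
              "old-batch": {"size": "small"}}
    for action, name in plan_changes(desired, actual):
        print(action, name)
```

Because the plan is computed from declarations rather than from a sequence of manual steps, re-running it after the changes are applied yields an empty list.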

Advanced Tools and Services

Public cloud providers offer a suite of advanced automation tools, such as AWS CloudFormation, Azure Resource Manager, and Google Cloud Deployment Manager. These tools let organizations describe their infrastructure as code in declarative templates and deploy it repeatably, automating the setup and management of cloud environments.
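
As an illustration of what such a template looks like, the Python sketch below builds a minimal AWS CloudFormation template in its JSON form, declaring one versioned S3 bucket tagged with its environment. The logical ID `ArtifactBucket`, the `environment` tag, and the `bucket_template` helper are hypothetical choices; generating templates from code is one option, and they are just as often written by hand in JSON or YAML.

```python
import json


def bucket_template(environment: str) -> dict:
    """Return a minimal CloudFormation template (JSON form) with one
    versioned S3 bucket. Logical ID and tag key are illustrative only."""
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Description": f"Artifact storage for the {environment} environment",
        "Resources": {
            "ArtifactBucket": {
                "Type": "AWS::S3::Bucket",
                "Properties": {
                    "VersioningConfiguration": {"Status": "Enabled"},
                    "Tags": [{"Key": "environment", "Value": environment}],
                },
            }
        },
    }


if __name__ == "__main__":
    # Print the template so it can be redirected to a file and deployed.
    print(json.dumps(bucket_template("staging"), indent=2))
```

Saved to a file, the output can be deployed with the AWS CLI, for example `aws cloudformation deploy --template-file bucket-staging.json --stack-name artifact-staging` (file and stack names here are hypothetical). The same function produces an identical structure for every environment, which is the consistency benefit IaC is meant to deliver.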

Moreover, public clouds feature robust integration capabilities with third-party automation tools. Whether it’s integrating with CI/CD pipelines for software deployment or leveraging specialized monitoring and management tools, the public cloud ecosystem is designed to support extensive automation strategies.

Public cloud services enable businesses to significantly enhance their IT automation capabilities through these mechanisms. By leveraging scalable resources, flexible management options, and comprehensive toolsets, organizations can automate a wide range of IT operations, from infrastructure provisioning to application deployment and monitoring, driving greater operational efficiency and innovation.

Cost Efficiency and Optimization

Public cloud services promote cost efficiency by combining pay-as-you-go pricing with reduced manual intervention in IT operations. Automation capabilities built into these platforms allocate and scale resources dynamically based on demand, which reduces overspending on underutilized resources. Through automated resource management, businesses can optimize their spending by paying only for the resources they actually use.
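
The arithmetic behind "pay only for what you use" is easy to sketch. The Python example below compares provisioning for the daily peak around the clock with running exactly the capacity each hour needs. The demand profile and the price of 10 cents per instance-hour are made up, and the model ignores scaling lag, minimum capacity, and commitment discounts.

```python
def fixed_capacity_cost(hourly_demand: list, cents_per_instance_hour: int) -> int:
    """Provision for the peak and keep that capacity running every hour."""
    return max(hourly_demand) * len(hourly_demand) * cents_per_instance_hour


def demand_following_cost(hourly_demand: list, cents_per_instance_hour: int) -> int:
    """Automation runs exactly the number of instances each hour needs."""
    return sum(hourly_demand) * cents_per_instance_hour


if __name__ == "__main__":
    # Hypothetical day: 2 instances suffice for 16 hours, 8 are needed for 8 hours.
    demand = [2] * 16 + [8] * 8
    fixed = fixed_capacity_cost(demand, 10)
    following = demand_following_cost(demand, 10)
    print(f"fixed: {fixed} cents, demand-following: {following} cents")
```

In this scenario fixed capacity consumes 192 instance-hours (1,920 cents) against 96 instance-hours (960 cents) when capacity follows demand, so the spikier the workload, the larger the gap.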

Examples of cost optimization include automated scaling during peak usage times to maintain performance without permanent investment in high-capacity infrastructure, and automated shutdown of resources during off-peak hours to save costs. Additionally, automated backup and data lifecycle policies help manage storage costs. These automated processes help businesses maintain optimal service levels while minimizing expenses, showcasing the financial advantage of leveraging public cloud services for IT automation.
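
Off-peak shutdown is typically driven by a schedule that an automation job checks periodically. A minimal Python sketch, assuming a hypothetical policy of running non-production resources only from 08:00 to 19:00, Monday to Friday; the start/stop API calls a real job would make are left out.

```python
from datetime import datetime, time, timedelta

BUSINESS_START = time(8, 0)     # hypothetical schedule
BUSINESS_STOP = time(19, 0)
WORKDAYS = frozenset(range(5))  # Monday (0) through Friday (4)


def should_run(now: datetime) -> bool:
    """Return True if a non-production resource should be running at `now`."""
    return now.weekday() in WORKDAYS and BUSINESS_START <= now.time() < BUSINESS_STOP


def weekly_running_hours(week_start: datetime) -> int:
    """Count the hourly checks in one week at which the schedule keeps the resource on."""
    return sum(should_run(week_start + timedelta(hours=h)) for h in range(7 * 24))


if __name__ == "__main__":
    hours_on = weekly_running_hours(datetime(2024, 3, 4))  # a Monday at 00:00
    print(f"{hours_on} of {7 * 24} hours running")
```

Under this schedule the resource runs 55 of 168 hours a week, so an hourly-billed instance accrues roughly a third of its always-on compute charges; attached storage is typically still billed while the instance is stopped.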

Overcoming Challenges in Cloud-Based IT Automation

While cloud-based IT automation offers myriad benefits, it also presents specific challenges that businesses must navigate. Two of the most significant hurdles are ensuring security and compliance and managing the complexity of automation workflows. By addressing these challenges effectively, organizations can harness the full potential of cloud-based IT automation.

Security and Compliance

Addressing Security Concerns with Automated Policies: Security in the cloud is paramount, especially when automation tools are implemented. Automated security policies enable organizations to consistently enforce security standards across their cloud environments. These policies can automatically detect and remediate non-compliant configurations or suspicious activities, ensuring a proactive approach to cloud security.
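
The detect-and-remediate pattern can be sketched in a few lines of Python: each policy pairs a check with a fix, and a sweep evaluates every policy against every resource. The `BucketConfig` fields and the two rules are hypothetical stand-ins for real provider settings, and the remediation here only flips an in-memory flag where a real tool would call the provider's API.

```python
from dataclasses import dataclass


@dataclass
class BucketConfig:
    """Hypothetical, simplified view of a storage bucket's settings."""
    name: str
    public_read: bool
    encrypted: bool


# Each policy pairs a description, a check, and a remediation.
# In a real setup the remediation would call the provider's API.
POLICIES = [
    ("block public read access",
     lambda b: not b.public_read,
     lambda b: setattr(b, "public_read", False)),
    ("require encryption at rest",
     lambda b: b.encrypted,
     lambda b: setattr(b, "encrypted", True)),
]


def enforce(buckets, remediate=True):
    """Check every bucket against every policy; fix violations if `remediate`
    is set. Returns (bucket name, policy description) findings."""
    findings = []
    for bucket in buckets:
        for description, check, fix in POLICIES:
            if not check(bucket):
                findings.append((bucket.name, description))
                if remediate:
                    fix(bucket)
    return findings


if __name__ == "__main__":
    fleet = [BucketConfig("logs", public_read=True, encrypted=False),
             BucketConfig("assets", public_read=False, encrypted=True)]
    print(enforce(fleet))  # first sweep finds and fixes two issues on "logs"
    print(enforce(fleet))  # second sweep finds nothing
```

Setting `remediate=False` turns the same sweep into a report-only audit, which is a common way to roll out a new policy before letting it change anything.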

Ensuring Compliance in an Automated Public Cloud Environment: Compliance in an automated setting requires a structured approach to managing and monitoring the cloud infrastructure. Cloud management platforms that offer built-in compliance frameworks can significantly ease this burden. These tools not only automate compliance checks but also provide detailed reports for auditing purposes, making it far easier for businesses to demonstrate that they meet regulatory standards.
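
Automated checks only help with audits if their results are recorded in a form auditors can read. A minimal Python sketch of turning raw check results into a per-control summary, assuming results arrive as (resource, control, passed) tuples; the control IDs are placeholders, not identifiers from any real compliance framework.

```python
from collections import defaultdict
from datetime import date


def compliance_report(results, run_date: date) -> str:
    """Summarize (resource_id, control_id, passed) tuples per control."""
    summary = defaultdict(lambda: {"passed": 0, "failed": []})
    for resource_id, control_id, passed in results:
        if passed:
            summary[control_id]["passed"] += 1
        else:
            summary[control_id]["failed"].append(resource_id)
    lines = [f"Compliance report {run_date.isoformat()}"]
    for control_id in sorted(summary):
        entry = summary[control_id]
        status = "PASS" if not entry["failed"] else "FAIL"
        lines.append(f"{control_id}: {status} ({entry['passed']} passed, "
                     f"{len(entry['failed'])} failed: {', '.join(entry['failed']) or '-'})")
    return "\n".join(lines)


if __name__ == "__main__":
    results = [("vm-1", "CTRL-ENC", True), ("vm-2", "CTRL-ENC", False),
               ("vm-1", "CTRL-LOG", True)]
    print(compliance_report(results, date(2024, 5, 1)))
```

Listing the failing resource IDs next to each control, rather than only a pass rate, gives auditors and remediation teams something they can act on directly.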

Managing Complexity: Strategies for Simplifying Automation Workflows

As IT environments become increasingly complex, simplifying automation workflows is essential. One effective strategy is adopting a modular approach to automation, where workflows are broken down into smaller, manageable components. This not only makes the automation process more manageable but also enhances flexibility and scalability.
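
The modular approach can be sketched in Python as a list of small, named steps that each receive and return a shared context, run in order by a generic runner that stops at the first failure and records where it happened. The step functions (`provision`, `configure`, `deploy`) are hypothetical placeholders that only update a dictionary; in practice each would wrap a real provisioning or deployment call.

```python
from typing import Callable

Step = Callable[[dict], dict]


def run_workflow(steps: list[tuple[str, Step]], context: dict) -> dict:
    """Run small, independent steps in order, passing a shared context along.
    Stops at the first failing step and records which one failed."""
    context = dict(context)  # do not mutate the caller's dictionary
    completed = []
    for name, step in steps:
        try:
            context = step(context)
        except Exception as exc:
            context["failed_step"] = name
            context["error"] = str(exc)
            break
        completed.append(name)
    context["completed"] = completed
    return context


# Hypothetical steps: each only updates the context dictionary.
def provision(ctx: dict) -> dict:
    return {**ctx, "host": f"{ctx['app']}-01"}


def configure(ctx: dict) -> dict:
    return {**ctx, "config": {"port": 8080}}


def deploy(ctx: dict) -> dict:
    if "config" not in ctx:
        raise RuntimeError("configure must run before deploy")
    return {**ctx, "deployed": True}


if __name__ == "__main__":
    result = run_workflow([("provision", provision), ("configure", configure),
                           ("deploy", deploy)], {"app": "shop"})
    print(result["completed"])
```

Because each step is a separate function, steps can be tested, reused, and reordered on their own, and a failure report names the exact component to fix.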

Tools and Best Practices for Managing Automated Systems

Leveraging the right tools is crucial for managing automated systems efficiently. Tools that offer visual workflow designers, integration capabilities, and scalable architectures can significantly reduce the complexity of automation. Additionally, adhering to best practices such as continuous monitoring, regular updates, and thorough testing of automation scripts ensures the smooth functioning of automated systems.
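
Testing automation scripts is much easier when each step is idempotent (running it again once the target state is reached changes nothing) and offers a dry-run mode that reports what it would change. These are common conventions rather than requirements stated above. The Python sketch assumes a set of package names as a stand-in for real system state, and `ensure_package` is a hypothetical helper.

```python
def ensure_package(installed: set, package: str, dry_run: bool = False) -> bool:
    """Idempotent step: make sure `package` is present in `installed`.
    Returns True if a change was made (or, in dry-run mode, would be made)."""
    if package in installed:
        return False  # already in the target state: nothing to do
    if not dry_run:
        installed.add(package)
    return True


if __name__ == "__main__":
    state = {"openssh"}
    print(ensure_package(state, "nginx", dry_run=True))  # would change, state untouched
    print(ensure_package(state, "nginx"))                # changes state
    print(ensure_package(state, "nginx"))                # second run: no change
```

A test suite can then assert three things cheaply: dry-run leaves state untouched, the first real run converges, and a repeat run reports no change.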

By tackling these challenges head-on, businesses can secure and streamline their cloud-based IT automation efforts, leading to enhanced operational efficiency and agility.

Conclusion

The exploration of cloud computing’s evolution and the strategic integration of IT automation has underscored the immense benefits that public cloud services offer to today’s enterprises. By harnessing the scalability, cost-effectiveness, and rapid innovation that public cloud platforms provide, organizations can significantly enhance their IT automation efforts. This leads to remarkable improvements in operational efficiency and business agility. Looking ahead, the synergy between IT automation and cloud computing is poised to be a cornerstone of business innovation, unlocking new avenues for growth and competitiveness.

Despite the challenges that may arise, the path to adopting public cloud services has been made smoother by the availability of robust strategies and tools. We are at the cusp of a technological transformation that will redefine the paradigms of IT operations and infrastructure management. In this pivotal moment, SUSE stands ready to guide businesses through their cloud journey with cutting-edge Linux products and open source solutions designed for seamless public cloud integration and efficient IT automation.

SUSE encourages businesses to leverage public cloud solutions to bolster their IT automation capabilities. With our expertise and innovative solutions, companies can not only navigate the complexities of cloud adoption but also harness the full potential of cloud computing and automation. Partner with SUSE to future-proof your business, ensuring you are well-equipped to thrive in the ever-evolving digital landscape.

Frequently Asked Questions (FAQ)

What Is Public Cloud Adoption?

Public cloud adoption refers to the process by which organizations transition their IT resources, applications, and operational processes to cloud services that are managed and provided by third-party companies. This move is driven by the desire to enhance flexibility, scalability, and cost-efficiency. Unlike private clouds, which are dedicated to a single organization, public clouds serve a multitude of clients, offering resources like servers and storage over the Internet. This model allows businesses to avoid the upfront cost and complexity of owning and maintaining their own IT infrastructure.

How Does Public Cloud Adoption Enhance IT Automation?

Public cloud adoption significantly enhances IT automation by providing scalable resources, advanced toolsets, and comprehensive managed services. These features facilitate the automatic scaling of resources to meet demand, streamline software deployment processes, and manage routine tasks such as backups, updates, and security checks with minimal human intervention. The inherent flexibility and breadth of services offered by public clouds enable organizations to automate their IT operations more effectively, leading to increased efficiency and reduced operational costs.
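
Backups are a good example of a routine task that automation handles well, because the retention decision is a simple rule applied on a schedule. A minimal Python sketch, assuming a hypothetical policy: keep every snapshot from the last 7 days, and always keep the 3 newest even if they are older. Listing and deleting real snapshots through a provider's API is outside the sketch.

```python
from datetime import date, timedelta


def snapshots_to_delete(snapshot_dates: list[date], today: date,
                        keep_days: int = 7, keep_min: int = 3) -> list[date]:
    """Return snapshots that fall outside the hypothetical retention policy,
    newest first. The `keep_min` newest snapshots are never deleted."""
    newest_first = sorted(snapshot_dates, reverse=True)
    cutoff = today - timedelta(days=keep_days)
    return [d for i, d in enumerate(newest_first)
            if i >= keep_min and d < cutoff]


if __name__ == "__main__":
    june = [date(2024, 6, 1) + timedelta(days=n) for n in range(30)]
    doomed = snapshots_to_delete(june, today=date(2024, 6, 30))
    print(f"delete {len(doomed)} snapshots, newest to go: {doomed[0]}")
```

The `keep_min` guard matters in practice: if backups silently stop running, an age-only rule would eventually delete every remaining snapshot.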

What Are the Key Benefits of IT Automation for Businesses?

The key benefits of IT automation for businesses include enhanced efficiency, reduced operational costs, improved reliability, and the ability to deploy services and applications faster. Automation reduces the need for manual intervention in routine tasks, thereby minimizing the risk of human error and ensuring operations run smoothly and consistently. It also enables organizations to respond more quickly to market changes and customer needs by facilitating rapid deployment of resources and applications.

Can Small Businesses Benefit from Public Cloud and IT Automation?

Absolutely. Small businesses stand to gain significantly from public cloud and IT automation. Because cloud services are typically billed on a pay-as-you-go basis, businesses pay only for the resources they use, and those resources can be scaled up or down based on demand. This flexibility makes cloud services and automation highly cost-effective, even for small enterprises, allowing them to leverage advanced technologies that were previously accessible only to larger organizations. Automation can further reduce operational costs by minimizing manual tasks, allowing small business owners to focus more on strategic growth areas.

How Do Public Cloud Services Ensure Security and Compliance in Automated Environments?

Public cloud providers invest heavily in security measures and compliance standards to protect data and ensure privacy in automated workflows. These measures include physical security controls at data centers, encryption of data in transit and at rest, and sophisticated access control mechanisms. Additionally, major providers maintain certifications for a broad range of international and industry-specific regulations and standards. Security in the public cloud is a shared responsibility, however: the provider secures the underlying infrastructure, while the customer remains responsible for configuring its own workloads, access policies, and data handling so that they meet legal requirements.

What Are Some Common Challenges in Implementing IT Automation via Public Cloud?

Common challenges in implementing IT automation via the public cloud include navigating the complexity of cloud services, bridging skill gaps within the organization, and addressing security concerns. Organizations may struggle with selecting the right tools and services that match their specific needs or integrating new cloud services with existing infrastructure. To overcome these challenges, businesses can invest in training for their staff, seek guidance from cloud consultants, and implement robust security practices and tools designed for cloud environments.

How Can Companies Get Started with Public Cloud Adoption and IT Automation?

Companies can start with public cloud adoption and IT automation by first assessing their business needs and identifying which processes and workloads could benefit most from moving to the cloud. The next step involves selecting the right cloud provider that aligns with their requirements in terms of services, security, and compliance. Businesses should then start small, moving a single workload or process to the cloud to gain familiarity with the environment before gradually implementing automation tools and practices across their operations.

Are There Any Industry-Specific Considerations for Public Cloud Adoption and IT Automation?

Yes, there are industry-specific considerations for public cloud adoption and IT automation. Regulatory compliance, data sensitivity, and specific operational needs vary significantly across sectors. For instance, healthcare organizations in the United States must ensure that their use of cloud services complies with HIPAA regulations, while financial services firms have to meet strict data security and privacy standards. Understanding these nuances and selecting cloud services that offer the necessary controls and compliance certifications is crucial for successful adoption in any industry.

What Is the Future of Public Cloud and IT Automation?

The future of public cloud and IT automation is likely to be shaped by the integration of artificial intelligence (AI) and machine learning, the continued growth of serverless computing, and an increased focus on sustainability. AI and machine learning are expected to automate more complex tasks and decision-making processes, while serverless computing lets businesses run applications without managing the underlying servers, which can further reduce costs and operational overhead. Additionally, the cloud industry is moving toward greener practices, with providers focusing on reducing energy consumption and using renewable energy sources.

How Does SUSE Support Public Cloud Adoption and IT Automation?

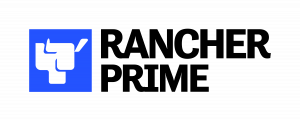

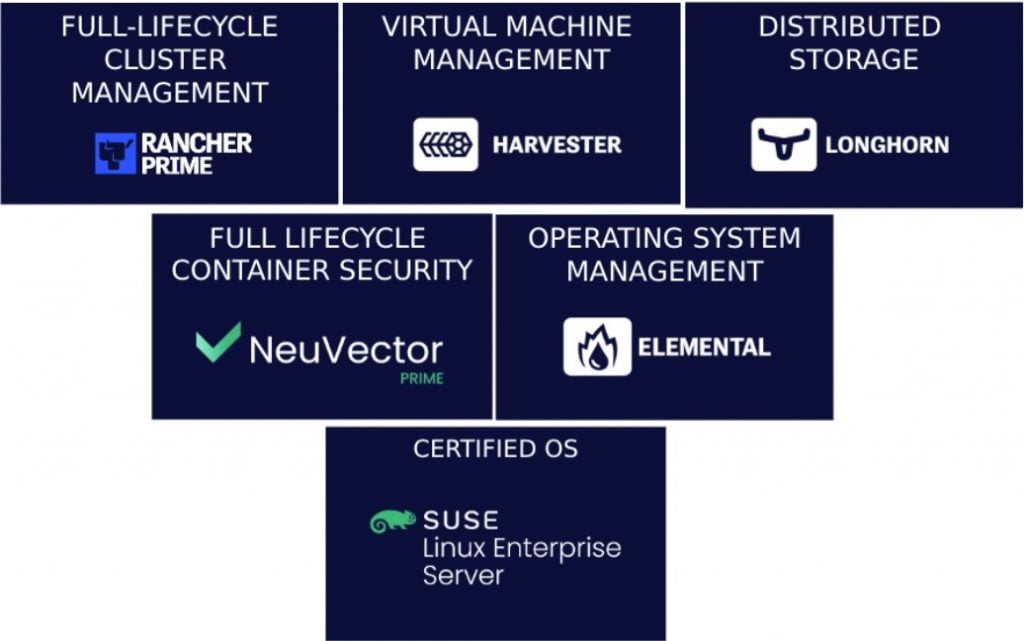

SUSE offers a range of solutions and services designed to facilitate easy and secure public cloud adoption and enhance IT automation capabilities for businesses. These include scalable Linux operating systems, Rancher for managing Kubernetes clusters and containerized workloads, and tools for cloud-native application development. SUSE's solutions are designed to be open and interoperable, supporting a variety of cloud providers and ensuring that businesses can leverage the full benefits of public cloud and automation without being locked into a single vendor.
