Whether the goal is cutting maintenance, electricity and storage costs, increasing reliability or reducing climate impact, organizations have plenty of reasons to accelerate their cloud migration. Cloud computing is probably the most significant driver of digital transformation of the last decade.

What are the Benefits of Cloud Computing?

So that we all start with the same baseline knowledge: just what is cloud computing? Simply put, it’s on-demand computer system resources, anything from data storage to compute power. Over the last 15 years, we’ve seen a growing trend of organizations moving away from building, securing and maintaining their own on-premise data centers at an exorbitant cost, and toward “time-sharing” data centers via the internet.

In a nutshell, cloud computing is a function. The “cloud” is an esoteric name for the environments where applications and workloads run. And its purpose is to enable developers to build and run applications in a way that they can deliver value faster.

Did you know that 90 percent of all data has been created in the last two years? More data means more storage. And certainly, always-on consumers demand “five-nines” or 99.999 percent uptime. Outsourcing the management of your data storage and processing dramatically increases your reliability at a lower cost.
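To make “five-nines” concrete, the short sketch below converts an availability target into the downtime it allows per year. The function name `allowed_downtime_minutes` is ours, chosen for illustration, and a 365-day year is assumed.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # assumption: a non-leap year

def allowed_downtime_minutes(availability_percent: float) -> float:
    """Return the minutes of downtime per year permitted by an availability target."""
    unavailable_fraction = 1 - availability_percent / 100
    return MINUTES_PER_YEAR * unavailable_fraction

for target in (99.9, 99.99, 99.999):
    minutes = allowed_downtime_minutes(target)
    print(f"{target}% uptime allows about {minutes:.2f} minutes of downtime per year")
```

Under these assumptions, five-nines leaves a little over five minutes of downtime for the whole year, which is why it is such a demanding target.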

But the benefits of cloud computing don’t just stop there.

Cloud computing is flexible, allowing companies to scale up and down in response to demand. This means you pay as you go, controlling not only uptime but costs. And because there are more than 800 cloud providers across dozens of regions, you gain even more reliability. Let’s say there’s a power failure in one region. Your cloud instance will fail over to another region if you pay for multi-region support. Here’s the alternative: imagine that all your servers were located in one region or zone that you control and there was a natural disaster or human-made security breach. This could take down even the most established businesses.
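The failover idea can be sketched in a few lines. This is only an illustration, not how any particular provider implements it: the `Region` class, the region names and the `pick_region` helper are all hypothetical, and the health flag is supplied by hand where a real system would run health checks.

```python
from dataclasses import dataclass
from typing import Sequence

@dataclass
class Region:
    name: str      # hypothetical region label
    healthy: bool  # stand-in for the result of a real health check

def pick_region(regions: Sequence[Region]) -> Region:
    """Return the first healthy region, scanning in priority order."""
    for region in regions:
        if region.healthy:
            return region
    raise RuntimeError("no healthy region available")

# The primary region has lost power, so traffic goes to the secondary one.
regions = [Region("region-a", healthy=False), Region("region-b", healthy=True)]
print(pick_region(regions).name)
```

With a single region in the list, an outage leaves nothing to fall back on, which is the risk the paragraph above describes.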

Moreover, according to Salesforce, just by switching to the cloud, 94 percent of businesses saw a security improvement, while 91 percent said it made for easier governance and compliance. After all, it’s safer and easier to manage access control remotely instead of issuing snatchable hardware.

There are also many business benefits to cloud computing. The most obvious is CAPEX cost savings. With the cloud, businesses see anywhere between 30 and 50 percent savings. After all, you’re sharing the cost of the data center, staff, electricity, maintenance and more with many other organizations.

Cloud computing also is proven to increase collaboration across silos and devices and with external partners to release features faster and stay more competitive. In a year like 2020, with a sudden move to a mostly remote-first world, the cloud was essential not just for collaboration but keeping businesses up and running.

Plus, data centers aren’t just about the hardware — they are mostly about the data. By having an external cloud provider — or multiple — managing your data storage and computing, you also leverage their cloud analytics to gain faster access to data-backed insights and actions.

Finally, no matter what, things break, even when everything is under your control. Cloud computing delivers faster recovery times and multi-site availability at a fraction of the cost of conventional disaster recovery.

For all these reasons, spending on public cloud IT infrastructure surpassed spending on traditional IT infrastructure for the first time in 2020, according to the International Data Corporation.

With all this in mind, here’s our guide to cloud computing, to help give your organization that edge — perhaps literally — in 2021.

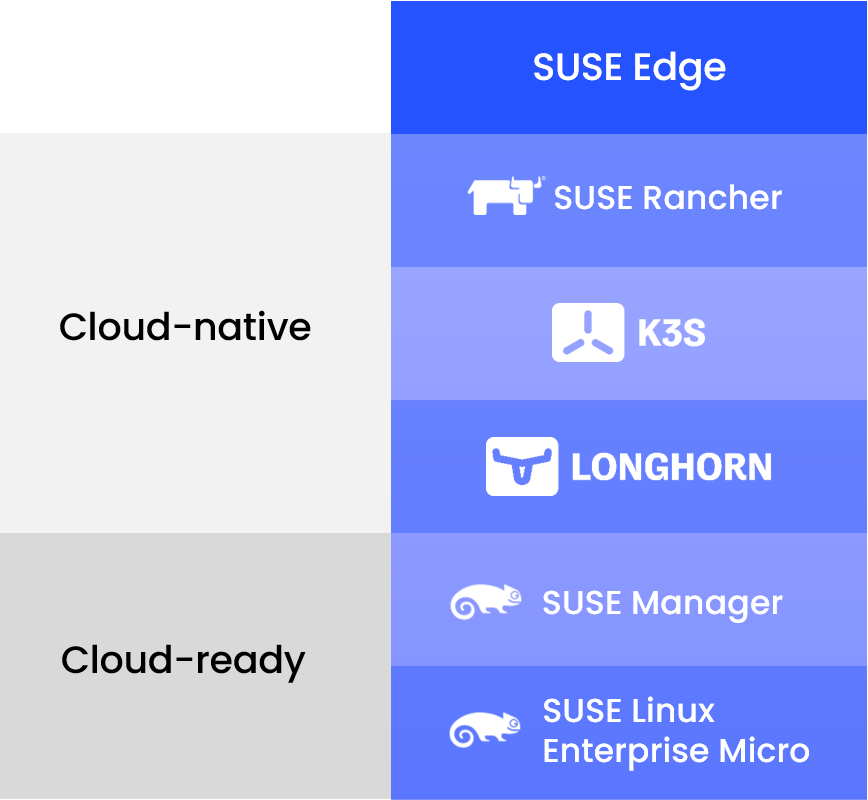

Becoming Cloud Native in 2021

What is cloud native other than a buzzword? It’s the mentality, methodology and technology that exploits all these benefits of cloud computing. Some of the most successful companies nowadays were barely heard of a decade ago — Netflix, Uber, WeChat, Pinterest. Each of these has leveraged the cloud from the start to create distributed, flexible, scalable, insight-driven, agile organizations that deploy hundreds of times a day.

Other very agile companies, like Spotify and GE, began with monolithic architectures but have increasingly moved toward cloud native.

Some companies have moved back and forth over the last decade or have chosen the public cloud for any greenfield applications, but then will go either private cloud or old-school, on-premise for legacy architecture that they are keeping.

Cloud native comes with both a technical focus (cue more buzzwords like microservices and Kubernetes) and a cultural one that emphasizes a great degree of autonomy. Following Conway’s Law, decentralized cloud-native architecture is usually a reflection of a decentralized workflow that’s committed to continuous delivery and product ownership.

SUSE CTO of Enterprise Cloud Products Rob Knight says cloud native “really is a paradigm shift in not only how we develop applications and workloads, but how do businesses function in their entirety.”


The trend in continuous delivery sees IT becoming the core of all businesses.

Knight continues, “What is cloud computing? It’s about abstracting and pulling infrastructure and hardware and presenting that in an easier-to-use way.”

For Knight, a cloud native world not only puts digital at the center of any business transformation but allows more and more people inside and outside of IT to take part in that discussion. By bringing the traditional bottlenecks of compliance and security to the table earlier on, cloud becomes an easier conduit for both safer systems and smoother collaboration.

What is the Public Cloud?

While analysts expected the public cloud to do very well in 2020, its ability to quickly scale up to support businesses saw it skyrocket beyond all expectations. Not surprisingly, this was especially true in the second quarter, when everything from conferences to weddings to school shifted online.

Public cloud has a slightly unnerving name, but it doesn’t mean shared data. It means a third-party provider — predominantly Amazon Web Services (AWS), Microsoft Azure or Google Cloud Platform (GCP) — offers cloud services, on-demand, either over the public internet or a dedicated network.

Usually, it’s done with a single tenancy, which means a single instance like a server, available per customer, which brings more security reassurances. However, because it saves on financial and environmental impact, multi-tenant public cloud computing is increasingly used for staging, testing and development. It’s also popular for massive machine learning and quantum computing workloads.

The public cloud is popular with distributed international companies because the leading cloud providers can guarantee your uptime across the globe. Since it’s pay-as-you-go, the public cloud responds well to unpredictable customer usage and scalability. Because you’re pooling resources in the public cloud, many see it as a cheaper option than others that we’ll address below. In theory, you only pay for what you use, but the main cloud providers also offer their excess compute at a discount. The risk of the public cloud and especially of using this on-sale excess compute is that it could run out.
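A small worked comparison shows why pay-as-you-go suits unpredictable usage. Every number here is made up for illustration: the hourly demand, the price per server-hour and both function names are assumptions, not figures from any provider.

```python
def peak_provisioned_cost(hourly_demand, cents_per_server_hour):
    """Cost of keeping enough servers for the busiest hour running all the time."""
    return max(hourly_demand) * len(hourly_demand) * cents_per_server_hour

def pay_as_you_go_cost(hourly_demand, cents_per_server_hour):
    """Cost of paying only for the servers actually in use each hour."""
    return sum(hourly_demand) * cents_per_server_hour

# Hypothetical demand: servers needed in each of six hours, with one spike.
demand = [2, 2, 3, 10, 4, 2]
rate = 10  # hypothetical price in cents per server-hour

print("provisioned for peak:", peak_provisioned_cost(demand, rate), "cents")
print("pay-as-you-go:", pay_as_you_go_cost(demand, rate), "cents")
```

With this spiky demand, provisioning for the peak costs 600 cents against 230 cents for pay-as-you-go. The gap narrows as demand flattens out, so the advantage depends on how uneven your usage really is.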

You could also find yourself locked into one vendor without a key.

One way around this is to use a Kubernetes management platform like Rancher to offer you more control over your public cloud and the opportunity to go multi-vendor. The goal is to make sure you can work with any cloud provider so you can move when you want to.

What is a Private Cloud?

Last spring’s growth wasn’t just in the public cloud. A slower but significant year-over-year growth saw $5 billion spent on enterprise on-premise private cloud.

Sometimes called an internal cloud or a corporate cloud, these are private cloud environments dedicated to one user, usually within their firewall. Private cloud can be managed on-premise, but nowadays, like the public cloud, it is usually run at vendor-operated data centers, which offer a private cloud for a single customer with isolated access. The big names here are often associated with traditional IT infrastructure and hardware, including Dell, Hewlett Packard Enterprise, VMware, Oracle, Cisco, IBM and Microsoft.

The private cloud still brings the competitive advantage of cloud computing, but it comes with some drawbacks.

Private cloud definitely plays a valuable role in many cloud strategies. Unless you are leveraging synthetic data or data masking, data protection laws make it challenging to put personally identifiable information onto a public cloud. And the private cloud certainly makes sense if you have a stable demand and predictable scalability. There are also sensible use cases, like at a factory where you may have a specific locational focus. Of course, you may want to then share the insights from that location via the public cloud, making a hybrid or multi-cloud model more attractive.

Understanding Hybrid Cloud Versus Multi-Cloud

Just like the car, the hybrid cloud is an increasingly popular option because it brings the best of both private and public cloud. It allows sensitive customer data to remain on your private cloud but enables autoscaling to the public cloud if there’s a sudden traffic spike. This is called cloud bursting.
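Cloud bursting boils down to a simple rule: fill the private cloud first and send only the overflow to the public cloud. The sketch below assumes capacity is measured in requests and uses a hypothetical `route_requests` helper. A real setup would also have to decide which workloads are allowed to leave the private cloud at all.

```python
def route_requests(incoming: int, private_capacity: int) -> dict:
    """Split incoming requests between the private cloud and public overflow."""
    if incoming < 0 or private_capacity < 0:
        raise ValueError("request counts and capacity must be non-negative")
    on_private = min(incoming, private_capacity)
    burst_to_public = incoming - on_private
    return {"private": on_private, "public": burst_to_public}

# Normal traffic stays entirely on the private cloud.
print(route_requests(incoming=80, private_capacity=100))
# A sudden spike bursts the excess to the public cloud.
print(route_requests(incoming=250, private_capacity=100))
```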

Here private clouds connect to the public cloud, usually with multiple service providers. This avoids vendor lock-in, controls cost and maintains flexibility.

Hybrid is still a growing trend, so there’s no one way to run it. Some companies will run the front-end of their websites and apps in the public cloud through a firewall. Other orgs want their resilience on-premise in case they ever want to move workloads, in case something goes down in the public cloud or they just want to change providers.

Leveraging the hybrid cloud allows for a broader set of skills on a DevOps team. You can employ some teammates that really can deep-dive into the niches of maintaining infrastructure, while others can just deploy to the public cloud without having to have experience with a specific provider.

There’s a similar but nuanced option: multi-cloud. Like hybrid, multi-cloud draws on different providers, and it can also include a mix of on-premise or private cloud.

Multi-cloud is common in retail and e-commerce when they need massive scalability around the holidays but don’t want their customer database in the public cloud. U.S. retail giant Walmart does what they refer to as a “hybrid multi-cloud fog approach.” They have a private cloud at each physical store and manage some workloads in the public cloud backed by multiple providers.

Which Cloud Strategy is Better?

Cloud strategy is much more of an operations, security and fiduciary concern. In the end, your developers aren’t so fussed about what type of cloud they are working with as long as they have uptime. Devs just need frameworks in place to get coding and a place to push their apps to. Irrespective of cloud strategy, your development team just needs the assurance that the platform team can provide a platform that abstracts the infrastructure and pushes it to the cloud.

So no cloud strategy is better than another. That’s why organizations are increasingly choosing hybrid and other flexible approaches as they transition from traditional architecture and experiment with what works best for their teams.

Who Manages What: A Look at SaaS, PaaS, IaaS and FaaS

Cloud computing includes four types of services that can run on public, private or hybrid cloud: SaaS, FaaS, PaaS and IaaS. The difference among these comes down to who is responsible for what: the organizations that own the data or the cloud providers that store it. And this depends on the available talent, cash and desire to own your own infrastructure.

Software as a Service (SaaS)

SaaS is often referred to just as business software. Salesforce is the old standard in this grouping, but Zoom, Dropbox, Office 365 and the Google App Suite are just as omnipresent from startups through enterprises. It’s a fully managed experience in the cloud, sitting at the top of your software stack. You just use the tools; they run every aspect of it.

Platform as a Service (PaaS)

With PaaS, the cloud customer manages its applications and data, while the cloud provider handles the runtime, middleware, operating system, virtualization, networking, servers and storage. PaaS offers a complete web development environment and is typically hardware agnostic.

Perhaps most importantly, PaaS abstracts and automates out some of the significant complexity that comes with Kubernetes. In this way, it also cuts out the cost of maintaining all of the above, including needing to recruit highly specialized DevOps architect talent.

Teams still get a sense of control, while the cloud provider is responsible for security automation and autoscaling. PaaS takes away these administrative worries so teams can just focus on delivering that business value.

Infrastructure as a Service (IaaS)

IaaS and public cloud are rather synonymous. This is when an organization still maintains control of most of its build but leaves the servers, uptime and data storage to the cloud providers.

Function as a Service (FaaS) or Serverless Compute

Netflix, while managing hundreds of services in an agile and seemingly autonomous fashion, famously offers guardrails. Context, not control. Because with increasing autonomy comes increasing complexity — of systems and people. Netflix leadership provides a pathway and guidance while enabling the optimal amount of trust in its developers.

Essentially, over the last 20 years, the pendulum has swung so far from Waterfall quarterly releases on legacy architecture all the way left to individuals releasing multiple times a day. We are now witnessing a trending swing toward a middle ground.

A function is a bit like a miniservice or even macroservice. These small units of code are designed to do only one thing, usually acting on an event. A bit smaller than a nanoservice, microservice or container, they are the smallest unit of execution in wide use today.

FaaS or serverless comes with an understanding that you need flexibility, but also, if it ain’t broke, don’t fix it. A company like new bank Monzo has thousands of functions and microservices in the cloud — choosing to use the best approach for each use case. Sometimes you’ll want to abstract that underlying infrastructure in a PaaS model, but other times you will still want access to the base infrastructure.

FaaS is built on event-driven architecture — if x, then y. Event-driven architecture is part of the broader industry trend of separating components and streamlining processes so that releases are faster and organized around end-user activity. It’s all about chaining together functions or services to better serve business needs by publishing, listening to and reacting to events.

In a FaaS setup, you upload your function code and attach an event source to it. Then the cloud provider ensures that your functions will always be available. It autoscales with stateless, ephemeral functions.
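The “upload a function, attach an event source” flow can be imitated in plain Python. This is a minimal in-process sketch, not any provider’s API: the `on_event` decorator, the `publish` function and the `image.uploaded` event name are all hypothetical stand-ins for what a cloud platform would do for you.

```python
from collections import defaultdict
from typing import Callable, Dict, List

# Hypothetical in-process stand-in for a provider's event routing table.
_handlers: Dict[str, List[Callable[[dict], dict]]] = defaultdict(list)

def on_event(event_type: str):
    """Decorator that attaches a function to a named event source."""
    def register(func):
        _handlers[event_type].append(func)
        return func
    return register

@on_event("image.uploaded")
def make_thumbnail(event: dict) -> dict:
    # Stateless: everything the function needs arrives in the event payload.
    return {"thumbnail_for": event["file"], "size": "128x128"}

def publish(event_type: str, payload: dict) -> list:
    """Invoke every function attached to this event type and collect the results."""
    return [handler(payload) for handler in _handlers[event_type]]

print(publish("image.uploaded", {"file": "cat.png"}))
```

The function keeps no state between calls, so a platform is free to run as many copies as the event volume requires and none when nothing is happening.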

Serverless typically comes with the lowest runtime costs and, since it shuts down servers when not in use, it’s also the best for the environment. It allows for speedy development and rapid autoscaling.

It’s still pay-as-you-go along with the other general cloud computing benefits, and it allows for clear code-base separation. The downside is that unless you use an abstraction tool like Rancher, you can get trapped into vendor lock-in again.

Where is the Cloud Headed in 2021?

Knight predicts there will be a consolidation of developer tooling this year. FaaS and serverless will fulfill this need to balance flexibility with simplicity and take the next step to bring developers and operations together while not tying down teams to a specific cloud provider.

Teams, like clouds, will embrace hybrid collaborative approaches that bring all stakeholders to the table earlier on. And security will become an essential part of every step.

Here’s to a great year in cloud computing and digital transformation!

Jennifer uses storytelling, writing, marketing, podcast hosting, public speaking and branding to bridge the gaps across tech, business and culture. Because if we’re building the future, we need to think more about that future we’re building.

