Advanced SAP Functionality Via a Container

Christian Holsing, Principal Product Manager, SAP on SUSE, coauthored this post.

SAP and SUSE have a long-standing partnership, and we’re excited about a recent collaboration: together, we have bundled a software development kit (SDK) for SAP’s complex enterprise software inside a container image that can be deployed quickly and easily into almost any environment, with far smaller resource requirements than a full SAP system.

SAP’s enterprise resource planning (ERP) software is one of the most ubiquitous products in the large-enterprise environment. SAP created the high-level Advanced Business Application Programming (ABAP) language, which coders use to extend and customize SAP-based applications. ABAP is relatively easy to learn and supports both procedural and object-oriented programming.

As a result, an entire ecosystem of products, services and programming communities (including the ABAP users group) has grown over the years to enhance SAP functionality and allow organizations to customize their operations. For example, the SAP R/3 system is a business software package designed to integrate all areas of a business to provide end-to-end solutions for financials, manufacturing, logistics, distribution and many other areas.

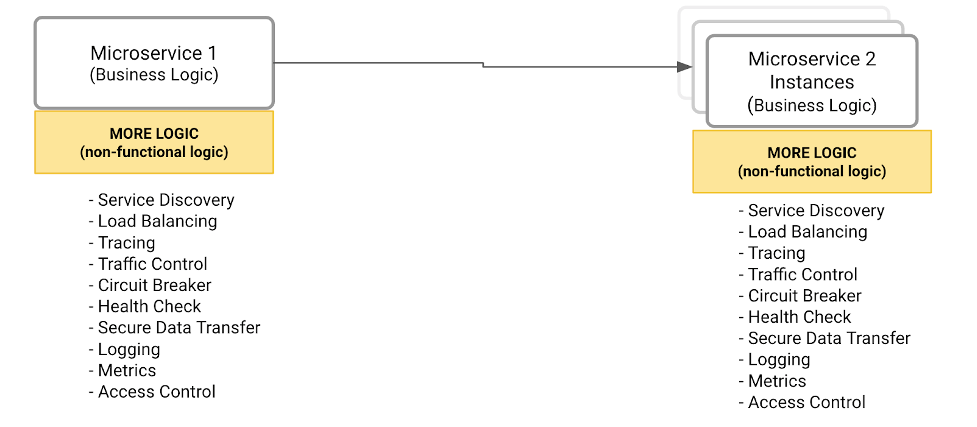

However, integrating any ERP tool or platform is a significant endeavor that requires a lot of setup and configuration time, not to mention heavy computing resources. With an ERP as flexible and extensible as SAP’s, and with so many third-party integration opportunities, deploying it as part of a major installation effort is almost a foregone conclusion.

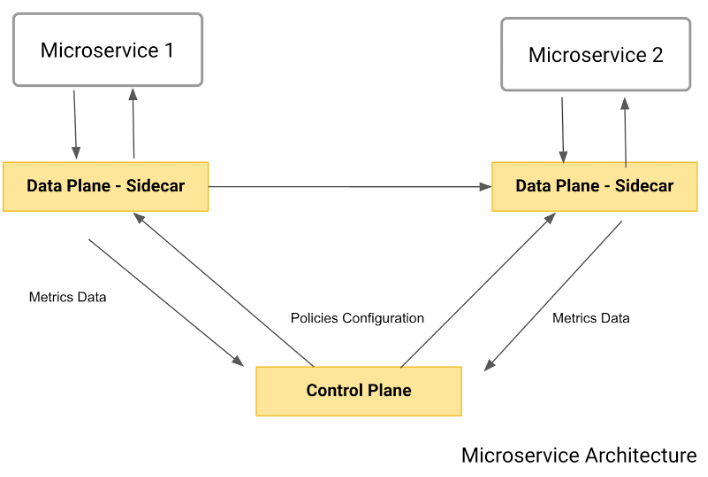

At the same time, the emergence of cloud computing as the primary approach to large-scale and far-flung compute requirements, such as those found in our customer base, has fostered the adoption of containerization as a way to deploy applications reliably and quickly across different compute environments.

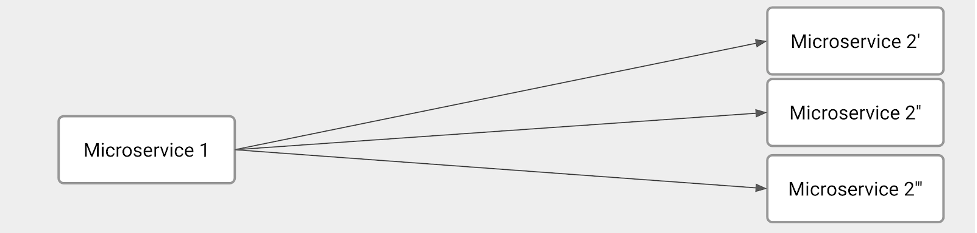

Containerization continues to gain popularity with many large enterprises, where thousands of new containers can be deployed every day. Compared with virtual machines, containers are extremely lightweight. Rather than virtualizing all of the hardware resources and running a completely independent operating system within that environment, containers use the host system’s kernel and run as compartmentalized processes within that OS.

In a container, all of the code, configuration settings and dependencies for a program are packaged into a single object called an image. That makes for great functionality and easy deployment. But few people thought that something as large and complex as SAP functionality could be bundled into a container.

Containerizing ABAP Platform, Developer Edition

So, what did we do? We’ve containerized the SDK (ABAP Platform, Developer Edition) and made it available through Docker Hub, making it the first official image with ABAP Platform from SAP. The great thing about the new container is that it sidesteps most of the memory requirements that made us think such an effort was unlikely. Say you are writing an application for SAP S/4HANA. A full SAP S/4HANA system requires a minimum of 128 GB RAM (256 GB is recommended) and at least 500 GB of disk space. If you wanted to learn how to write extensions for SAP S/4HANA, you either had to buy a huge system or you were out of luck. Now, with the Docker image, you can download the ABAP Platform and learn how to extend SAP S/4HANA on a much more lightweight system: the image requires just 16 GB RAM and 170 GB of disk space.
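To make the difference concrete, here is a minimal pre-flight sketch that compares a host’s physical RAM and free disk space against the figures quoted above (16 GB RAM and 170 GB disk for the image, versus 128 GB RAM and 500 GB disk for a full SAP S/4HANA system). It is not an SAP or Docker tool. It assumes "GB" means 10**9 bytes and reads RAM through `os.sysconf`, which works on Linux and most Unix-like systems but not natively on Windows.

```python
import os
import shutil

# Figures quoted in this post. Treating "GB" as 10**9 bytes is an assumption.
GB = 10**9
IMAGE_MIN_RAM = 16 * GB      # ABAP Platform Docker image
IMAGE_MIN_DISK = 170 * GB
S4HANA_MIN_RAM = 128 * GB    # full SAP S/4HANA system (minimum)
S4HANA_MIN_DISK = 500 * GB


def meets_requirements(ram_bytes: int, free_disk_bytes: int,
                       min_ram: int = IMAGE_MIN_RAM,
                       min_disk: int = IMAGE_MIN_DISK) -> dict:
    """Report which of the RAM and disk minimums the given host satisfies."""
    return {
        "ram_ok": ram_bytes >= min_ram,
        "disk_ok": free_disk_bytes >= min_disk,
    }


def host_resources(path: str = "/") -> tuple:
    """Return (physical RAM in bytes, free disk bytes at `path`).

    The os.sysconf names used here exist on Linux and most Unix-like
    systems, but not on Windows.
    """
    ram = os.sysconf("SC_PAGE_SIZE") * os.sysconf("SC_PHYS_PAGES")
    free_disk = shutil.disk_usage(path).free
    return ram, free_disk


if __name__ == "__main__":
    ram, disk = host_resources()
    print(f"RAM: {ram / GB:.1f} GB, free disk: {disk / GB:.1f} GB")
    print("ABAP Platform image:", meets_requirements(ram, disk))
    print("Full SAP S/4HANA:",
          meets_requirements(ram, disk, S4HANA_MIN_RAM, S4HANA_MIN_DISK))
```

A typical developer laptop with 32 GB RAM and a few hundred GB free would pass the first check and fail the second, which is the gap the image is meant to close.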

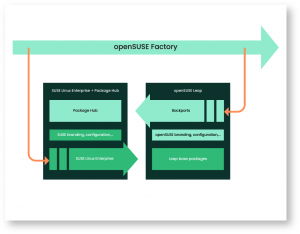

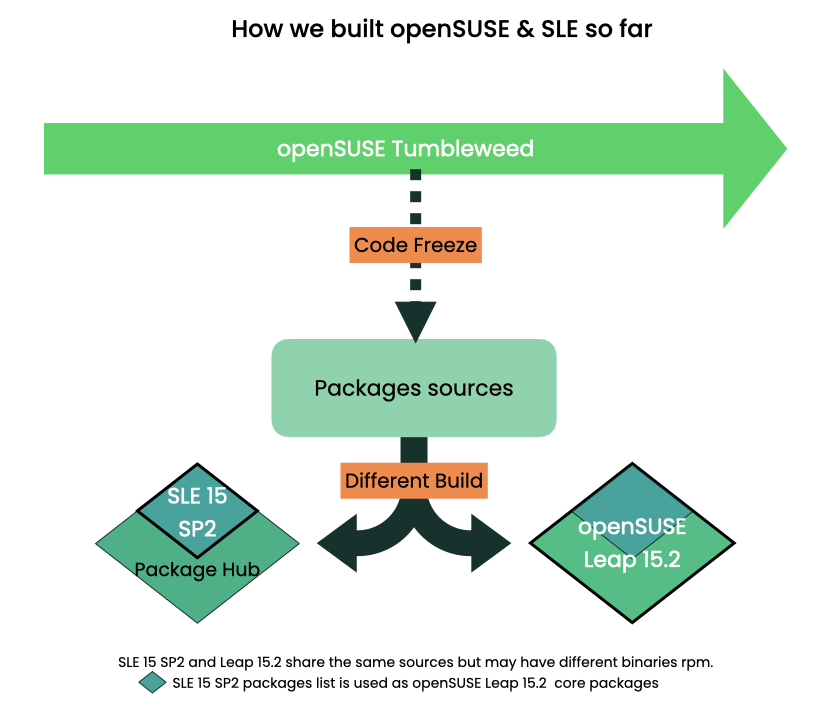

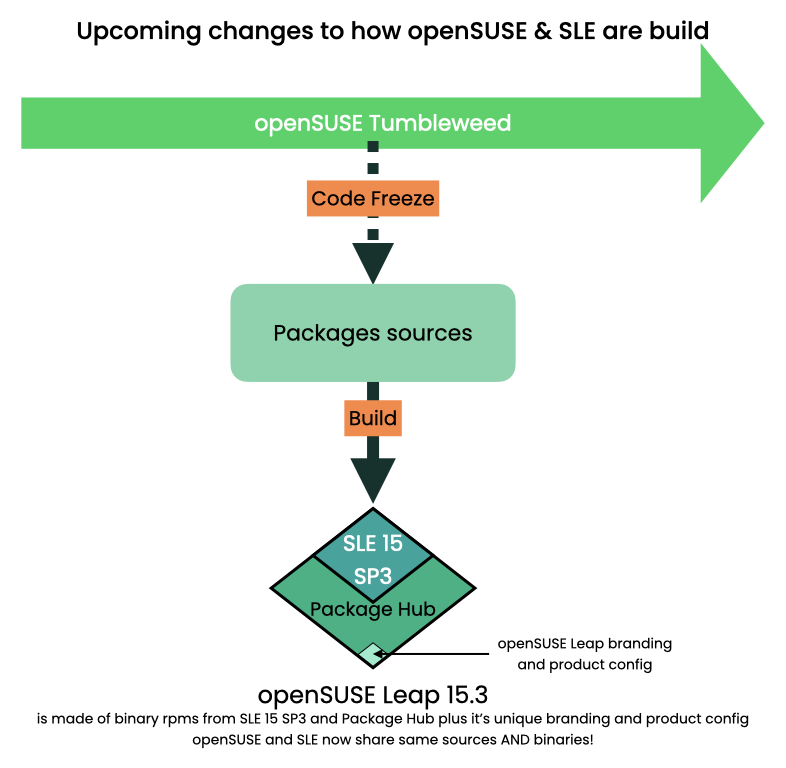

Creating the ABAP Platform image started with the base image containing SUSE Linux Enterprise Server 12 Service Pack 5. We pulled that image onto a local machine, created a container from it and installed ABAP Platform into the container. The final step was to commit the container as a new image and push it to Docker Hub. Now, ABAP developers can pull the image, which includes the SUSE base image, onto their machines for testing, learning or even development purposes.
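The pull, create, install, commit and push sequence above maps onto standard Docker CLI commands. The Python sketch below only prints those commands unless `dry_run=False` is passed. The base image name, container name, target repository and installer command are all hypothetical placeholders, not the names SAP and SUSE actually used, and the real ABAP Platform installation procedure is not shown. It uses `docker run -d ... sleep infinity` rather than `docker create` so the container keeps running while the installer executes inside it.

```python
import shlex
import subprocess

# Hypothetical placeholders; substitute real names before running.
BASE_IMAGE = "example.registry/sles12sp5:latest"    # SLES 12 SP5 base image
CONTAINER = "abap-build"
TARGET_IMAGE = "example-org/abap-platform:dev"      # Docker Hub repository:tag
INSTALL_CMD = ["/bin/sh", "-c", "/opt/installer/install.sh"]  # stand-in installer


def build_steps() -> list:
    """Return the docker CLI invocations for the pull/create/install/commit/push flow."""
    return [
        ["docker", "pull", BASE_IMAGE],
        # Start a long-lived container from the base image so we can install into it.
        ["docker", "run", "-d", "--name", CONTAINER, BASE_IMAGE, "sleep", "infinity"],
        # Run the (hypothetical) installer inside the running container.
        ["docker", "exec", CONTAINER, *INSTALL_CMD],
        # Snapshot the container's filesystem as a new image.
        ["docker", "commit", CONTAINER, TARGET_IMAGE],
        ["docker", "push", TARGET_IMAGE],
    ]


def run(steps: list, dry_run: bool = True) -> None:
    """Print each command; execute it only when dry_run is False."""
    for cmd in steps:
        print("$", shlex.join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    run(build_steps())  # pass dry_run=False to execute for real
```

Committing a running container is the simplest way to mirror the steps described here; a Dockerfile-based build would make the same process reproducible, at the cost of scripting the installation non-interactively.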

There were some hurdles along the way: we had to work out licensing terms between our two companies and create a limited user license for the Docker image. But we all agreed that providing an accessible SDK for experimenting was worth the effort.

The ABAP user community (ABAPers) doesn’t want to worry about infrastructure. They just want to build useful business applications quickly. Putting the ABAP Platform on Docker lets coders see for themselves how easy it is to use, and the resulting image lets them experiment with its tools on Linux, Windows or Mac. We can’t wait for you to try it out.

What’s in the Image?

The SDK includes:

- Extended Program Check, which goes beyond syntax checking to perform more time-consuming checks, such as validating method calls against the called interfaces or finding unused variables

- Code Inspector, which automates mass testing and, among other tasks, analyzes code and offers tips on potentially suboptimal statements and potential security problems

- ABAP Test Cockpit, a new ABAP check toolset that runs static checks and unit tests for ABAP programs

- The new ABAP Debugger, the default debugger in SAP NetWeaver 7.0, which can analyze all types of ABAP programs and offers a state-of-the-art user interface with its own set of essential features and tools

We think creating SDK containers makes a lot of sense. By decoupling the SDK from a full-size SAP system, we let coders experiment with these tools freely. Containerizing it and publishing it on Docker Hub and other registries makes it faster and easier for developers to get their hands on the tools and start building.

Ready to try it out? Visit Docker Hub.