“Only SUSE Manager combines software content lifecycle management (CLM) with a centrally staged repository and class-leading configuration management and automation, plus optional state of the art monitoring capabilities, for all major Linux distributions.”

These days, IT departments manage highly dynamic and heterogeneous networks under constantly changing requirements. One important trend that has contributed to the growing complexity is the rise of software-defined infrastructures (SDIs). An SDI consists of a single pool of virtual resources that system administrators can manage efficiently and always in the same way, regardless of whether the resources reside on premises or in the cloud. SUSE Manager is a powerful tool that brings the promise of SDI to Linux server management.

You can use SUSE Manager to manage a diverse pool of Linux systems through their complete lifecycle, including deployment, configuration, auditing and software management. This paper highlights some of the benefits of SUSE Manager and describes how SUSE Manager stacks up against other open source management solutions.

Introducing SUSE Manager

SUSE Manager is a single tool that allows IT staff to provision, configure, manage, update and monitor all the Linux systems on the network in the same way, regardless of how and where they are deployed. From remote installation to cloud orchestration, automatic updates, custom configuration, performance monitoring, compliance and security audits, SUSE Manager 4 deftly handles the full lifecycle of registered Linux clients.

A clean and efficient web interface (or an equivalent command-line interface) provides a single entry point to all management actions, saving time and allowing a single admin to manage a greater share of network resources.

Discovering SUSE Manager

The diversity of Linux systems can add complexity to the management environment. Time spent managing a large, complex Linux estate with dissimilar tools adds significantly to costs. Your IT staff can be much more efficient with a single tool to automate, coordinate and monitor Linux operations.

SUSE Manager provides unified management of Linux systems regardless of whether the system is running on bare metal, a virtual machine (VM) or a container environment in a server room, private cloud or public cloud. SUSE Manager will even manage Linux systems running on IoT devices, including legacy devices where agents cannot be installed. The ZeroMQ protocol provides parallel communication with client systems, which scales much more efficiently than alternatives that talk to each client one at a time.
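A back-of-the-envelope model shows why parallel communication matters as the number of clients grows. The sketch below is illustrative only: the function names, the per-client time and the concurrency figure are assumptions chosen for the arithmetic, not measurements of SUSE Manager or ZeroMQ.

```python
import math


def sequential_seconds(clients: int, per_client_seconds: float) -> float:
    """Total time when the server contacts one client after another."""
    return clients * per_client_seconds


def parallel_seconds(clients: int, per_client_seconds: float, concurrency: int) -> float:
    """Total time when up to `concurrency` clients are handled at once."""
    if concurrency < 1:
        raise ValueError("concurrency must be at least 1")
    # Clients are processed in batches; each batch takes one per-client interval.
    batches = math.ceil(clients / concurrency)
    return batches * per_client_seconds
```

Under these assumed numbers, 1,000 clients at 2 seconds each take 2,000 seconds when contacted one at a time, but 8 seconds when 250 are handled concurrently.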

SUSE Manager is tightly integrated with SUSE Linux Enterprise, but not limited to it. Previous releases of SUSE Manager could already administer Red Hat, CentOS, OEL and other RPM-based systems. Version 4 adds openSUSE and Ubuntu clients to the list, with Debian coming soon. The SUSE Manager client-side agent is written in Python and is therefore portable. Accompanying APIs allow easy integration with third-party tools, as well as fast, risk-free deployments of complex services. SUSE Manager version 4 includes new tools that make it easier to install and configure both high availability clusters and SAP HANA (High-Performance Analytic Appliance) nodes.
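As a minimal sketch of what API integration can look like, the following Python function lists the names of registered systems through the XML-RPC API. It assumes the API is reachable at the server's /rpc/api path and that the auth.login, system.listSystems and auth.logout calls behave as in the Spacewalk-derived API; the host name and credentials in the usage note are placeholders.

```python
import xmlrpc.client


def connect(server_url):
    """Create an XML-RPC proxy; no network traffic happens until a call is made."""
    return xmlrpc.client.ServerProxy(server_url)


def list_system_names(client, username, password):
    """Log in, fetch the registered systems, and always log out again."""
    key = client.auth.login(username, password)
    try:
        systems = client.system.listSystems(key)
        # Each entry is assumed to be a struct that carries a "name" field.
        return sorted(system["name"] for system in systems)
    finally:
        client.auth.logout(key)
```

A script might call list_system_names(connect("https://manager.example.com/rpc/api"), "admin", "secret"), where the URL and credentials are hypothetical. Passing the client in as a parameter keeps the function easy to test against a stand-in object.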

SUSE Manager consolidates all the following management tasks into a single tool:

• Deployment – Declare how many Linux systems you need and what you need them for, and SUSE Manager does the rest. Admins can build their own ISO images for bare metal, containers or VMs, using either AutoYaST or Kickstart, and installation can be attended or fully unattended. Integration with the Cobbler installation server allows efficient deployment using the Preboot Execution Environment (PXE).

• Software updates – SUSE Manager automates software updates for whole systems or individual packages. A powerful security system guarantees that every package is centrally authorized. You can schedule and execute multiple software updates at once, using one command.

• Configuration management – SUSE Manager supports file-based configuration, as well as state-based configuration management using Salt. The configuration and provisioning tools included with SUSE Manager enable you to define system prototypes and then adapt prototype definitions for easy automation and complex environments.

• Content Lifecycle Management (CLM) – The new CLM interface in SUSE Manager 4 (Figure 1) makes it easier and less expensive to manage software applications and services throughout the DevOps cycle. CLM lets you select and customize software channels, test them and promote them through the stages of the package lifecycle. Promoting an existing channel (rather than rebuilding it) as a package moves from QA to production saves time and adds convenience for IT staff.

• Security – SUSE Manager supports automatic, system-wide configuration and vulnerability scans, using either CVE lists or OpenSCAP, an open source implementation of the Security Content Automation Protocol (SCAP).

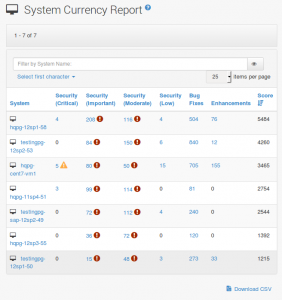

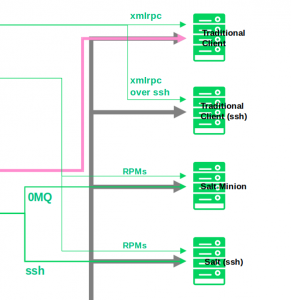

• Performance and compliance monitoring – SUSE Manager creates a unified inventory of all systems within the organization, reporting (Figure 2) on any deviation from configuration or security requirements and eliminating “shadow IT” activities from uncontrolled or undocumented systems. An optional add-on for version 4 implements a monitoring and alerting system built on the next generation of Prometheus-based monitoring tools to gain insights and reduce downtime.
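The promotion step described under Content Lifecycle Management can be pictured with a toy model. The class below is a conceptual sketch, not SUSE Manager code: the class name, stage names and package labels are invented for illustration. It shows the key idea that promotion copies an already tested package set to the next stage unchanged instead of rebuilding it.

```python
from dataclasses import dataclass, field


@dataclass
class ContentProject:
    """Toy content lifecycle: ordered stages, each holding one package set."""
    stages: list
    contents: dict = field(default_factory=dict)

    def build(self, packages):
        """Place a new package set into the first stage."""
        self.contents[self.stages[0]] = frozenset(packages)

    def promote(self, stage):
        """Copy the content of `stage` unchanged into the stage that follows it."""
        index = self.stages.index(stage)
        if index + 1 >= len(self.stages):
            raise ValueError(f"{stage!r} is the last stage")
        if stage not in self.contents:
            raise ValueError(f"{stage!r} has no content to promote")
        next_stage = self.stages[index + 1]
        self.contents[next_stage] = self.contents[stage]
        return next_stage
```

With stages such as "dev", "qa" and "prod", a package set built once in "dev" reaches "prod" through two promote calls, and production receives exactly what was tested.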

Figure 1: The CLM interface moves services from testing to production hosts with a few clicks.

Figure 2: Complete inventory and status of all systems, in one efficient interface.

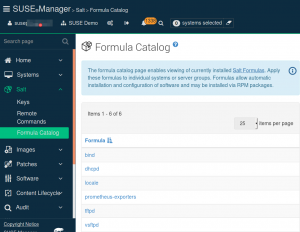

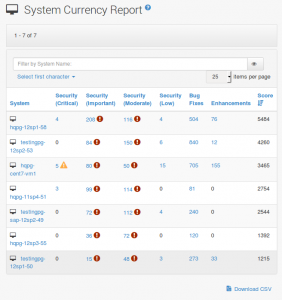

An intuitive GUI offers a complete view of the network at a glance and includes features such as “Formulas with Forms” (Figure 3) that make SUSE Manager the ideal tool for consistent, highly efficient management of hundreds or thousands of servers. Expert Linux and Unix admins who prefer to work at the command line will find a rich set of text-based commands. The SUSE command-line tool “spacecmd” makes it easy to integrate SUSE Manager functions into admin scripts and homegrown utilities, and SUSE Manager supports Nagios-compatible monitoring with Icinga.
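As one hedged illustration of script integration, the Python sketch below wraps a single non-interactive spacecmd call. It assumes spacecmd is installed on the machine running the script, that its -s (server) and -u (username) options and its system_list command are available, and that the password comes from spacecmd's own configuration or prompt. The helper names are hypothetical, and the output is treated simply as one entry per non-empty line.

```python
import subprocess


def spacecmd_argv(server, username, command, *args):
    """Build the argument list for one non-interactive spacecmd call."""
    return ["spacecmd", "-s", server, "-u", username, command, *args]


def list_systems(server, username, run=subprocess.run):
    """Run `spacecmd system_list` and return its non-empty output lines."""
    result = run(
        spacecmd_argv(server, username, "system_list"),
        capture_output=True,
        text=True,
        check=True,  # raise CalledProcessError if spacecmd exits with an error
    )
    return [line.strip() for line in result.stdout.splitlines() if line.strip()]
```

The run parameter defaults to subprocess.run and exists only so that the function can be exercised with a stand-in during testing.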

A sensible security system enables you to distribute Linux administration work among the staff according to each employee’s skills and responsibilities. The main administrator of a SUSE Manager server can delegate operations to users at different levels, creating accounts for tasks such as key activation, images, configuration and software distribution.

Figure 3: Salt Formulas can be grouped and applied to single systems or whole groups.

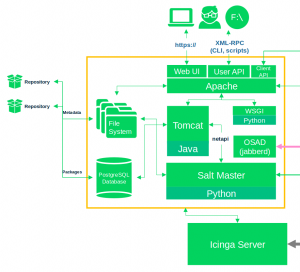

The Open Source Edge

A fully open source development model improves code quality and prevents vendor lock-in. The upstream project for SUSE Manager, Uyuni, is 100 percent open source (Figure 4). The software is developed in the open, on GitHub, with frequent releases and solid, automated testing. Although Uyuni is not commercially supported by SUSE and does not receive the same rigid QA and product lifecycle guarantees, it is not stripped down in any way. Unlike other vendors, whose commercial products heavily rely on extra features not available in the basic, open source version, SUSE keeps the same, full feature set available in both the community-based and subscription-based variants.

Adopting SUSE Manager, or migrating to it, does not mean that you must abandon your previous configuration management systems. For instance, SUSE Manager can act as an External Node Classifier (i.e., configuration database) for Puppet or Chef.

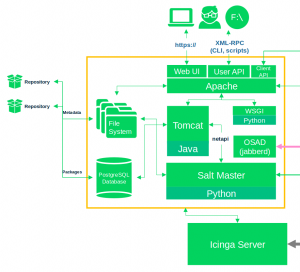

Figure 4: The SUSE Manager architecture – open standards and well-defined, open interfaces.

Salt on the Inside

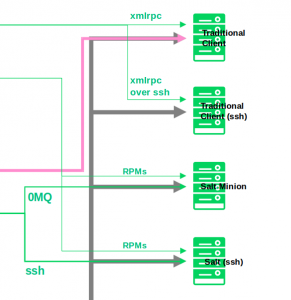

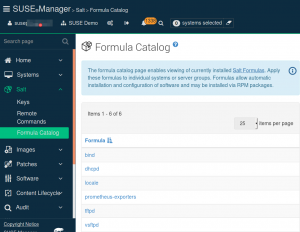

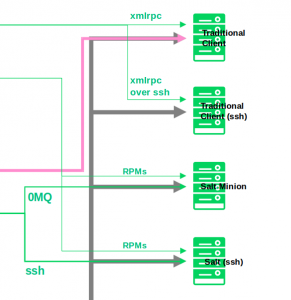

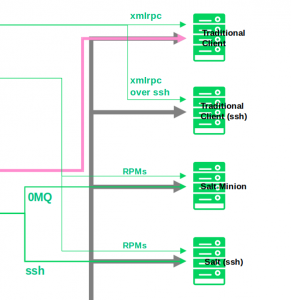

SUSE Manager supports the powerful Salt configuration management system. Salt is state-based: you declare the state a system should be in, and Salt makes whatever changes are needed to reach it. A client agent, known as a Salt “minion,” can find the Salt master without the need for additional configuration (Figure 5). If the client does not have an agent, Salt is capable of acting in “agentless” mode, sending Salt-equivalent commands through an SSH connection. The ability to operate in agent or agentless mode is an important benefit for a diverse network. SUSE Manager 4 also includes Salt-based functions for VM management that can manage hundreds of servers with near real-time efficiency.
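To make the state-based approach concrete, here is a small sketch of a Salt state written for Salt's Python renderer, in which a file beginning with "#!py" defines a run() function that returns the state declarations as a dictionary. The package and service names are placeholders chosen for illustration; most Salt states are written in YAML, and the same structure applies there.

```python
#!py
# Salt's "py" renderer calls run() and uses the returned dictionary
# as the state declaration for the minion.


def run():
    """Declare that the chrony package is installed and its service is running."""
    return {
        "chrony": {
            "pkg.installed": [],
        },
        "chronyd": {
            "service.running": [
                {"enable": True},
                # Start the service only after the package state has succeeded.
                {"require": [{"pkg": "chrony"}]},
            ],
        },
    }
```

The file describes an end result rather than a sequence of commands, so applying it to a system that already matches the declaration should change nothing.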

SUSE Manager extends the automatic configuration capabilities of Salt through its support for action chains. An action chain makes it possible to use a single command to specify and then execute a complex task that consists of several steps. Examples of chainable actions include rebooting the system (even in between other configuration steps of the same system!), installing or updating software packages, and building system images.
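The idea of an action chain can be sketched as an ordered list of steps that run one after another. The code below is a conceptual model only, not SUSE Manager's implementation: the function name is invented, and stopping at the first failed step is an assumption made for the sketch.

```python
from typing import Callable, List, Tuple

# A step is a label plus a callable that returns True on success.
Step = Tuple[str, Callable[[], bool]]


def run_chain(steps: List[Step]) -> List[str]:
    """Run steps in order and stop at the first one that reports failure.

    Returns the labels of the steps that completed successfully.
    """
    completed = []
    for label, action in steps:
        if not action():
            break
        completed.append(label)
    return completed
```

A chain such as "update packages", "reboot" and "build image" would be passed as three labeled callables, and the returned list shows how far the chain progressed.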

Figure 5: SUSE Manager communicates transparently with both agent-enabled and agentless systems.

Comparing the Alternatives

SUSE Manager is one of several open source tools that inhabit the Linux space. Although the benefits of each depend on the details of your network and the needs of your organization, the following analysis offers a quick look at how SUSE Manager compares with the competition.

SUSE Manager vs. Puppet

The Puppet cross-platform orchestration tool comes in an open source version, as well as in a commercially supported Enterprise edition (which, however, is not entirely open source).

Traditionally, Puppet requires an agent on each client, which adds complexity and additional effort to configure and roll out for new systems. In the original Puppet working mode, changes are not implemented immediately, but only the next time the agent asks the server for an update – that is, after an interval configured by the administrator. Tools like Puppet Tasks and Puppet Bolt, which are included in recent releases of Puppet Enterprise, overcome these limits, but they are still being integrated with the main product. The same applies to Puppet’s open source initiative for cloud orchestration with Kubernetes, called Lyra.

Puppet Enterprise’s native configuration directives require advanced knowledge of Puppet’s custom domain-specific language (DSL). Support for the simpler, more widespread YAML language was added to Bolt in 2019. Many of the advanced Puppet features required for full functionality are found in additional modules, either from the official Puppet Forge website or from the larger Puppet Community. Interaction of modules from independent developers can add complication and lead to uncertainty or unpredictability in long-term support. Regardless of module issues, several advanced tasks still demand input from the command line, even in Puppet Enterprise.

Even Puppet’s support for managing bare metal, VMs, containers and cloud instances is more complex than in SUSE Manager, relying on the Razor component, which is included in the Enterprise version.

To summarize, Puppet offers less integration of crucial components, as well as a significantly steeper learning curve than SUSE Manager. Puppet users will spend more time configuring the system in order to achieve an equivalent level of functionality.

SUSE Manager vs. Chef

Chef is a cross-platform, open source tool that is also available in a commercial version called Chef Automate. Like Puppet, Chef requires an agent on each node, and the “recipes” used to define client configurations require developer-level knowledge of a Ruby-based DSL.

A Chef installation also requires a separate, dedicated machine (called the Chef Workstation). The purpose of the workstation is to host all the configuration recipes, which are first tested on the workstation and then pushed to the central Chef server. A Chef Workstation can apply configuration updates directly over SSH, and the web interface of Chef Automate supports agentless compliance scans. However, the interaction of the Chef server, Chef Workstation and nodes is difficult for beginners to understand and requires a lot of initial setup and preliminary study.

Many of the advanced features required for a comparison with SUSE Manager are only available in the commercial Chef Automate edition. For instance, separate tools for compliance management (InSpec) and application management (Habitat) are only integrated in the commercial version of Chef, whereas these capabilities are fully integrated into the basic, upstream version of SUSE Manager.

SUSE Manager vs. Ansible Automation

The Ansible management tool puts the emphasis on simplicity. Ansible is best suited for small and relatively simple infrastructures. Part of Ansible’s simplicity is that, unlike other similar products, it has no notion of state and does not track dependencies. The tool simply executes a series of tasks and stops as soon as one of them fails. When the administrator provides a playbook (a series of tasks to execute) to Ansible, Ansible compiles it and uses SSH to send the commands to the computers under its control, one at a time. In small organizations, the performance impact is typically unnoticed, but as the size of the network increases, performance can degrade, and in some cases, commands or upgrades may fail.

In general, this stateless design makes it more difficult for Ansible to execute complex assignments and automation steps. Ansible’s playbooks are easier to create and implement than the DSL rules used with Puppet or Chef, but the YAML markup language used with Ansible is not as versatile. And, although Ansible is written in Python, it does not offer a Python API to support advanced customization and interaction with other products. Ansible also does not provide compliance management or a central directory of the systems it manages. The community-driven AWX open source project provides a web interface to Ansible, but it is not as mature as the web interface of SUSE Manager.

The commercial version of Ansible, called Ansible Automation, is composed of Ansible Engine and Ansible Tower. Ansible Engine, which is the component that directly acts on the managed systems, is a direct descendant of the open source version, with the same agentless/YAML-based architecture. Ansible Tower is a web-based management interface for Ansible Engine based on selected versions of AWX, hardened for long-term supportability and able to integrate with other services. Some features of Ansible Tower are not available under open source licenses.

SUSE Manager and SaltStack

The SaltStack orchestration and configuration tool comes in an open source edition, as well as through the SaltStack Enterprise commercial version. Like SUSE Manager, SaltStack uses the Salt configuration engine for managing installation and configuration services.

SUSE Manager offers many more features than the open source version of SaltStack. For instance, SUSE Manager supports both state definition and dynamic assignment of configuration via groups through the web interface, as well as offering auditing and compliance features that aren’t available in the open source SaltStack edition.

Like SUSE Manager, SaltStack Enterprise is an enterprise-level management tool based on the Salt configuration engine. In many ways, SaltStack Enterprise is the most similar to SUSE Manager of all the tools described in the paper, so the choice might depend on the details of your environment.

Users who prefer to operate from the command line might prefer SUSE Manager because of its sophisticated command-line interface. And of course, networks with a large investment in SUSE Linux will appreciate SUSE Manager’s tight integration with the SUSE environment. SUSE Manager is also a better choice if your organization depends on SAP HANA business services.

In other cases, the choice between SUSE Manager and SaltStack Enterprise might depend on cost, the size of your network, which web interface best matches your workflow and other factors. Keep in mind that SUSE Linux is an ideal platform for supporting SaltStack Enterprise. SaltStack Enterprise is meant to serve as a master of Salt masters, a role that doesn’t conflict with SUSE Manager, so the two tools can coexist.

If you are using SaltStack now and wish to continue to use it, the experts at SUSE can help you with a plan for how to integrate SaltStack with SUSE Manager and the SUSE Linux environment.

Conclusion

SUSE Manager provides a single, full-featured interface for managing and monitoring the whole lifecycle of Linux systems in a diverse network environment, either from an easy graphical interface (Figure 6) or from the command line. You can manage bare metal, virtual systems and container-based systems within the same convenient tool, attending to tasks such as deployment, provisioning, software updates, security auditing and configuration management. The flexible Salt configuration system allows convenient configuration definition and easy automation, and it is capable of acting in agent or agentless mode. In these ways, SUSE Manager greatly reduces the complexity and risks of dealing with highly dynamic Linux infrastructures and operations.

Unlike several of its competitors, SUSE offers the full feature set of SUSE Manager through its upstream, community-based development project Uyuni, thus preventing lock-in, simplifying evaluation and maximizing the benefits of open source development.

Strong support for customization and complex configurations, along with the ease and convenience of a single-source management solution, make SUSE Manager a powerful option for managing Linux systems in a diverse, enterprise environment.

For customers using SAP, dedicated “Formulas with Forms,” together with a new user interface and API, allow easy configuration of SAP HANA nodes, as well as simpler deployment of patch staging environments, without the need for custom scripting.

Talk to the experts at SUSE for more on how you can scale down overhead and scale up efficiency by adding SUSE Manager to your Linux network environment.

Figure 6: The complete status of the network and all the functions to manage its whole lifecycle, in the main panel of SUSE Manager.