As a solution architect in SUSE Global Services, I often hear the following question:

What is the main difference among the following?

- Hosting an application on a cloud platform

- Hosting an application on a container as a service platform

- Building a simple virtual machine and hosting an application on it

(And, yes, most people describe virtual machines as simple, because they are the original, traditional replacement for hosting applications on physical servers.)

In this blog post, I will attempt to dispel the confusion among these approaches and provide a roadmap for using them for application transformation. But before we do that, let’s delve into some history.

In the “old days,” applications were always hosted in the traditional way, on a physical server or a group of physical servers. However, physical servers are expensive, hard to maintain, and hard to grow and scale. That’s when virtual machines (VMs) grew in popularity. VMs provided a better way to maintain, grow and scale. That is, they were easier to back up, restore and migrate from one region to another, and they were easier to replicate across multiple domains, zones or regions.

This table is a simple high-level comparison between physical servers and virtual machines or servers:

| Point of Comparison | Physical Servers | Virtual Machine |
| --- | --- | --- |
| Scalability | Hard to scale, especially vertically: hardware gets old and has limited memory, storage and CPU. For horizontal scaling, we need to buy hardware, ship it to the hosting datacentre and ensure it is compliant with the datacentre support regulations. | Easy to scale horizontally: we can add new virtual machines as necessary. However, we still have the same vertical scaling limitations as physical servers. |
| Maintenance | Hardware gets old, and maintenance gets expensive and hard. At a certain point, it must be replaced. When the hardware is replaced, all hosted data and applications must be migrated and tested on the new hardware. | Maintenance is better, especially from a hardware perspective. It is the responsibility of the virtual machine provider (for example, a cloud provider such as AWS or Azure, or a hosted virtualized environment such as VMware). |
| Cost | Very expensive. The cost covers not only the hardware but also the facilities needed to operate it, such as the electricity and the cooling of the server. | Much less expensive. |
| Performance | Better performance, as the full power of the underlying physical hardware is dedicated to the application. | Not as good as a physical server. Network bandwidth and all underlying hardware are shared between all running VMs. |
| Footprint | Large footprint. | Smaller footprint. The hardware is not owned by the application but rented to the application. |
| Security | You are in control and in charge of the hardware and network, so advanced security policies can be implemented, for example physical isolation of the network packets. | Security in virtualized environments is not as much of a challenge as many people think. However, it is limited, as you cannot physically isolate the network, the data communication and the storage. You can still implement security on the data and its routing. One important thing to keep in mind for VMs is that each VM has its own isolated OS kernel, so the runtime is not shared, which makes it more secure. |
| Portability | Not portable at all. | Highly portable. For example, a VM can easily be migrated as a snapshot from one VM provider to another, or from one datacentre to another. |

So, with the pros outweighing the cons, the trend became to implement VMs. You simply set up a group of VMs and host the application on them. Simple, right? Not so fast! Developers and testers do not use the same setup as production because the licenses for VMs are not free. So, in the end, the configuration and setup of the environments are not the same. Additionally, the time and effort needed to configure and install the software on a VM are not small.

The World of Containers

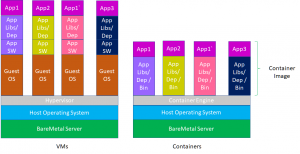

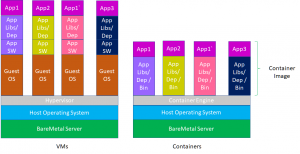

Enter containers. Containers came into the picture and started fixing all those issues. Now the developer defines the image they want to place the application on and hands it over to the operator. The same image is used for testing the application and for running it in production. So containers solve our problem: they enable consistency. But what about cost, effort, security and all the other aspects? Let us do a quick comparison.

Here’s our comparison table between VMs and containers:

| Point of Comparison | Virtual Machine | Container |
| --- | --- | --- |
| Scalability | Easy to scale horizontally, with some limitations on vertical scaling. Scalability is expensive: in the public cloud we have to pay for each VM, while in a private cloud or on-premises we need to purchase hardware. | Easy to scale horizontally; no need to scale vertically. Scalability is not expensive: the footprint is small and the containers share the OS runtime. |
| Maintenance | Maintenance is harder, as the IT team must maintain the software running on the machine and the operating system, and install patches. They must also ensure compatibility. | Maintenance is very simple. The owner of the image is the one responsible for maintaining it. As the image is a light component (that is, it is not a real operating system), maintenance is much easier and more efficient. |
| Cost | Much more expensive than a container. You have to pay for the underlying operating system and its support and patches, as well as the underlying renting cost for the VM. | Almost zero cost, depending on the software used by the image. There is no cost for the operating system, as it is very light. You are only paying for licenses and support for the software installed above the operating system. Licenses in this case are much cheaper than for a VM because most software licensing models are based on vCores/cores, allowing you to host a number of containers on the same cores. |
| Performance | Better performance, as no kernel is shared. Each VM has its own operating system and its own kernel. | Very good, even though the same kernel is shared in the hosting environment (whether it is a VM or a bare-metal machine). The main architecture principle of containerization is that you build a container for the smallest unbreakable component or module in your application. It does not have to be a microservices architecture (MSA), because the aim is to make deployments consistent, simpler, repeatable, and easy to scale when needed in a cost-efficient manner. |
| Footprint | Larger footprint. | Extremely small footprint. It only hosts what the MSA or the application needs and nothing else. |
| Security | Better security because no sharing occurs in the kernel; each VM has its own operating system and its own isolated kernel. | Security can be a challenge when using containers, given that containers share the same kernel. With containers, you cannot physically isolate the network, the data communication and the storage. However, you can still implement security on the data and its routing. The community has started working on lightweight VMs that have a very small footprint like a container but their own isolated runtime and kernel; one example is Kata Containers. |
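
The licensing argument in the Cost row can be illustrated with a little arithmetic. The sketch below assumes a per-core licensing model; the price per core, the host size and the number of containers per host are hypothetical figures chosen only to show the effect, not real prices.

```python
def license_cost_per_instance(licensed_cores: int, price_per_core: float, instances: int) -> float:
    """Spread a per-core license bill over the application instances it covers."""
    if instances <= 0:
        raise ValueError("instances must be positive")
    return licensed_cores * price_per_core / instances


PRICE_PER_CORE = 1000.0  # hypothetical yearly price per licensed core
CORES_PER_HOST = 4       # hypothetical size of one VM or one container host

# One application instance per VM: the 4-core license covers a single instance.
vm_cost = license_cost_per_instance(CORES_PER_HOST, PRICE_PER_CORE, instances=1)

# Eight containers sharing one 4-core host: same license, eight instances.
container_cost = license_cost_per_instance(CORES_PER_HOST, PRICE_PER_CORE, instances=8)

print(f"license cost per instance on VMs: {vm_cost:.2f}")
print(f"license cost per instance in containers: {container_cost:.2f}")
```

With these assumed figures, the same license is spread over eight instances instead of one, which is the saving the table refers to. Real savings depend on the vendor's licensing terms and on how densely containers can be packed.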

VMs vs Containers

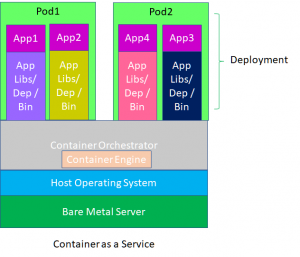

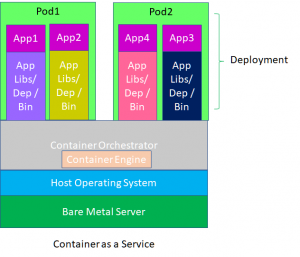

CaasP

Comparing VMs to containers makes containers seem pretty good, right? So now most customers start by building small containers rather than VMs. Containers are more cost-efficient and highly flexible, and they increase the quality of in-house applications.

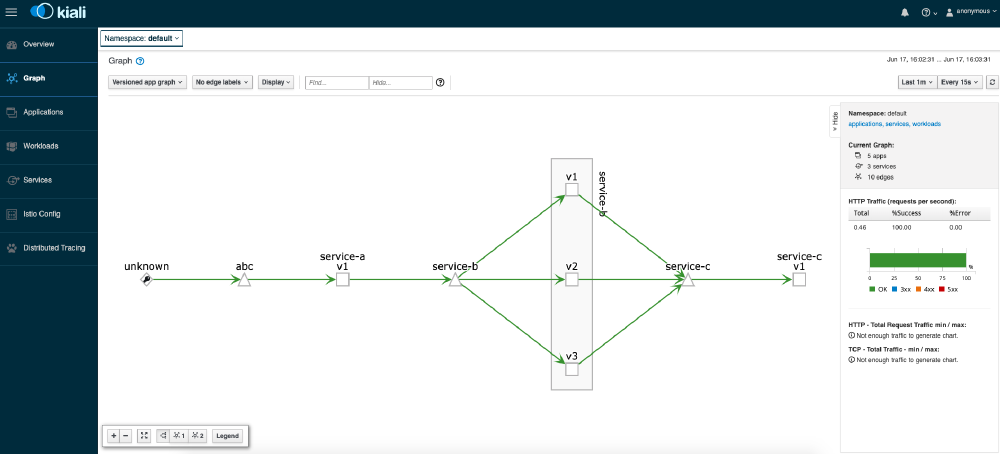

But do containers really solve it all with a runtime engine such as Docker or CRI-O, in conjunction with an orchestrator such as Kubernetes (K8s)? Well, like most things, the answer is that it depends on both the needs and the requirements.

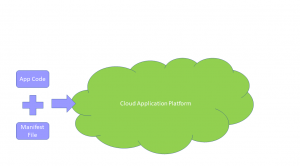

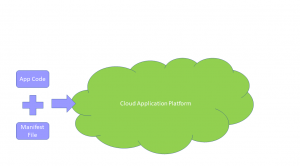

And Along Came Cloud Application Platform

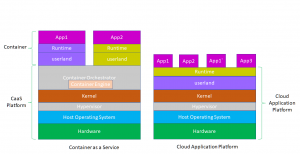

First, let’s talk about the cloud application platform and see a comparison between it and a container as a service.

What is a cloud application platform? The simple answer is that it is a highly advanced PaaS (platform as a service). Ok, you’re thinking, a container as a service is a PaaS. So, how is it different and what is meant by an advanced PaaS?

A cloud application platform offers a runtime to run an application in an environment where clients don’t care about the runtime hosting the application, as long as it complies with a set of rules and regulations.

This might seem a bit confusing, so let’s look at an example. Let’s assume we have a Java web application built with Spring Boot. What does each platform offer, and what is the RACI (responsibility assignment) of hosting it?

| Aspect | Cloud Application Platform | Container as a Service |
| --- | --- | --- |
| What is needed to host the application | - Create a manifest or a build file that sets all the dependencies and all the rules and regulations the platform needs to prepare for the application. In our case, it will list the required Java runtime version and all the services linked to the application, for example a database.<br>- Push the application for deployment using a CI/CD pipeline or a simple command. | - Create the image containing the Java runtime and all the dependencies.<br>- Create a pod (if it is running on K8s) describing the deployment.<br>- Define the exposed ports and network setup.<br>- After building all the runtime images/containers/pods, use the orchestrator engine to push them to the container as a service using CI/CD or a simple CLI command. |
| What the platform does | - Create a version for the application.<br>- Build the application; in this case, the result would be a WAR. Prepare the required runtime based on the configuration in the manifest file.<br>- Define all the environment variables required for the application, including the backend services.<br>- Host the application and allow others to communicate with it using either routes or the API gateway.<br>- Maintain the application instances and scale out or in when needed. | - Create the runtime instance from the developed files describing the target runtime, networking, dependencies, storage, and the runtime containers and pods.<br>- Maintain the running instances and scale out or in when needed. |
| Responsibilities | - The application owner is only responsible for the application code and can focus on the business.<br>- The platform owner is responsible for maintaining the runtime and the services offered by the platform, such as upgrading the Java runtime. The platform owner also maintains and takes care of the licences required for the application runtime and consumed services.<br><br>An important added value is that the application owner doesn’t really care about the hosting runtime as long as it complies with the application regulations. For example, if it is a web application, the application code and owner don’t know which web server is running. | The application owner is fully responsible for:<br>- The images and their maintenance, including the installed software as well as the licences.<br>- All deployment scripts to the platform.<br><br>The platform owner is responsible for maintaining the container and orchestrator runtime and the services offered by the platform, such as logging and monitoring. |
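
To make the difference in what the developer hands over more concrete, here is a minimal Python sketch of the two descriptions from the table. It assumes a Cloud Foundry-style application manifest for the cloud application platform and a Kubernetes Pod definition for container as a service. The application name `shop-web`, the WAR path, the service name `shop-db`, the image reference and the port are all hypothetical placeholders.

```python
import json

# Hypothetical Spring Boot application, used only for illustration.
APP_NAME = "shop-web"


def cap_manifest() -> dict:
    """Cloud Foundry-style manifest: the developer names the artifact, the
    buildpack and the linked services; the platform prepares the runtime."""
    return {
        "applications": [
            {
                "name": APP_NAME,
                "path": "target/shop-web.war",     # hypothetical build output
                "buildpacks": ["java_buildpack"],  # platform supplies the Java runtime
                "instances": 2,
                "memory": "1G",
                "services": ["shop-db"],           # hypothetical database service
            }
        ]
    }


def caas_pod() -> dict:
    """Kubernetes Pod definition: the developer owns the image (Java runtime
    and dependencies included) and declares the exposed port explicitly."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": APP_NAME, "labels": {"app": APP_NAME}},
        "spec": {
            "containers": [
                {
                    "name": APP_NAME,
                    "image": "registry.example.com/shop/shop-web:1.0.0",  # hypothetical image
                    "ports": [{"containerPort": 8080}],
                }
            ]
        },
    }


if __name__ == "__main__":
    print(json.dumps(cap_manifest(), indent=2))
    print(json.dumps(caas_pod(), indent=2))
```

The manifest never mentions an image or a web server, while the pod definition points at an image that the application owner must build and maintain. That is the split of responsibilities the table describes.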

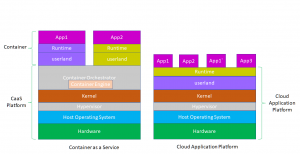

CAP

CaasP vs CAP

Simply put, a cloud application platform is more of a complete PaaS that hosts and supports the application runtime. A container as a service is a container runtime and orchestrator that clients push their images to and run them on.

You Have Choices

So when do you choose a cloud application platform vs a container as a service? Here are some tips:

- Go towards a cloud application platform if you are building a cloud-native application, as it will leverage cloud awareness and cloud-native design principles.

- Go towards a cloud application platform if you don’t care about the underlying runtime. That is, you don’t care whether the application is running on Apache HTTP Server or the NGINX web server.

- Go towards a cloud application platform if you want to enforce 12-factor principles and cloud application development principles.

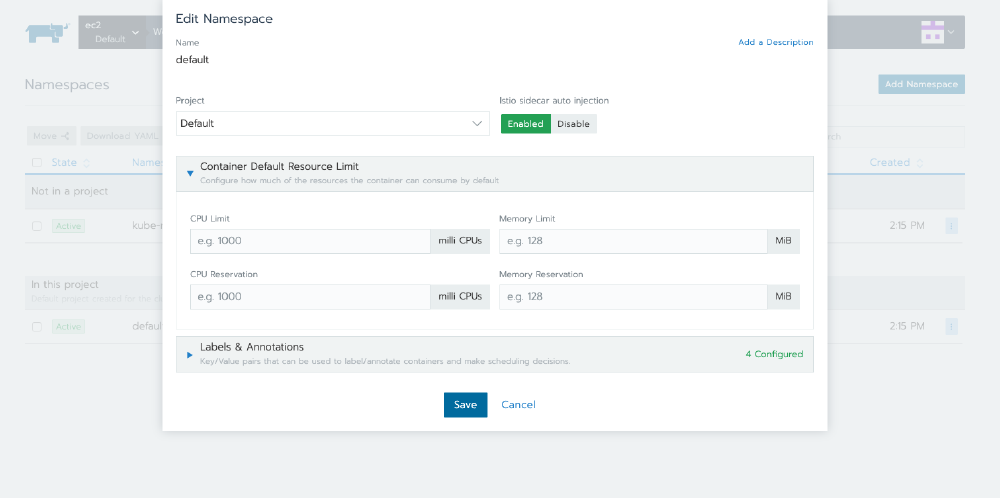

- Go towards container as a service if you want to define the software that runs the application and how it is installed.

- Go towards container as a service if you want to have better control over the network setup.
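
The tips above can be read as a simple checklist. The following Python sketch encodes them as one possible decision helper. The `Needs` fields and the idea of counting how many tips point each way are assumptions made for illustration, not a formal selection method.

```python
from dataclasses import dataclass


@dataclass
class Needs:
    """Hypothetical checklist mirroring the five tips above."""
    cloud_native_app: bool = False        # building a cloud-native application
    runtime_agnostic: bool = False        # don't care which web server runs the app
    enforce_twelve_factor: bool = False   # want 12-factor principles enforced
    custom_software_stack: bool = False   # want to define the software and its setup
    network_control: bool = False         # want better control over the network


def suggest_platform(needs: Needs) -> str:
    """Count the tips pointing to each platform and return the majority.

    Simple vote counting is an assumption of this sketch; a real project
    would weigh the needs according to its own priorities.
    """
    cap_votes = sum([needs.cloud_native_app, needs.runtime_agnostic, needs.enforce_twelve_factor])
    caas_votes = sum([needs.custom_software_stack, needs.network_control])
    if cap_votes > caas_votes:
        return "cloud application platform"
    if caas_votes > cap_votes:
        return "container as a service"
    return "no clear preference"


if __name__ == "__main__":
    print(suggest_platform(Needs(cloud_native_app=True, runtime_agnostic=True)))
    print(suggest_platform(Needs(custom_software_stack=True, network_control=True)))
```

A team that needs a custom software stack and fine-grained network control lands on container as a service, while a team that only wants to push cloud-native code lands on the cloud application platform.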

Luckily for you, SUSE offers both!

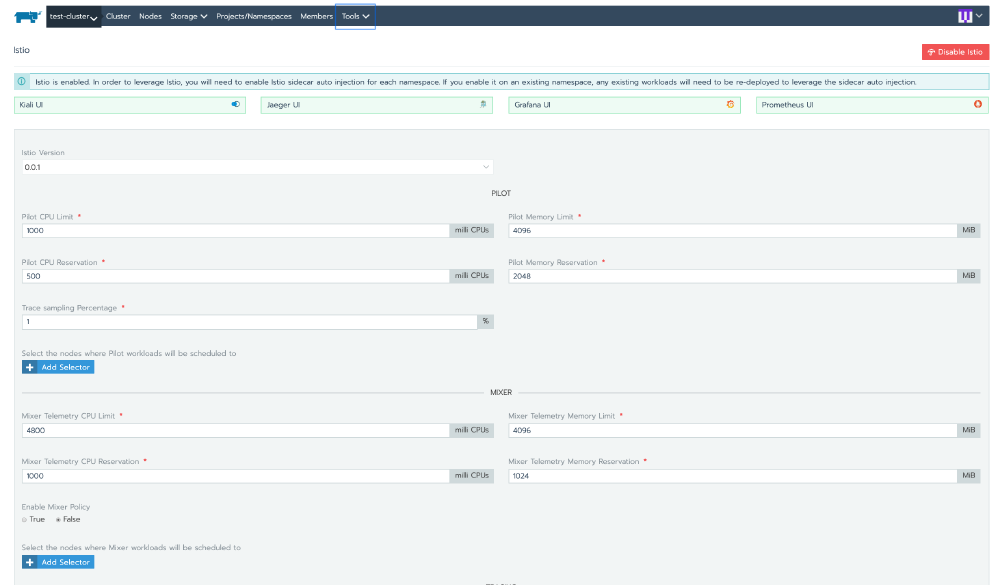

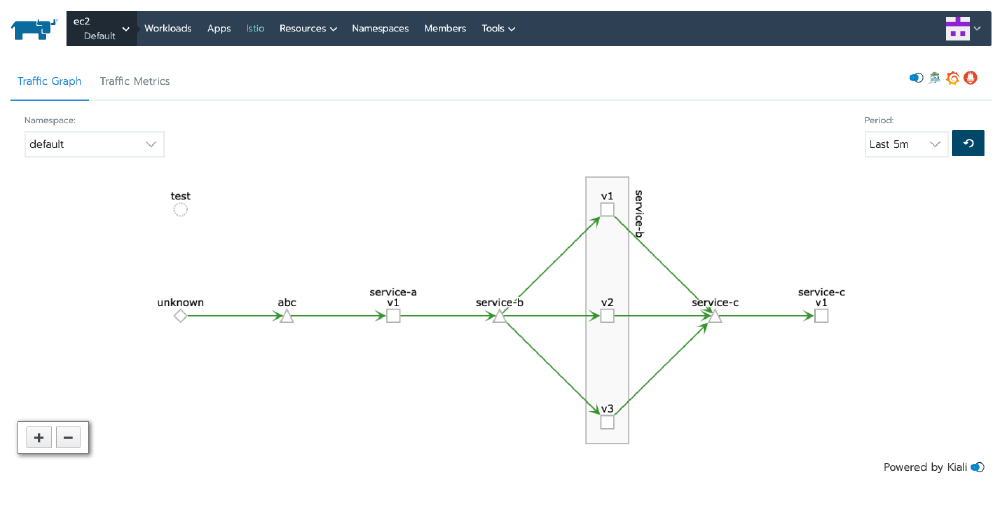

- A container as a service solution (SUSE CaaS Platform), based on the market-leading K8s and supporting both the Docker and CRI-O container runtimes.

- A cloud application platform solution (SUSE CAP), based on Cloud Foundry, one of the most powerful open source cloud application development platforms, supporting multiple modes of deployment and integration with private clouds, public clouds and on-premises runtimes.

SUSE also offers a variety of professional services offerings to help you on your journey. From confirmation and validation to full blown design, implementation and premium support services, our consultants are ready to be your trusted partners. Learn more at suse.com/services.
