How to Protect Against Kubernetes Attacks with the SUSE Container Security Platform

What are common challenges with Kubernetes and container security?

The rise of containers and Kubernetes has transformed application development, allowing businesses to deploy modern apps with unprecedented speed and agility. However, this shift to containerized infrastructure also introduces new security challenges. Compared with traditional applications, containers have an expanded attack surface: more potential entry points for malicious actors who are keenly aware of this paradigm shift. To address these evolving threats effectively, security teams need a comprehensive solution that delivers deep visibility into runtime environments and applies security best practices across the container supply chain. It is equally crucial to equip development teams with the tools to secure the software supply chain from the very beginning.

Many enterprises find it difficult to build cloud native security expertise in-house. The sheer volume of threats can overwhelm internal security teams, and traditional security professionals may lack the specialized knowledge required to secure these dynamic environments. Organizations that struggle to find or train staff with the necessary expertise can be left vulnerable to sophisticated attacks targeting containerized applications. Most organizations need not only a solution but also an expert team that can ensure proper configuration and alignment with the organization’s risk profile to minimize the risk of a breach.

What is NeuVector Prime?

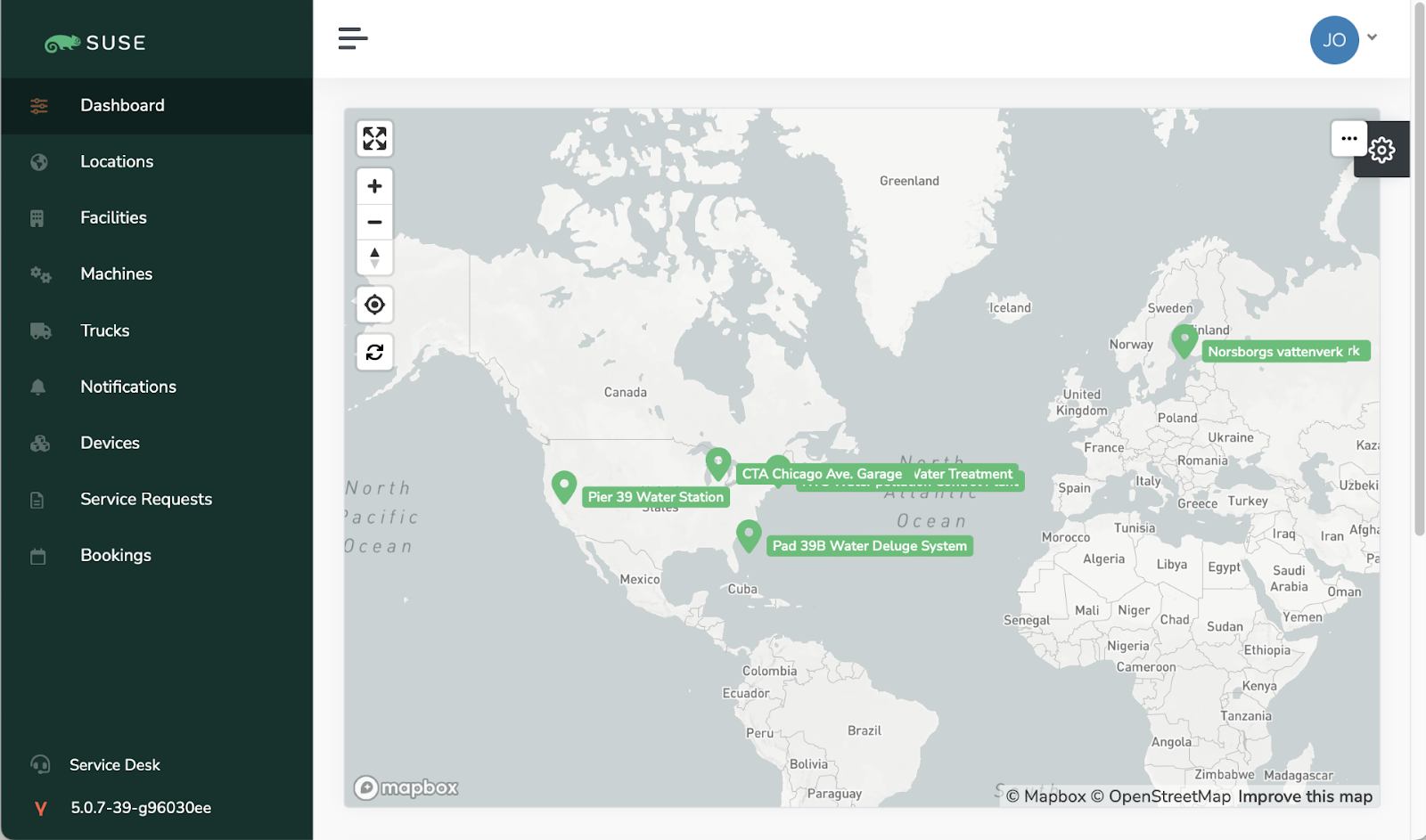

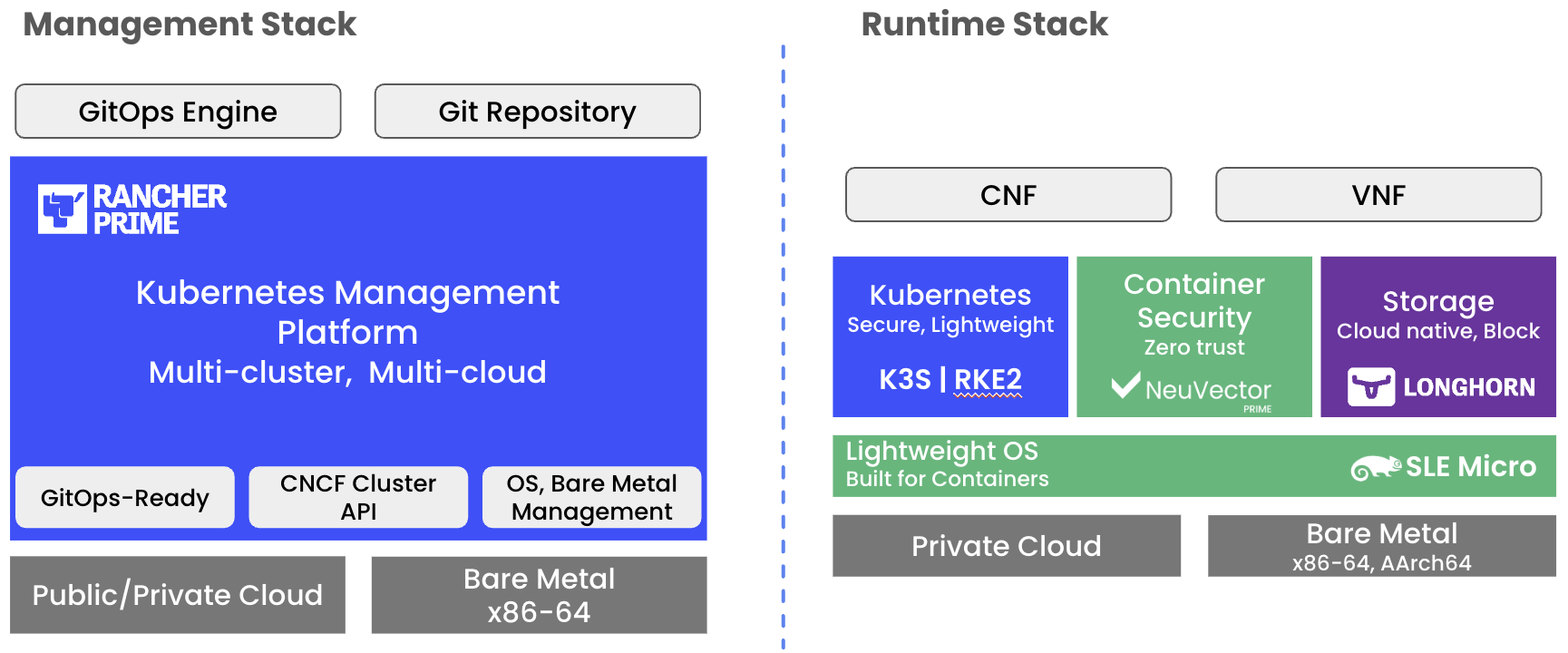

SUSE’s container security platform, NeuVector Prime, is the industry’s only 100% open source, zero trust platform designed for full lifecycle container security. NeuVector Prime leverages its Kubernetes-native architecture to provide unparalleled visibility and control at the cluster layer so attacks can be detected and blocked. It offers capabilities that other container security solutions don’t, such as deep packet inspection (DPI), Layer 7 firewall protection, zero trust security, automated security policies, and data loss prevention (DLP).

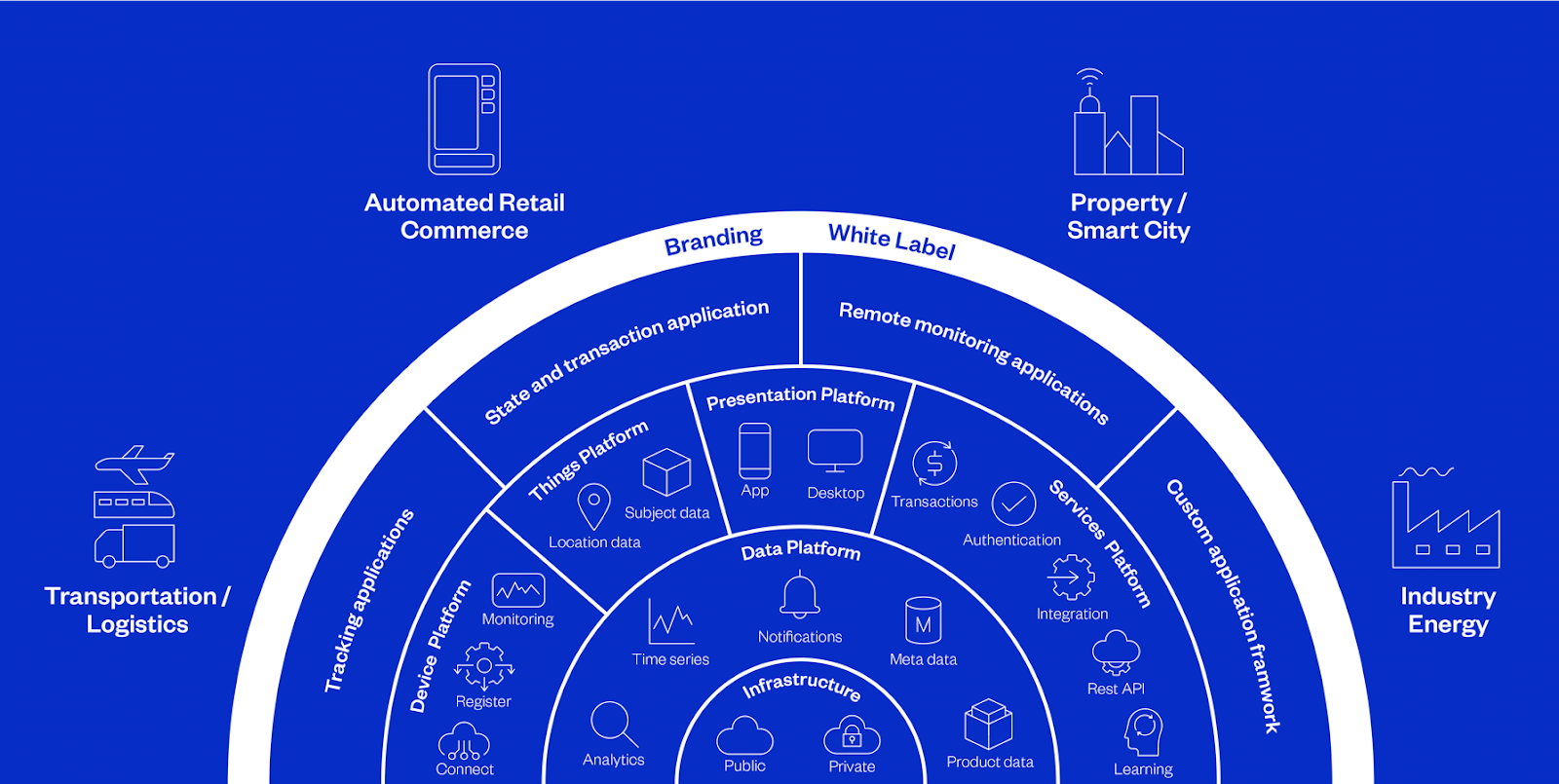

Image 1: NeuVector Prime Dashboard

What are the benefits of NeuVector Prime by SUSE?

Kubernetes-Native:

- NeuVector Prime secures any Kubernetes environment, whether it runs on Rancher, Red Hat OpenShift, Tanzu Kubernetes, Amazon EKS, Microsoft AKS, IBM IKS, or Google GKE, and whether the environment is air-gapped, on-premises, or in the cloud.

- Seamless integration with Kubernetes platforms lets organizations deploy a secure cloud native stack simply.

- Because NeuVector Prime runs inside the cluster, it is isolated from the cloud service provider (CSP). Organizations can therefore improve their Kubernetes security posture instantly without changing the compliance status of the environment (for example, an environment that was FedRAMP compliant before NeuVector Prime was deployed remains FedRAMP compliant).

Infrastructure-as-Code and Security-as-Code capabilities:

- As many organizations adopt hybrid-cloud and multi-cloud environments, NeuVector Prime allows them to move easily from one cloud provider to another without compromising security. Because security does not depend on a specific cloud provider, workloads are easier to secure wherever they run.

- Security policies, access controls, and vulnerability scans are defined within code files, ensuring consistent and automated security implementation across the infrastructure. This aligns security practices with the DevOps workflow, fostering a more collaborative “DevSecOps” approach.

- Because policies are defined in code, they can be deployed and enforced automatically, making security implementation consistent and repeatable across container environments.
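To make the security-as-code idea concrete, here is a minimal, hypothetical Python sketch of an allowlist policy kept in version control and evaluated with a default-deny (zero trust) rule. The `Rule` type, the `evaluate` function, and the service names are illustrative assumptions; this is not NeuVector’s custom resource schema or API.

```python
from dataclasses import dataclass

# Hypothetical policy-as-code model; NOT NeuVector's actual CRD schema.
@dataclass(frozen=True)
class Rule:
    source: str       # workload group allowed to initiate the connection
    destination: str  # workload group being contacted
    port: int         # destination port
    protocol: str     # e.g. "tcp"

# The policy lives in a version-controlled file and is reviewed like any code change.
POLICY = [
    Rule(source="frontend", destination="api", port=8080, protocol="tcp"),
    Rule(source="api", destination="db", port=5432, protocol="tcp"),
]

def evaluate(policy: list, source: str, destination: str,
             port: int, protocol: str) -> str:
    """Return "allow" only when an explicit rule matches; otherwise deny (zero trust)."""
    request = Rule(source, destination, port, protocol)
    return "allow" if request in policy else "deny"

if __name__ == "__main__":
    print(evaluate(POLICY, "frontend", "api", 8080, "tcp"))  # allow
    print(evaluate(POLICY, "frontend", "db", 5432, "tcp"))   # deny: no explicit rule
```

Because anything not explicitly listed is denied, the policy file becomes the single reviewable source of truth for allowed traffic, which is what makes automated, repeatable enforcement practical.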

Extensive visibility in production while still securing the build:

- The NeuVector Prime network protection (firewall) operates at Layer 7 (the application layer), whereas most other container security offerings operate at Layers 3 and 4. Layer 7 visibility enables organizations to protect containers against attacks from internal and external networks, including real-time identification and blocking of network, packet, zero-day, and application attacks such as DDoS and DNS attacks. This visibility also lets organizations create granular network policies that are not possible with other container security solutions.

- Ensure supply-chain security through vulnerability management and image scanning without impacting innovation. DevOps teams can deploy new apps with integrated security policies to ensure they are secured throughout the CI/CD pipeline and into production.
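The difference between Layer 3/4 and Layer 7 filtering can be illustrated with a small, hypothetical Python sketch. A Layer 3/4 rule only sees destinations, ports, and protocols, so it must allow or block all traffic to `api:8080` as a whole; a Layer 7 rule can also inspect application data such as the HTTP method and path. The `Connection` type, the rule functions, and the paths are assumptions for illustration and do not represent how NeuVector implements deep packet inspection.

```python
from dataclasses import dataclass

# Hypothetical view of one request; a real firewall reconstructs this from packets.
@dataclass
class Connection:
    dst_host: str
    dst_port: int
    http_method: str  # only visible to a Layer 7 (application layer) filter
    http_path: str    # only visible to a Layer 7 (application layer) filter

def l4_allows(conn: Connection) -> bool:
    """Layer 3/4 rule: decides on destination and port alone."""
    return conn.dst_host == "api" and conn.dst_port == 8080

def l7_allows(conn: Connection) -> bool:
    """Layer 7 rule: same network check, plus an application-level restriction."""
    if not l4_allows(conn):
        return False
    # Allow only read-only calls to the public API; anything else is blocked.
    return conn.http_method == "GET" and conn.http_path.startswith("/v1/public/")

if __name__ == "__main__":
    normal = Connection("api", 8080, "GET", "/v1/public/items")
    attack = Connection("api", 8080, "POST", "/admin/delete-all")
    print(l4_allows(normal), l7_allows(normal))  # True True
    print(l4_allows(attack), l7_allows(attack))  # True False
```

Both requests look identical to the Layer 3/4 rule; only the application-aware rule can tell them apart.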

What is the difference between NeuVector and NeuVector Prime?

NeuVector is the name of the open source project. NeuVector Prime is the commercial offering from SUSE that organizations use to secure their Kubernetes environments. It gives customers enterprise-level support and the option to add consulting and other services from SUSE.

With sensitive data or business-critical workloads, your organization can’t afford to use an unsupported security solution, especially if an exploit occurs. NeuVector Prime offers more than break/fix support. SUSE’s support team can help properly deploy NeuVector and configure its extensive security layers so attackers can be detected and blocked. NeuVector Prime subscribers get access to Advanced Sizing and Planning guides, a Common Vulnerabilities and Exposures (CVE) database lookup service, and security templates and rules before they are published to the community. In addition, NeuVector Prime integrates seamlessly with Rancher Prime (and other Kubernetes management platforms) through an integrated UI extension, role-based access control (RBAC) mapping, and other features that enable simple deployment of a ‘secure cloud native stack.’

Specifically, SUSE’s NeuVector Prime customers get:

- SLA-backed product support services, root cause analysis (RCA), and troubleshooting to make sure NeuVector is configured properly for maximum protection

- Vulnerability (CVE) investigation and triage assistance to support vulnerability management and remediation efforts

- Best practices and hardening assistance (e.g., segmentation, network and process profiling, admission controls) across all layers of security

- Runtime threat rule configuration optimization

- Access to assets and services (e.g., performance tuning, CVE lookups) for deployment planning and scalability

- Built-in, supported native integration with Rancher Manager and Rancher Distributions (e.g., UI extension) to easily deploy and manage a secure cloud native stack

Learn More

To learn more about SUSE’s container security platform, register for the upcoming webinar on all things NeuVector Prime.