A Step-by-Step Guide to Setting Up SUSE CaaS Platform (with Installation Video)

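This article is part of the step-by-step tutorial series and covers setting up SUSE CaaS Platform. It was contributed by SUSE technical expert Arthur Yang.

SUSE CaaS Platform is an enterprise-grade container management solution that makes it easier for IT and DevOps professionals to deploy, manage, and scale container-based applications and services. It includes Kubernetes to automate lifecycle management of modern applications, along with a number of surrounding technologies that extend Kubernetes and make the platform itself easy to use. As a result, enterprises using SUSE CaaS Platform can shorten application delivery cycles and improve business agility.

The rest of this article walks through setting up SUSE CaaS Platform step by step.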

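1. Scope

This guide is intended for users who deliver a container platform on SLES, users with some knowledge of SLES, users with some knowledge of community Kubernetes, and other customers running SUSE operating systems.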

2. Abbreviations

| Abbreviation | Meaning |
| --- | --- |
| SLES | SUSE Linux Enterprise Server |
| CaaSP | SUSE CaaS Platform |

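3. Requirements

| Item | Requirement | Notes |
| --- | --- | --- |
| Operating system | SLES 15 SP1 | |
| Master node | At least 2 CPUs, 16 GB RAM, 50 GB disk | K8s master node, runs api-server |
| Worker node | At least 1 CPU, 16 GB RAM, 50 GB disk | K8s worker node |
| Management node | At least 1 CPU, 16 GB RAM, 50 GB disk | SUSE CaaS Platform management node |
| Network | 1 Gbit/s | |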

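4. PoC Architecture

A simple PoC architecture is shown below:

The planned configuration of each node is as follows. Note that this PoC uses less memory per node than the requirements in Section 3 specify.

| Role | Hardware Specification | Hostname | Network (default) |
| --- | --- | --- | --- |
| Management | 2 vCPU, 8GB RAM, 50GB HDD, 1 NIC | caasp-mgt.suse.lab | 192.168.64.139/24 |
| Master | 2 vCPU, 8GB RAM, 50GB HDD, 1 NIC | caasp-master.suse.lab | 192.168.64.140/24 |
| Worker | 2 vCPU, 4GB RAM, 50GB HDD, 1 NIC | caasp-worker-001.suse.lab | 192.168.64.141/24 |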

5. 安装节点

5.1. 系统安装

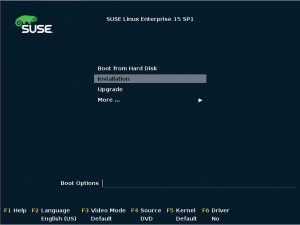

- 使用光盘或移动硬盘引导

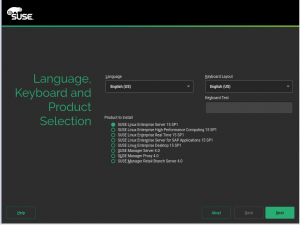

- 选择产品

选择SUSE Linux Enterprise Server 15 SP1

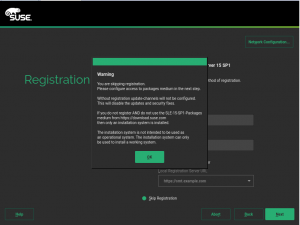

- 跳过注册步骤

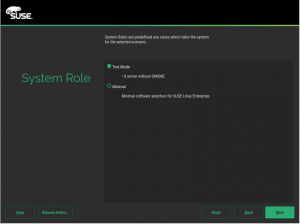

- 选择系统类型

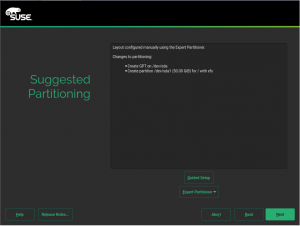

- 分区

注意不要使用btrfs文件系统,不要添加swap分区

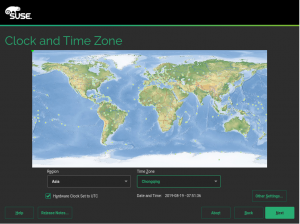

- 选择时区

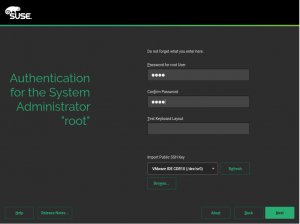

- 创建用户

可以跳过普通用户创建,直接给root用户设置密码

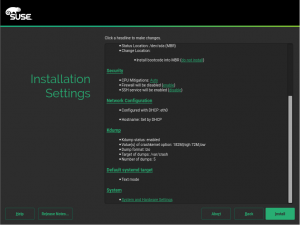

- 禁用防火墙

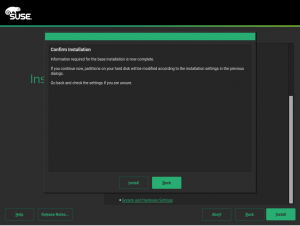

- 开始系统安装

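5.2. Node Configuration

Perform the following steps on all nodes.

- Configure the network

After installation, configure the network with yast2, including the gateway and DNS, and make sure every server can reach the Internet.

- Register the product

# Activate the SLES 15 SP1 subscription

SUSEConnect -r <reg-code>

# Enable the Containers module

SUSEConnect -p sle-module-containers/15.1/x86_64

# Activate the CaaSP 4.0 subscription

SUSEConnect -p caasp/4.0/x86_64 -r <reg-code>

After successful registration, the repositories look as follows: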

- Enable IP forwarding

Add or modify the following line in /etc/sysctl.conf, then run sysctl -p to apply it without a reboot:

| net.ipv4.ip_forward = 1 |
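Editing /etc/sysctl.conf by hand on every node is easy to get wrong, so the step can also be scripted. The following is only a sketch: the function name `set_sysctl_key` is made up for this illustration, and it takes the file path as an optional third argument so it can be tried on a scratch file first.

```shell
# Idempotently set "key = value" in a sysctl-style configuration file.
# set_sysctl_key is a hypothetical helper, not part of SLES or CaaSP.
# Usage: set_sysctl_key <key> <value> [file]   (file defaults to /etc/sysctl.conf)
set_sysctl_key() {
    key=$1
    value=$2
    conf=${3:-/etc/sysctl.conf}
    # Escape the dots so they match literally in the regular expressions below
    key_re=$(printf '%s' "$key" | sed 's/\./\\./g')
    touch "$conf"
    if grep -Eq "^[[:space:]]*${key_re}[[:space:]]*=" "$conf"; then
        # An active (uncommented) entry exists: rewrite it in place
        sed "s|^[[:space:]]*${key_re}[[:space:]]*=.*|${key} = ${value}|" "$conf" > "$conf.tmp" &&
            cat "$conf.tmp" > "$conf" && rm -f "$conf.tmp"
    else
        # No active entry: append one
        printf '%s = %s\n' "$key" "$value" >> "$conf"
    fi
}

# Example, as root on each node:
#   set_sysctl_key net.ipv4.ip_forward 1
#   sysctl -p
```

Running the function twice leaves a single entry in the file, which makes it safe to re-run on nodes that were already configured.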

- Edit the hosts file

| 192.168.64.139 caasp-mgt.suse.lab caasp-mgt
192.168.64.140 caasp-master.suse.lab caasp-master
192.168.64.141 caasp-worker-001.suse.lab caasp-worker-001 |

- Update the system

| zypper up |

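- Check whether any machine-ids are identical

To check:

| cat /etc/machine-id |

If the master and worker nodes share the same machine-id (which typically happens when nodes are cloned from the same image), generate a new one as follows:

| rm -f /etc/machine-id
dbus-uuidgen --ensure=/etc/machine-id |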
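With more than a handful of nodes, comparing the IDs by eye gets tedious. As a sketch, the comparison can be done with a small filter; `find_duplicate_machine_ids` is a name invented for this example, and the collection loop in the comments assumes root SSH access to the nodes.

```shell
# Reads "<host> <machine-id>" lines on stdin and prints every machine-id
# that is reported by more than one host (prints nothing if all are unique).
# find_duplicate_machine_ids is a hypothetical helper for illustration.
find_duplicate_machine_ids() {
    awk '{ count[$2]++ } END { for (id in count) if (count[id] > 1) print id }'
}

# Collecting the input (assumes root SSH access to each node):
#   for h in caasp-master caasp-worker-001; do
#       printf '%s %s\n' "$h" "$(ssh root@"$h" cat /etc/machine-id)"
#   done | find_duplicate_machine_ids
```

Any ID printed by the filter belongs to at least two nodes, and all but one of them need a regenerated machine-id.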

5.3. Management Node Configuration

- SSH trust

| ssh-keygen
ssh-copy-id root@192.168.64.140
ssh-copy-id root@192.168.64.141 |

- ssh-agent

| eval "$(ssh-agent)"
ssh-add /root/.ssh/id_rsa |

- Install the management packages

| zypper in -t pattern SUSE-CaaSP-Management |

| # The command above installs the following packages:
helm kubernetes-client kubernetes-common libscg1_0 libschily1_0 mkisofs patterns-base-basesystem patterns-caasp-Management skuba terraform terraform-provider-local terraform-provider-null terraform-provider-openstack terraform-provider-template terraform-provider-vsphere zisofs-tools
# and the following two patterns:
SUSE-CaaSP-Management basesystem |

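6. Initializing the Cluster

Perform all of the following steps on the management node.

6.1. Initialize the Cluster

The last line below is the general form of the bootstrap command; the concrete command for this PoC is given in 6.2.

| skuba cluster init --control-plane 192.168.64.140 my-cluster
cd my-cluster
skuba node bootstrap --target <IP/FQDN> <NODE_NAME> |

6.2. Bootstrap the Master Node

| cd my-cluster
skuba node bootstrap --target 192.168.64.140 caasp-master |

# Output like the following indicates that the command succeeded:

| [bootstrap] successfully bootstrapped node "master" with Kubernetes: "1.16.2" |

6.3. Join the Worker Node to the Cluster

| skuba node join --role worker --target 192.168.64.141 caasp-worker-001 |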
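When several workers have to be joined, the same command is repeated once per node. The sketch below only prints the commands so they can be reviewed before anything is run. `gen_worker_join_cmds` is a name made up for this example, and the second worker (192.168.64.142, caasp-worker-002) is hypothetical and not part of this PoC.

```shell
# Prints one "skuba node join" command per "<ip> <node-name>" line on stdin.
# Nothing is executed; the output is meant to be reviewed first.
# gen_worker_join_cmds is a hypothetical helper for illustration.
gen_worker_join_cmds() {
    while read -r ip name; do
        # Skip empty or incomplete lines
        [ -n "$ip" ] && [ -n "$name" ] || continue
        printf 'skuba node join --role worker --target %s %s\n' "$ip" "$name"
    done
}

# The second entry is a made-up example of an additional worker.
gen_worker_join_cmds <<'EOF'
192.168.64.141 caasp-worker-001
192.168.64.142 caasp-worker-002
EOF
```

Once the printed commands look right, they can be run one at a time from inside the my-cluster directory.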

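7. Testing the Cluster

Perform all of the following steps on the management node.

7.1. Set Up the kubectl Configuration File

# Make sure /root/.kube exists; mkdir -p creates it only if it is missing

| mkdir -p ~/.kube
cp /root/my-cluster/admin.conf ~/.kube/config |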
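Because admin.conf carries cluster-admin credentials, it is reasonable to restrict its permissions when copying it. The following is a sketch of the same step as a small function; `install_kubeconfig` is a name invented here, and the `chmod 600` is an addition that the original steps do not include.

```shell
# Copy a kubeconfig into place and make it readable by its owner only.
# install_kubeconfig is a hypothetical helper for illustration.
# Usage: install_kubeconfig <source-file> [target-dir]   (target defaults to ~/.kube)
install_kubeconfig() {
    src=$1
    dest_dir=${2:-$HOME/.kube}
    if [ ! -f "$src" ]; then
        echo "kubeconfig not found: $src" >&2
        return 1
    fi
    mkdir -p "$dest_dir"
    cp "$src" "$dest_dir/config"
    chmod 600 "$dest_dir/config"   # assumption: tighter permissions than the plain cp
}

# Example on the management node:
#   install_kubeconfig /root/my-cluster/admin.conf
```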

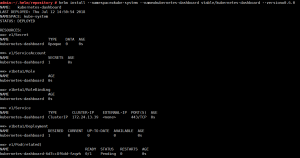

7.2. Verify the Cluster Status

# Check with kubectl

| kubectl cluster-info |

# Check with skuba

| cd my-cluster
skuba cluster status |
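For a scripted check, the node list from `kubectl get nodes --no-headers` can be filtered for nodes that are not ready. This is a sketch under the assumption that the second column of that output is the node status; `not_ready_nodes` is a name made up for the example.

```shell
# Reads the output of `kubectl get nodes --no-headers` on stdin
# (columns: NAME STATUS ROLES AGE VERSION) and prints the name of every node
# whose STATUS is not exactly "Ready". A cordoned node
# ("Ready,SchedulingDisabled") is therefore reported as well.
# not_ready_nodes is a hypothetical helper for illustration.
not_ready_nodes() {
    awk '$2 != "Ready" { print $1 }'
}

# Example on the management node:
#   kubectl get nodes --no-headers | not_ready_nodes
```

An empty result means every node reported Ready at the time of the query.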

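8. Installing the Kubernetes Dashboard

8.1. Download Helm

Helm is normally available from the system repositories, so first try installing it as follows:

| zypper install helm |

If that does not work, download and install a release manually:

| https://github.com/helm/helm/releases
# Extract the archive and copy the binary into place:
tar zxvf helm-v2.14.2-linux-amd64.tar.gz
cp linux-amd64/helm /usr/local/bin/helm |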

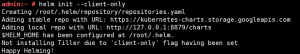

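Initialize Helm:

helm init --client-only

helm init

If the image downloads slowly or cannot be downloaded at all, edit the Tiller pod manually:

kubectl --namespace kube-system edit pods tiller-deploy-65867875cb

Replace gcr.io with gcr.azk8s.cn in the image reference.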

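8.2. Add a Chart Repository Mirror in China

# Log in to the admin node:

helm init --client-only

(Note: if this step fails, create an empty file with vi /root/.helm/repository/repositories.yaml and run the command again.)

# Remove the original repository first

helm repo remove stable

# Add the new repository URL

helm repo add stable https://kubernetes.oss-cn-hangzhou.aliyuncs.com/charts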

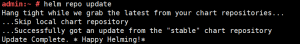

# Update the repositories

helm repo update

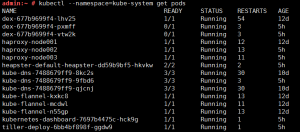

8.3. 安装heapster和kubernetes-dashboard

helm install –name heapster-default –namespace=kube-system stable/heapster –version=0.2.7 –set rbac.create=true

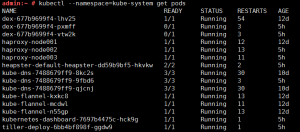

输入kubectl –namespace=kube-system get pods,检查是不是包含pod name: heapster-default-heapster

helm search |grep kubernetes-dashboard

![]()

helm install –namespace=kube-system –name=kubernetes-dashboard stable/kubernetes-dashboard –version=0.6.0

输入kubectl –namespace=kube-system get pods,获取检查是不是包含pod name: kubernetes-dashboard-7697b4475c-hck9g

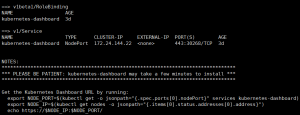

8.4. kubernetes-dashboard更新端口

helm upgrade kubernetes-dashboard stable/kubernetes-dashboard –set service.type=NodePort

kubectl –namespace=kube-system describe pods kubernetes-dashboard-xxxxx

kubectl get pods –all-namespaces -o wide

通过以下查看kubernetes-dashboard运行在哪个节点上,记录节点IP。

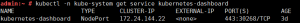

kubectl -n kube-system get service kubernetes-dashboard

记录下这个30268这个端口号。
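Instead of reading the port off the screen, it can be extracted in a script. The sketch below parses a PORT(S) value of the form `443:30268/TCP` as printed by `kubectl get service`; `extract_nodeport` is a name invented for this example, and the service port 443 in the sample value is an assumption. The commented alternative uses kubectl's jsonpath output.

```shell
# Prints the NodePort from a PORT(S) value such as "443:30268/TCP".
# Prints nothing if the value has no NodePort part (for example "443/TCP").
# extract_nodeport is a hypothetical helper for illustration.
extract_nodeport() {
    printf '%s\n' "$1" | sed -n 's|^[0-9]*:\([0-9]*\)/.*$|\1|p'
}

extract_nodeport '443:30268/TCP'

# Alternative that asks the API server directly:
#   kubectl -n kube-system get service kubernetes-dashboard \
#       -o jsonpath='{.spec.ports[0].nodePort}'
```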

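8.5. Open the Dashboard

In a browser, open the IP address and port noted above:

Upload the kubeconfig downloaded earlier to the admin node.

To log in with a token, run the following on the admin node (do not let the browser save the password):

grep "id-token" kubeconfig | awk '{print $2}'

The token is the value that follows id-token: in the kubeconfig. The matching line looks like this: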

id-token: eyJhbGciOiJSUzI1NiIsImtpZCI6ImU0NjY4OWQ3MDBmNmE2OGI5ZTllNzEyZTliNjNlZjEzMTI5MTcyYzIifQ.eyJpc3MiOiJodHRwczovL2RvY2tlcjEubGFuLmNvbTozMjAwMCIsInN1YiI6IkNqSjFhV1E5WVdKalpGOXdhVzVuTEc5MVBWQmxiM0JzWlN4a1l6MXBibVp5WVN4a1l6MWpZV0Z6Y0N4a1l6MXNiMk5oYkJJRWJHUmhjQSIsImF1ZCI6Imt1YmVybmV0ZXMiLCJleHAiOjE1MzE0ODIyMjUsImlhdCI6MTUzMTM5NTgyNSwiYXpwIjoidmVsdW0iLCJub25jZSI6ImRmMWNiOTJjNTVlM2I1MWNkNWU5NTA1NjJkMTRlMzY2IiwiYXRfaGFzaCI6Ikptdmhibkpod1g4M3BJQzZNelVWSXciLCJlbWFpbCI6ImFiY2RfcGluZ0AxNjMuY29tIiwiZW1haWxfdmVyaWZpZWQiOnRydWUsImdyb3VwcyI6WyJBZG1pbmlzdHJhdG9ycyJdLCJuYW1lIjoiQSBVc2VyIn0.rA5xBV0EY-QejxPKG9gzjqoLHlLcgvysu6ShByLKM98SIm4qsBy8UDnLsxYL38RMN7ZYge_IIibZrspFt0V8LJ2dIAh3riLqtVD_2BYXuajLTO2a5OMwFyMtQCgM7GGd_3aFBypHsJMHl1TNgr39cHALTBbWJ9mCxS43ZSar1jQ3xCaRR9v-pY1qMb_MU_hpJ4olFjpRTCyYWEpsi6OeNRwrVWnk_Xampq7G3LgiN-esNMSSRDZqo0dKK-wwTiIibhCzHtCeTy3jxXlbL1bBDbsKuHGVVcnjsqAGlorwW_ZrX-JrLVmcKZgafFyNKmqr0Lnmauhx8HnLj0qZMk0-MA

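9. Test Cases

9.1. Deploying WordPress

We will deploy a simple WordPress + MySQL application to the CaaSP platform. The steps are as follows.

Create a working directory and change into it:

| mkdir /root/wordpress_example
cd /root/wordpress_example |

Generate the kustomization file:

| cat <<EOF >./kustomization.yaml
secretGenerator:
- name: mysql-pass
  literals:
  - password=YOUR_PASSWORD
EOF |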

Create mysql-deployment.yaml with the following content:

| apiVersion: v1
kind: Service
metadata:
  name: wordpress-mysql
  labels:
    app: wordpress
spec:
  ports:
    - port: 3306
  selector:
    app: wordpress
    tier: mysql
  clusterIP: None
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: mysql-pv-claim
  labels:
    app: wordpress
spec:
  storageClassName: default
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 20Gi
---
apiVersion: apps/v1 # for versions before 1.9.0 use apps/v1beta2
kind: Deployment
metadata:
  name: wordpress-mysql
  labels:
    app: wordpress
spec:
  selector:
    matchLabels:
      app: wordpress
      tier: mysql
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: wordpress
        tier: mysql
    spec:
      containers:
      - image: mysql:5.6
        name: mysql
        env:
        - name: MYSQL_ROOT_PASSWORD
          valueFrom:
            secretKeyRef:
              name: mysql-pass
              key: password
        ports:
        - containerPort: 3306
          name: mysql
        volumeMounts:
        - name: mysql-persistent-storage
          mountPath: /var/lib/mysql
      volumes:
      - name: mysql-persistent-storage
        persistentVolumeClaim:
          claimName: mysql-pv-claim |

Create wordpress-deployment.yaml with the following content:

| apiVersion: v1
kind: Service
metadata:
  name: wordpress
  labels:
    app: wordpress
spec:
  ports:
    - port: 80
  selector:
    app: wordpress
    tier: frontend
  type: LoadBalancer
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: wp-pv-claim
  labels:
    app: wordpress
spec:
  storageClassName: default
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 20Gi
---
apiVersion: apps/v1 # for versions before 1.9.0 use apps/v1beta2
kind: Deployment
metadata:
  name: wordpress
  labels:
    app: wordpress
spec:
  selector:
    matchLabels:
      app: wordpress
      tier: frontend
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: wordpress
        tier: frontend
    spec:
      containers:
      - image: wordpress:4.8-apache
        name: wordpress
        env:
        - name: WORDPRESS_DB_HOST
          value: wordpress-mysql
        - name: WORDPRESS_DB_PASSWORD
          valueFrom:
            secretKeyRef:
              name: mysql-pass
              key: password
        ports:
        - containerPort: 80
          name: wordpress
        volumeMounts:
        - name: wordpress-persistent-storage
          mountPath: /var/www/html
      volumes:
      - name: wordpress-persistent-storage
        persistentVolumeClaim:
          claimName: wp-pv-claim |

Create a PersistentVolume for MySQL:

| kubectl create -f mysql-pv.yaml |

The content of mysql-pv.yaml is:

| apiVersion: v1
kind: PersistentVolume
metadata:
  name: mysql
  labels:
    type: local
spec:
  storageClassName: default
  capacity:
    storage: 20Gi
  accessModes:
    - ReadWriteOnce
  hostPath:
    path: "/mnt/wordpress" |

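Create a PersistentVolume for WordPress. The file is named wordpress-pv.yaml here so that it cannot be confused with the WordPress deployment file:

| kubectl create -f wordpress-pv.yaml |

The content of wordpress-pv.yaml is:

| apiVersion: v1
kind: PersistentVolume
metadata:
  name: wordpress
  labels:
    type: local
spec:
  storageClassName: default
  capacity:
    storage: 20Gi
  accessModes:
    - ReadWriteOnce
  hostPath:
    path: "/mnt/wordpress" |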

Add the two deployment files (mysql-deployment.yaml and wordpress-deployment.yaml) to the kustomization:

| cat <<EOF >>./kustomization.yaml
resources:
- mysql-deployment.yaml
- wordpress-deployment.yaml
EOF |
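The two heredoc steps can also be combined so that the complete kustomization.yaml is written in one go. The sketch below writes to a scratch directory created with `mktemp` purely for illustration (the article itself works in /root/wordpress_example); `YOUR_PASSWORD` remains a placeholder.

```shell
# Write the complete kustomization.yaml (secret generator plus resources)
# in a single step. The scratch directory is only used for this illustration.
workdir=$(mktemp -d)

# Quoting 'EOF' keeps the shell from expanding anything inside the heredoc.
cat > "$workdir/kustomization.yaml" <<'EOF'
secretGenerator:
- name: mysql-pass
  literals:
  - password=YOUR_PASSWORD
resources:
- mysql-deployment.yaml
- wordpress-deployment.yaml
EOF

cat "$workdir/kustomization.yaml"
```

The file names under `resources:` have to match the deployment files in the same directory, otherwise `kubectl apply -k` cannot find them.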

Apply the configuration:

| cd /root/wordpress_example
kubectl apply -k ./ |

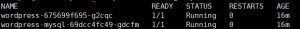

Check whether the application was deployed successfully:

| kubectl get pods |

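9.2. Integrating with SUSE Enterprise Storage

9.2.1. Install ceph-common

Install ceph-common on all CaaSP nodes:

| zypper -n in ceph-common |

Copy ceph.conf to the worker nodes (run on each worker; the file is fetched from the host named admin):

| scp admin:/etc/ceph/ceph.conf /etc/ceph |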

9.2.2. Ceph Cluster Configuration

In the Ceph cluster, create the pool caasp4:

| ceph osd pool create caasp4 128 |

In the Ceph cluster, create a key and store it under /etc/ceph:

| ceph auth get-or-create client.caasp4 mon 'allow r' \
    osd 'allow rwx pool=caasp4' -o /etc/ceph/caasp4.keyring |

In the Ceph cluster, create a 2 GB RBD image:

| rbd create caasp4/ceph-image-test -s 2048 |

View the key information in the Ceph cluster; the client.caasp4 key is base64-encoded in the next step:

| # ceph auth list
.......
client.admin
        key: AQA9w4VdAAAAABAAHZr5bVwkALYo6aLVryt7YA==
        caps: [mds] allow *
        caps: [mgr] allow *
        caps: [mon] allow *
        caps: [osd] allow *
.......
client.caasp4
        key: AQD1VJddM6QIJBAAlDbIWRT/eiGhG+aD8SB+5A==
        caps: [mon] allow r
        caps: [osd] allow rwx pool=caasp4 |

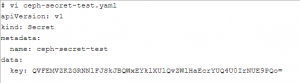

Base64-encode the key. Use echo -n so that no trailing newline ends up in the encoded value:

| # echo -n AQD1VJddM6QIJBAAlDbIWRT/eiGhG+aD8SB+5A== | base64
QVFEMVZKZGRNNlFJSkJBQWxEYklXUlQvZWlHaEcrYUQ4U0IrNUE9PQ== |
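A common pitfall when encoding the key is that plain `echo` appends a newline, which is then encoded along with the key. The following sketch compares both variants for the sample key from this article; the variable names are chosen only for this illustration.

```shell
# Compare base64 output with and without the trailing newline that echo adds.
key='AQD1VJddM6QIJBAAlDbIWRT/eiGhG+aD8SB+5A=='

with_newline=$(echo "$key" | base64)            # encodes the key plus "\n"
without_newline=$(printf '%s' "$key" | base64)  # encodes the key only

echo "echo   : $with_newline"
echo "printf : $without_newline"
```

The two results differ only at the end: the `echo` variant ends in `PQo=`, while the `printf` variant ends in `PQ==`. The second form is the one that decodes back to exactly the key.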

9.2.3. 在caasp集群中创建资源

在master节点上,创建secret资源,插入base64 key

| # kubectl create -f ceph-secret-test.yaml

secret/ceph-secret-test created

# kubectl get secrets ceph-secret-test NAME TYPE DATA AGE ceph-secret-test Opaque 1 14s |

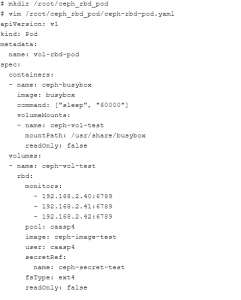

创建pod,并使用存储卷

查看pod状态和挂载

| # kubectl get pods

NAME READY STATUS RESTARTS AGE vol-rbd-pod 1/1 Running 0 22s

# kubectl get pods -o wide NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES vol-rbd-pod 1/1 Running 0 27s 10.244.2.59 worker02 <none> <none> |

| # kubectl describe pods vol-rbd-pod

Containers: ceph-busybox: ….. Mounts: # 挂载 /usr/share/busybox from ceph-vol-test (rw) /var/run/secrets/kubernetes.io/serviceaccount from default-token-4hslq (ro) ….. Volumes: ceph-vol-test: Type: RBD (a Rados Block Device mount on the host that shares a pod’s lifetime) CephMonitors: [192.168.2.40:6789 192.168.2.41:6789 192.168.2.42:6789] RBDImage: ceph-image-test # RBD 镜像名 FSType: ext4 # 文件格式 RBDPool: caasp4 # 池 RadosUser: caasp4 # 用户 Keyring: /etc/ceph/keyring SecretRef: &LocalObjectReference{Name:ceph-secret-test,} ReadOnly: false default-token-4hslq: Type: Secret (a volume populated by a Secret) SecretName: default-token-4hslq Optional: false ….. |

Check the RBD mapping on the worker node:

| caasp-worker-001:~ # rbd showmapped
id  pool    namespace  image            snap  device
0   caasp4             ceph-image-test  -     /dev/rbd0 |

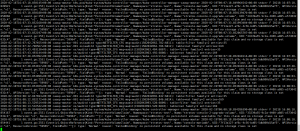

9.3. Centralized Logging

CaaSP 4.0 supports centralized log collection. The logs covered include:

kubelet, crio, API Server, Controller Manager, Scheduler, cilium, kernel, audit, network, pods, and others.

The test proceeds as follows.

Make sure the Helm version is 2.x (for example 2.16.1) and that Tiller is installed.

Create the service account for Tiller:

| kubectl create serviceaccount --namespace kube-system tiller |

Create the RBAC binding for Tiller:

| kubectl create clusterrolebinding tiller --clusterrole=cluster-admin --serviceaccount=kube-system:tiller |

Deploy Tiller:

| helm init --tiller-image registry.suse.com/caasp/v4/helm-tiller:2.14.2 --service-account tiller |

Deploy the rsyslog server, using SLES 15 SP1 as an example:

| 1. zypper in rsyslog
2. vim /etc/rsyslog.d/remote.conf
   Uncomment $ModLoad imudp.so and $UDPServerRun 514
3. rcsyslog restart
4. netstat -alnp | grep 514 |

Deploy central-agent:

| # helm install suse-charts/log-agent-rsyslog --name central-agent --set server.host=192.168.64.139 --set server.port=514 --set server.protocol=UDP
NAME:   central-agent
LAST DEPLOYED: Wed Feb 19 00:04:53 2020
NAMESPACE: default
STATUS: DEPLOYED

RESOURCES:
==> v1/ClusterRole
NAME                             AGE
central-agent-log-agent-rsyslog  0s

==> v1/ClusterRoleBinding
NAME                             AGE
central-agent-log-agent-rsyslog  0s

==> v1/ConfigMap
NAME                                       AGE
central-agent-log-agent-rsyslog            0s
central-agent-log-agent-rsyslog-rsyslog.d  0s

==> v1/DaemonSet
NAME                             AGE
central-agent-log-agent-rsyslog  0s

==> v1/Pod(related)
NAME                                   AGE
central-agent-log-agent-rsyslog-6lskq  0s
central-agent-log-agent-rsyslog-nxr86  0s

==> v1/Secret
NAME                             AGE
central-agent-log-agent-rsyslog  0s

==> v1/ServiceAccount
NAME                             AGE
central-agent-log-agent-rsyslog  0s

==> v1beta1/PodSecurityPolicy
NAME                             AGE
central-agent-log-agent-rsyslog  0s

NOTES:
Congratuations! You've successfully installed central-agent-log-agent-rsyslog. |

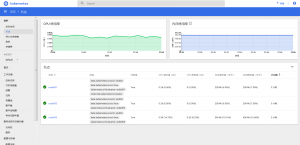

View the logs on the rsyslog server:

Video of this step-by-step SUSE CaaS Platform setup:

https://v.qq.com/x/page/c3069u57y44.html
